Wow, 4 years ago, I had a first look at Remote Desktop Sharing of a Wayland desktop on Ubuntu 22.04. At the time it was apparent that Wayland would replace X-windows in the medium term and I needed a solution for remote help when X-windows was no longer an option. Back then, I found it working overall, but the two main drawbacks were a strange delay of the last two typed characters and the inability to access a locked screen. As the next Ubuntu LTS version (26.04) is about to be released in about a month, I thought I’d have another go at it and look at how Wayland remote desktop sharing works on the current LTS version, 24.04.

Continue reading Wayland, Remote Desktop Sharing and Ubuntu 24.04 – Better Than on 22.04?

Network Printing with Linux – IPP, Raster, JPEG and PDF

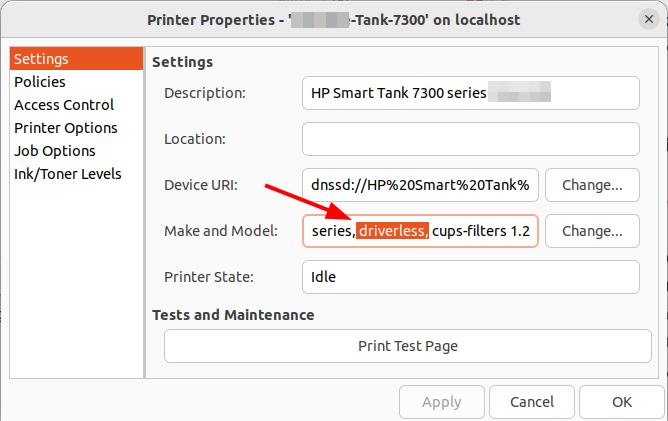

Hm, perhaps a bit of a boring headline, but as my new printer worked out of the box without any configuration required, I was wondering how this could work, as the Ubuntu 22.04 I currently use is older than the printer model.

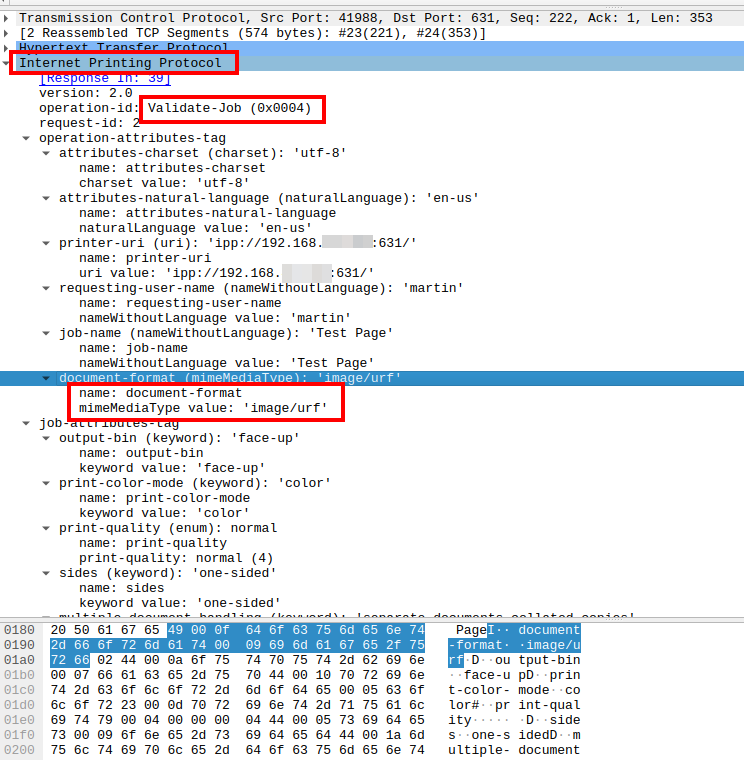

As my printer replacement cycle exceeds a decade, I was not quite up to date on this topic anymore. After digging into it, it seems that there are two main reasons it worked out of the box on Linux: Apple picked up and evolved the CUPS printing specs that Linux had already been using for some time, and all operating systems, including Android and iOS, started using the Internet Printing Protocol (IPP) as a standardized way to talk to printers. The result: no manufacturer drivers required anymore. OK fine, I thought, so how does it work?

Continue reading Network Printing with Linux – IPP, Raster, JPEG and PDF

HP Smart Tank 7307 – Driverless Printing and Scanning with Linux

All right, after having had a look at the printing costs of my new HP Smart Tank 7307 ink printer / scanner combination in the previous post, here’s a follow up on how easy it is to get printing and scanning working with a Linux notebook in 2026. And to make things a bit more difficult: I’m a bit behind the times as I still use Ubuntu 22.04 LTS from back in 2022, so it’s unlikely to have the latest software and drivers. So I worried a bit that getting a printer to work that was most likely released after this date would be a bit of a hassle.

Continue reading HP Smart Tank 7307 – Driverless Printing and Scanning with Linux

Printers with Ink Tanks – Putting the Fun Back into Printing with Ink

It’s 2026 and the HP OfficeJet 6950 I bought in 2018 and used with my Linux devices ever since has reached the end of its life. As I used it only rarely, the ink lines or print heads are so clogged that even a repeated 3-step cleaning process could not make it work again. The reason I printed so little was the high cost of the original replacement ink cartridges, which did not last very long. Using cheaper third-party cartridges was hit or miss over the years and hence not much of a fun experience.

So due to all of this, I preferred to use a black and white laser printer for the faster speed and lower cost per page for most of my printing needs. What I used quite frequently, however, was the scanner unit on top of the ink printer. As the printer was not of much use anymore, I was thus tempted to just buy a new scanner-only unit and only use my black and white laser printer going forward.

While researching scanner and printer prices and features, however, I noticed that Epson, HP and others are now offering a wide variety of color ink printers with large ink tanks that come with ink for 6,000 color and 12,000 black and white pages as part of the original package. This changes the equation for me, as printing with ink thus becomes significantly cheaper and produces much less waste compared to my black and white laser printer.

Continue reading Printers with Ink Tanks – Putting the Fun Back into Printing with Ink

Feedback Request: Which Document Formats Does Your Printer Understand?

I recently bought a new printer and I’m currently looking a bit into how printers are detected and how page data is sent to the printer. Why? Just out of interest and to understand how modern printing works over the local network. I have three HP-manufactured printers at home, but I would also like to understand which document formats printers from other manufacturers understand. So if you are running Linux and have a printer in the local network (not connected via USB), would you mind looking up the document format capabilities of your printer and letting me know? On the shell this is quite easy:

# Detect all printers in the local network

#

$ ippfind -l

ipp://YYYYYYYYYY:631/ipp/print idle accepting-jobs none

# YYYYYYYYYY is the IP address or domain name of the printer

#

$ ipptool -tv ipp://YYYYYYYYYY:631/ipp/print get-printer-attributes.test | grep "document-format\|printer-make-and-model"

The ipptool command then returns the model name and document formats supported. I’d be very grateful if you could post the result in the comments below. Many thanks in advance!

And to give you an idea of what this looks like, here’s the output returned by my new printer:

printer-make-and-model (textWithoutLanguage) = HP Smart Tank 7300 series

document-format-default (mimeMediaType) = application/octet-stream

document-format-supported (1setOf mimeMediaType) = application/vnd.hp-PCL,image/jpeg,image/urf,image/pwg-raster,application/PCLm,application/octet-stream

document-format-version-supported (1setOf textWithoutLanguage) = PCL3GUI,PCL3,PJL,Automatic,JPEG,AppleRaster,PWGRaster,PCLM

document-format-details-default (collection) = {document-format=application/octet-stream}

document-format-varying-attributes (1setOf keyword) = copies,hp-color-working-spaces-supported
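If you want to compare the replies of several printers, it can help to reduce the output to one document format per line. The following is only a minimal sketch: `list_formats` is a hypothetical helper name of my own, not part of CUPS, and it assumes the `document-format-supported` line looks like the one shown above, i.e. a comma separated list after the `=` sign.

```shell
# Hypothetical helper (not part of CUPS): reads ipptool output on stdin
# and prints each supported document format (MIME type) on its own line.
# Assumption: the attribute line has the form
#   document-format-supported (...) = type1,type2,...
list_formats() {
    # 1. keep only the document-format-supported line
    # 2. strip everything up to and including the "=" sign
    # 3. turn the comma separated list into one entry per line
    grep 'document-format-supported ' \
        | sed 's/^[^=]*= *//' \
        | tr ',' '\n'
}

# Possible usage with the command from above (needs a reachable printer):
# ipptool -tv ipp://YYYYYYYYYY:631/ipp/print get-printer-attributes.test | list_formats
```

Piping through `sort` afterwards should make it easier to diff the lists of two printers.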

Wayland and Remapping Characters on the Keyboard

Yes, I know, I am a few years late with this, but it is very likely that for the next desktop iteration of the notebooks in the family, Wayland is going to be the display compositor. The X-Server is on the way out. I don’t really mind as long as I have the features I require. One of those features is a bit special: one member of the household requires special French characters to be mapped on a German keyboard layout to Alt-Gr + one normal key, particularly ‘ç ï ë œ ÿ’. Yes, it’s the keyboard and not the display, but the X-Server and Wayland handle the keyboard, too. Unfortunately, the solution I have for X-Server environments doesn’t work with Wayland anymore. So I had to find a new way.

Continue reading Wayland and Remapping Characters on the Keyboard

XMPP Large File Transfers via STUN/TURN

A bit of a special topic today, but I wasn’t aware of this before: For small files and videos, my XMPP server uses HTTPS and local file storage on the server side to store and forward files. The file size limit is configurable and is 20 MB by default. This suffices for 99% of my use cases. However, what about larger files, e.g. videos?

Continue reading XMPP Large File Transfers via STUN/TURN

DNS SRV Records to the Rescue

Like everyone else, I do have technical debt or stumble over things I didn’t see coming when changing my infrastructure. Recently, it hit me when I wanted to change the physical location of one of the Prosody XMPP servers I use for personal communication. Moving the XMPP server VM to a bare metal host at another physical site was done in a few minutes and at first I thought my plan had worked nicely. The warm feeling didn’t last very long, however.

Continue reading DNS SRV Records to the Rescue

Separating Workspaces in Ubuntu

For years, I’ve been using several workspaces, i.e. virtual desktops, to distribute different kinds of work to different virtual desktops and then switch quickly between them with the mouse or keyboard shortcuts. While this was working, I always felt a bit hampered by the dock on the left side always showing dots for all open windows next to their icons, even if those windows were on different desktops. It thus happened quite a lot that I inadvertently changed between desktops because I thought a window was open on one workspace when it was actually open on another. Recently, I got a bit fed up and wondered if there wasn’t a hack to only get dots in the dock next to the app icons for windows open in the current workspace. It turns out that no hack is required: one can switch to ‘Include applications from the current workspace only’ in the ‘Multitasking’ section of the system settings. Works great in Ubuntu 22.04 and also 24.04! I can’t believe I didn’t find this earlier. For me, this is a huge productivity gain and it just requires clicking on a single radio button!

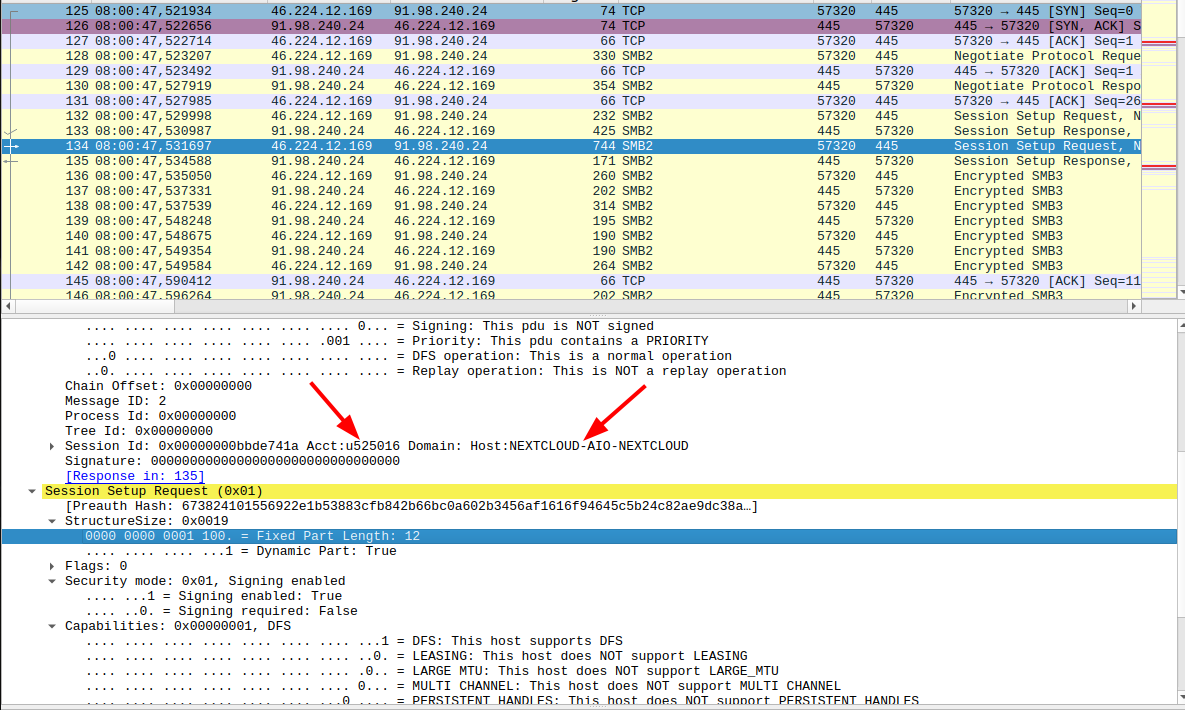

Nextcloud and a Quick SMB Trace

And a quick follow up to a previous post on using Nextcloud ‘external’ storage on another host over an SMB network mount: SMB / CIFS seems to have been a bit ‘insecure’ in the past, so using it over the Internet was not recommended. However, recent versions of SMB, particularly version 3, seem to have the necessary authentication and encryption features to make this feasible. I was curious, of course, so I decided to run a tcpdump on my Nextcloud machine to see what the traffic to my SMB server looks like.

Continue reading Nextcloud and a Quick SMB Trace