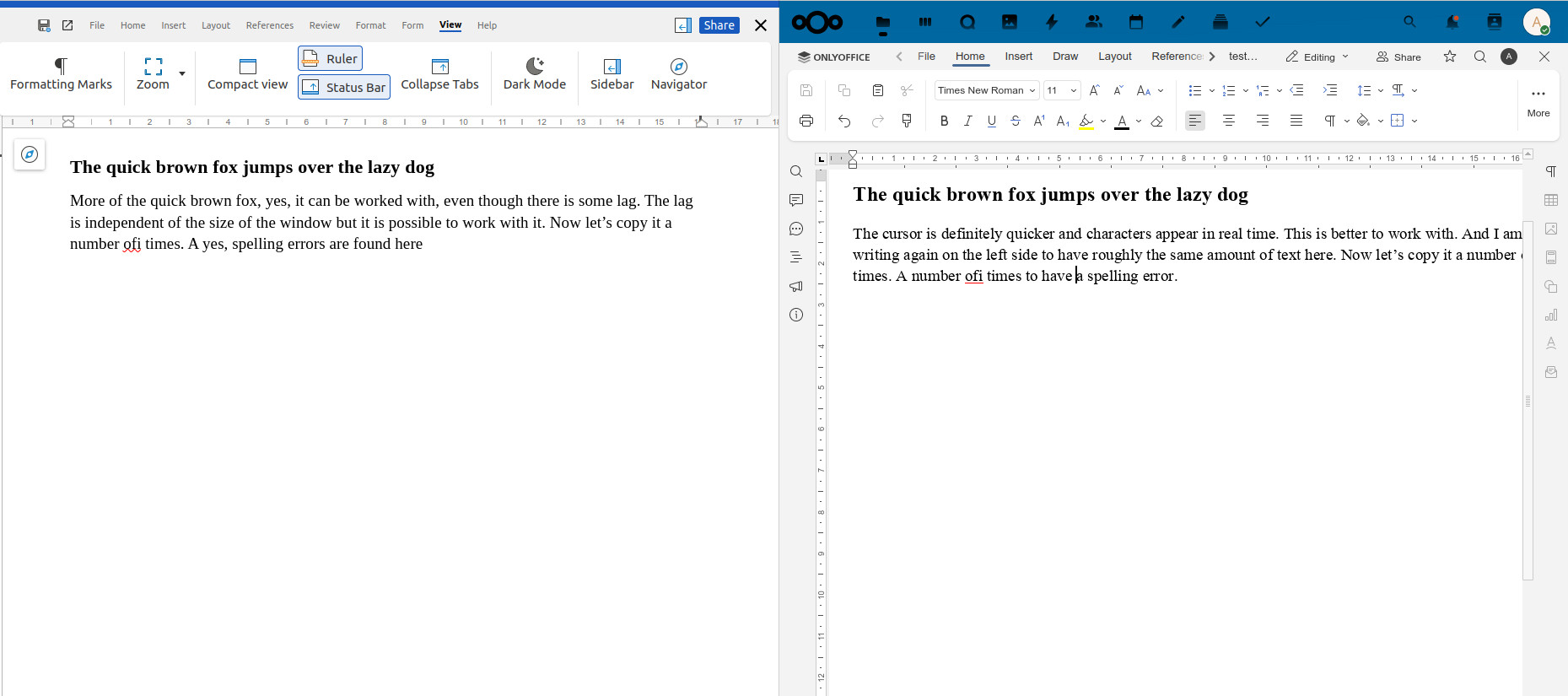

Two years ago, I switched from editing the documents stored on my Nextcloud with Collabora (LibreOffice) Online to OnlyOffice Online. The main reason: the self-hosted Collabora/LibreOffice Online server renders changes to a document on the server side and then sends the result to the browser. The unfortunate consequence: there was a noticeable delay between typing something and the characters appearing on the screen whenever I was not close to the server.

I also had problems with characters sometimes appearing in a different order than I had typed them. And finally, I occasionally got drawing errors where parts of a line were missing. All of this together made me set up my own OnlyOffice document server and connect it to Nextcloud. I’ve been using this setup for two years now and it suffers from none of these issues, because the document being edited is loaded into the browser and rendered locally. Changes made locally are then sent to the server and from there to anyone else editing the document collaboratively. From my point of view, this is a much better architecture. But two years have passed, so perhaps Collabora Online has improved!? I decided to take a look.

Continue reading Revisiting Collabora Online with Nextcloud – 2 Years Later