I’m quite amazed that I am already at part 8 of this series on exploring the limits of using a headless workstation as a ‘power booster’ next to my notebook. The next thing I have taken a closer look at is the connection between the notebook and the workstation, a 1 Gbit/s Ethernet link. That’s good enough for just about anything I want to do, except for transferring large files. And mind you, when I say large, I don’t mean a few hundred MB or even a gigabyte; that works just fine over such a link. But when transferring, say, 100 GB, it does become a bottleneck. So something faster is necessary. How hard can it be, I thought, and was quite surprised by the answer.

10GbE SFP+ is Nice, But Not With A Notebook

I quickly discounted 10GbE (10 Gigabit Ethernet) with SFP+ fiber modules, as the USB-3 ports of my Lenovo X250 notebook would not support such a speed. And apart from that, there are no USB-3 to 10GbE over fiber adapters available anyway. So that has to wait for another day. But there is life between 1 and 10 GbE. There’s 2.5GbE over CAT6 copper cables, for example, and USB adapters for notebooks and PCIe cards for the workstation are readily available. At around 35 euros each, they are also reasonably priced. 2.5GbE’s theoretical top speed is around 312 MB/s compared to the 125 MB/s of standard 1GbE. That’s more than any hard drive I have can deliver but less than what my SSDs can do. Still, it’s a worthwhile upgrade and it should be pretty painless. Or so I thought.
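To make the arithmetic behind those numbers explicit, here is a small sketch. It uses decimal units and ignores all Ethernet and TCP/IP overhead, so the results are upper bounds rather than anything I measured. The function names are my own for illustration.

```python
def line_rate_mb_per_s(gbit_per_s: float) -> float:
    """Convert a nominal link rate in Gbit/s to MB/s (decimal units, no overhead)."""
    return gbit_per_s * 1000 / 8


def transfer_seconds(size_gb: float, gbit_per_s: float) -> float:
    """Best-case time in seconds to move size_gb gigabytes at the nominal rate."""
    return size_gb * 1000 / line_rate_mb_per_s(gbit_per_s)


# Compare 1GbE, 2.5GbE and 10GbE for the 100 GB case mentioned above.
for rate in (1.0, 2.5, 10.0):
    mb_s = line_rate_mb_per_s(rate)
    minutes = transfer_seconds(100, rate) / 60
    print(f"{rate:>4} Gbit/s -> {mb_s:6.1f} MB/s, 100 GB in about {minutes:4.1f} min")
```

In other words, a 100 GB transfer that takes a bit over 13 minutes at 1GbE line rate would ideally shrink to a bit over 5 minutes at 2.5GbE.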

Let’s Go For 2.5GbE

So I ordered a 2.5GbE USB adapter and a PCIe card and got started. On the workstation side, things were painless: the card was recognized by Ubuntu 20.04 straight away and announced its 2.5GbE capabilities in the system log. While the PCIe card uses a Realtek 8125 chip, my USB adapter contains a Realtek 8156 chip, which uses a different Linux kernel driver. This one is also included in Ubuntu 20.04 but spams the syslog with tens of messages a second about the connectivity status. It seems to be a known bug that can be worked around either by disabling kernel logging to syslog or, supposedly, by compiling the latest version of the driver and inserting it into the system. I stuck to the first option for the moment because re-compiling the kernel driver whenever the kernel is updated on my notebook is not quite what I had in mind.

The next issue I encountered was that at first the link rate was only 1GbE. After a bit of experimenting I found out that it’s not the driver and not the cable, it’s just that sometimes that’s the speed that is negotiated on the wire. Unplugging and plugging the cable in again fixes that. It does not happen very often but still often enough to feel uncomfortable.
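One way to spot this without digging through logs is to read the negotiated speed from sysfs, where Linux exposes it in Mbit/s under `/sys/class/net/<interface>/speed`. The following is a minimal sketch; the helper names and the interface name in the comment are hypothetical and will differ on your system.

```python
from pathlib import Path
from typing import Optional


def negotiated_speed_mbit(interface: str, sysfs_root: str = "/sys/class/net") -> Optional[int]:
    """Return the negotiated link speed in Mbit/s, or None if it is unknown."""
    speed_file = Path(sysfs_root) / interface / "speed"
    try:
        value = int(speed_file.read_text().strip())
    except (OSError, ValueError):
        # Missing interface, link down, or unreadable value.
        return None
    # The kernel reports -1 when the speed is unknown.
    return value if value > 0 else None


def is_full_speed(speed_mbit: Optional[int], expected_mbit: int = 2500) -> bool:
    """True if the link came up at (or above) the expected 2.5GbE rate."""
    return speed_mbit is not None and speed_mbit >= expected_mbit


# Example (interface name is an assumption, check `ip link` for yours):
# speed = negotiated_speed_mbit("enx001122334455")
# print("OK" if is_full_speed(speed) else f"Link only negotiated {speed} Mbit/s, replug the cable")
```

Running something like this after plugging in the cable would show right away whether the link fell back to 1000 Mbit/s.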

iPerf3 Fun

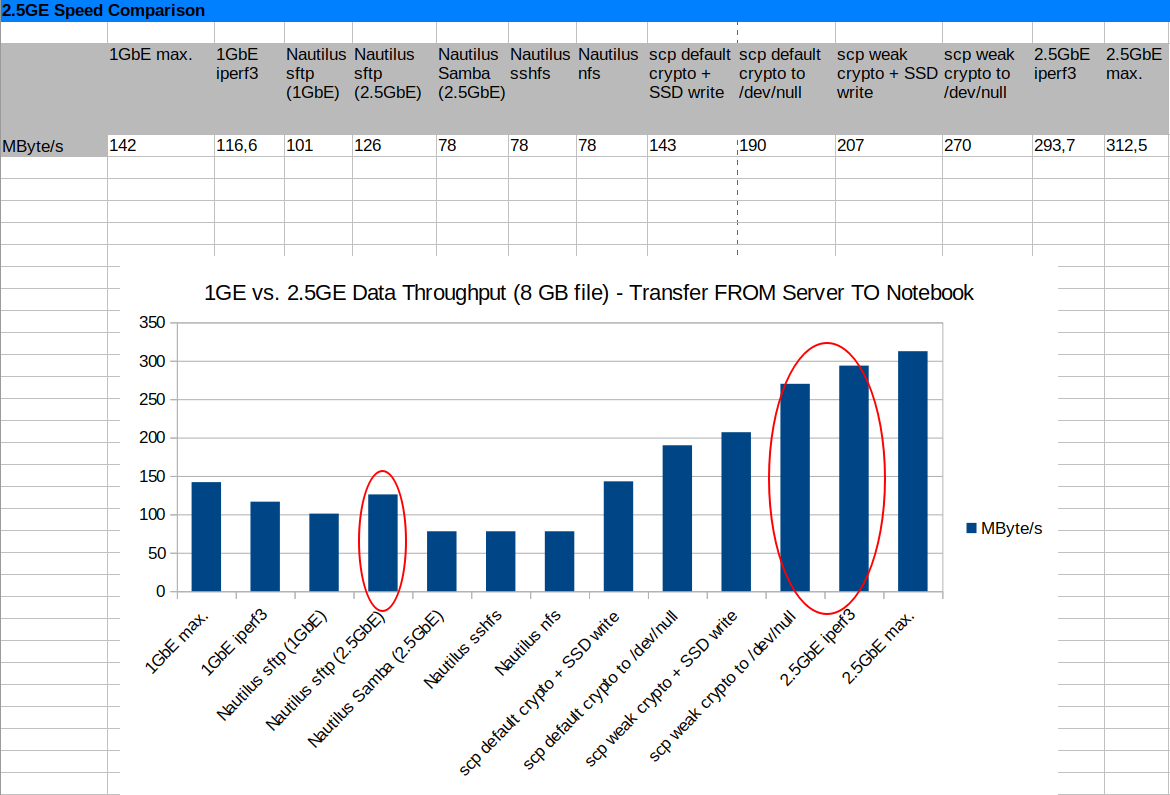

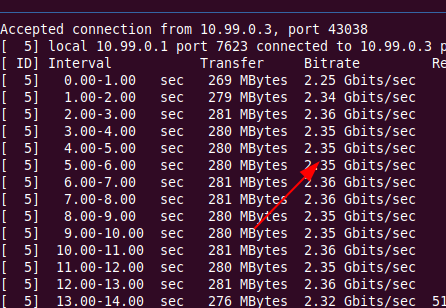

Next, I ran iperf3 over the link and was delighted to get single TCP stream transfer rates of 2.36 Gbit/s. Now we are talking! So, on to a real-world scenario: using Ubuntu’s Nautilus file manager to transfer some files to and from an sftp share on the workstation. I did expect that the data rate would be somewhat lower, as sftp uses encryption, which is a heavy burden on the CPU. But I admit I was rather disappointed when my Nautilus/sftp data transfer speed only rose from 101 MB/s over the 1GbE link to 126 MB/s over the 2.5GbE link. Only a 25% increase on a link that is 2.5 times as fast? No, that can’t be right, I thought, that wouldn’t be worth the effort. So I started to experiment.
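To put the disappointment into numbers, here is a quick sketch that compares the observed Nautilus/sftp gain with the gain in nominal link rate. The function name is my own; the figures are the ones quoted above.

```python
def percent_increase(old: float, new: float) -> float:
    """Relative increase from old to new, in percent."""
    return (new - old) / old * 100


# Nautilus/sftp throughput in MB/s: 1GbE link vs. 2.5GbE link.
observed = percent_increase(101, 126)

# Nominal link rate in Gbit/s: 1GbE vs. 2.5GbE.
nominal = percent_increase(1.0, 2.5)

print(f"Observed gain: {observed:.1f}%")
print(f"Nominal gain:  {nominal:.1f}%")
```

A link that is 2.5 times as fast corresponds to a 150% increase in nominal rate, so the roughly 25% I saw is only about a sixth of what the wire could offer.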

Options, Options, Options

Maybe the Nautilus/sftp combination is not ideal, so I tried a Samba/smb share with Nautilus. The result was even worse: 78 MB/s. I could do that over a 1GbE line. Next, I used sshfs to mount the workstation drive on my notebook and then combined this with Nautilus. Again, only 78 MB/s. So maybe encryption is taking a far heavier toll than I anticipated? To find out, I gave NFS a try, which doesn’t use any encryption. From a security point of view that would work for me, as I would only use it on a dedicated link between two machines. But again I could only reach around 78 MB/s. I started to become rather disappointed.

Then, somebody on the Internet suggested I should give the scp command line tool a try. It’s deprecated but supposed to be quite optimized for speed, especially with a weaker crypto setting. So I gave scp a try with the default settings and could immediately reach 143 MB/s. That’s much better than Samba, sshfs and NFS over the 2.5GbE link. I also noticed that writing to the SSD slowed down the overall process during a 10 GB file transfer. Sending all incoming data to /dev/null increased the data rate to 190 MB/s.
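For anyone who wants to repeat this kind of test, the following sketch builds the scp command and times it. The host name, user and file paths are placeholders, not my actual setup, and the helper functions are my own. The optional `cipher` argument maps to scp’s `-c` option; the cipher has to be supported on both ends (`ssh -Q cipher` lists what the local client offers).

```python
import subprocess
import time
from typing import List, Optional


def build_scp_command(source: str, destination: str, cipher: Optional[str] = None) -> List[str]:
    """Assemble the scp argument list, optionally selecting a cipher via -c."""
    cmd = ["scp"]
    if cipher:
        cmd += ["-c", cipher]
    cmd += [source, destination]
    return cmd


def timed_copy_mb_per_s(source: str, destination: str, size_bytes: int,
                        cipher: Optional[str] = None) -> float:
    """Run scp and return the average throughput in MB/s (decimal units)."""
    start = time.monotonic()
    subprocess.run(build_scp_command(source, destination, cipher), check=True)
    elapsed = time.monotonic() - start
    return size_bytes / elapsed / 1e6


# Example with placeholder names: copy a 10 GB test file and discard it on the
# remote side, so that the remote disk does not influence the result.
# rate = timed_copy_mb_per_s("test-10g.bin", "user@workstation:/dev/null", 10 * 10**9)
# print(f"{rate:.0f} MB/s")
```

Note that the measured time includes the SSH connection setup, which hardly matters for a multi-gigabyte file but would distort results for small ones.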

Slowly Crawling up the Throughput Curve

190 MB/s with scp to /dev/null or a RAM disk is already something, but still nowhere near the 2.5GbE line rate and the 2.36 Gbit/s I measured with iperf3. So I gave the weak crypto option (-c aes128-ctr) a try. With that I could reach 207 MB/s when writing to an SSD and 270 MB/s when writing to /dev/null or a RAM disk. That’s very close to the 293 MB/s I could reach when using a single TCP stream with iperf3. That’s a lot of numbers, so here’s a chart that summarizes the result:

Writing To Disk

Note that even when using scp with a weak cipher, writing to disk slows down the overall operation by more than 60 MB/s (207 MB/s instead of 270 MB/s). And that’s already with a SATA SSD involved, to which the data is written at 500 MB/s. While data is being written to the SSD at this speed, the data transfer over Ethernet comes to a halt. Ideally, that shouldn’t be the case in a multi-core system. However, that’s how my system behaves, even when I transfer data in the opposite direction and the workstation has to store the data on the SSD at some point.

Summary

So I am really a bit puzzled now. Scp with weak crypto clearly shows that the hardware of my notebook and workstation supports much higher data transfer rates over 2.5GbE than over a standard 1GbE link. However, all other methods I tried fall well short of even doubling the data transfer rate, as I had hoped. In practice, that means that I will have to use the command line and scp for huge bulk data transfers. Yes, I can certainly do that, but it leaves much to be desired and will significantly limit use in practice. If somebody has an idea how to get from the meager data rates of Nautilus+sftp in the red circle on the left to the data rates achieved with scp and iperf3 in the circle on the right, I’m all ears!
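For reference, the 2.5GbE measurements quoted in this post can also be put side by side in text form. The sketch below simply relates each rate to the 293 MB/s single-stream iperf3 result; the labels and the helper function are my own shorthand.

```python
# Rates in MB/s over the 2.5GbE link, as quoted in this post.
IPERF3_MB_S = 293

MEASUREMENTS = [
    ("Samba, sshfs, NFS (each)", 78),
    ("Nautilus + sftp", 126),
    ("scp, default cipher, to SSD", 143),
    ("scp, default cipher, to /dev/null", 190),
    ("scp, aes128-ctr, to SSD", 207),
    ("scp, aes128-ctr, to /dev/null", 270),
]


def share_of_iperf3(rate_mb_s: float) -> int:
    """Throughput as a rounded percentage of the iperf3 single-stream rate."""
    return round(rate_mb_s / IPERF3_MB_S * 100)


for label, rate in MEASUREMENTS:
    print(f"{label:<36} {rate:>4} MB/s  {share_of_iperf3(rate):>3}% of iperf3")
```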