While the press and pretty much everybody else focus on ChatGPT these days, there’s another project from the same company that was recently open sourced and promises to create transcripts of video and audio files. It’s called Whisper AI. As I get audio files every now and then for which a transcript would be helpful, I installed a local instance and gave it a try.

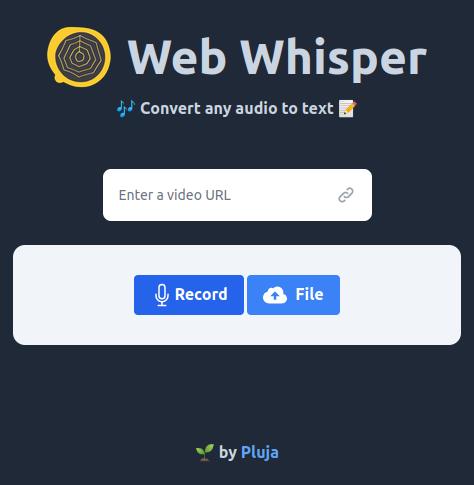

After reading the installation and usage descriptions on the project’s GitHub page, I thought that I’d probably be able to pull this off, but I immediately wondered if there’s a dockerized version of it that could just be pulled and run. And indeed, there are a number of them. After looking around a bit, I decided to go for Pluja’s web-whisper, as it not only offers a docker-compose script for easy installation but also includes a nice, easy-to-use web frontend.

Thanks to my prior experience with Docker and docker-compose, getting a private instance up and running wasn’t much of a problem. By default, the ‘small’ language model is installed, which takes up little storage. I’m not sure about the exact size of the Docker images that are pulled, but by today’s standards it’s not much, and the download took just a couple of seconds. The containers are also pretty light on RAM in a virtual machine: while running a transcription on a 30-minute audio file, memory usage never went above a couple of hundred megabytes. I was expecting much more.

The original documentation mentions that transcription speed can be significantly increased with a GPU. As my instance runs in a VM on a server in a data center that doesn’t have a GPU, I couldn’t try this. Still, on that Ryzen 5 3600-based server, the file was transcribed at a speed of 1:3, i.e. the 30-minute audio file took around 10 minutes to transcribe with 4 cores assigned to the VM. Good enough for me.
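To make the 1:3 figure concrete, here is a small sketch that turns the measured run into a speedup factor and uses it to estimate how long another file might take. It assumes that processing time scales roughly linearly with audio length on the same hardware, which is only an approximation; the 90-minute file is a hypothetical example, and the function names are made up for illustration.

```python
def realtime_speedup(audio_minutes: float, processing_minutes: float) -> float:
    """Minutes of audio transcribed per minute of processing."""
    if processing_minutes <= 0:
        raise ValueError("processing time must be positive")
    return audio_minutes / processing_minutes


def estimated_processing_minutes(audio_minutes: float, speedup: float) -> float:
    """Rough estimate for a new file, assuming the same speedup holds (linear scaling)."""
    return audio_minutes / speedup


# Measured run: a 30-minute file took about 10 minutes on 4 CPU cores.
speedup = realtime_speedup(30, 10)
print(f"speedup: {speedup:.1f}x real time")  # speedup: 3.0x real time

# Hypothetical 90-minute recording on the same VM.
print(f"90-minute file: ~{estimated_processing_minutes(90, speedup):.0f} min")  # ~30 min
```

On a machine with a GPU, or with a different model size, the factor would have to be measured again.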

So what did the result look like? When I read through the transcript, I have to admit that I was amazed, as it was almost perfect. The only thing missing was context: two people were having a conversation in the audio file, but the text file didn’t show who said what. Still, it’s a very interesting tool for generating transcripts locally, i.e. ideal for keeping private things private!