I recently upgraded my notebook to 16 GB of RAM so I could run more virtual machines in parallel and to prepare for the move from a 32-bit to a 64-bit OS. Unfortunately, after a week or so I discovered that my disk write speed was deteriorating significantly over time. The more I looked, the worse it seemed to get. What followed was a seven-day-and-night chase and 15+ terabytes of data transfers until I finally found the reason and a workaround.

Problem 1: Slow Backups

The first problem I detected was that my differential backups had suddenly become slow. Instead of an average throughput of 60 MB/s they were down to 20 MB/s. Rebooting the machine helped but I could watch the speed deteriorating from 60 to 20 MB/s even during the backup, typically after having copied the first 40 to 50 GB. Very strange. At first I thought it had something to do with my internal SSD which has given me trouble before but I soon found out that I could reproduce the same behavior by copying files between two external hard drives as well. By chance I noticed that write speeds suddenly improved when I started copying files on other drives simultaneously. An interesting behavior but it didn’t really help because write speeds didn’t increase every time I tried and the effect was only temporary. A few minutes and gigabytes later and write performance was slow again.

Problem 2: Deteriorating Write Performance to my Flash USB Stick

Next, I noticed that performance was equally bad when writing to a Flash USB stick. When I transferred 7 GB of data to the stick, the first 3 to 4 GB were written at 80 MB/s. But then performance suddenly dropped to 40 MB/s. I had never transferred that much data to the stick before, so perhaps this is normal? No, it isn’t: after a reboot of the machine the full 7 GB were written at 80 MB/s. One day later the problem was back.

So perhaps it’s a BIOS issue, I thought, and flashed the latest BIOS version. I’m glad I have the latest BIOS on my notebook now, but unfortunately it didn’t change anything.

Problem 3: Very Slow Write Performance after Suspend/Resume

So perhaps this is caused by system suspend and resume? A nice theory, but it had nothing to do with that either: I could reproduce the effect even after rebooting the machine. Unfortunately, I found something even worse along the way. Sometimes after suspend and resume, but not always, writing to the system partition was very slow, 1 MB/s or less. I first noticed this when programs behaved sluggishly after suspend/resume. Examples are Firefox and Gedit, which write quite a lot of data to a temporary location on my system partition on startup and exit. The strange thing was that after a minute or two the effect was suddenly gone and write speeds were back to normal. Even stranger, this only affected the system partition. The data partition on the same SSD could always be accessed at full speed at the same time. To me that was the first clue that it was not a hardware problem!

Problem 4: 16 GB of RAM Makes It Worse

And finally, I found out that the effect also depends on the amount of RAM in my notebook. With 16 GB of RAM I could reproduce the issues after only a few minutes of copying, at around 50 GB of data. With 8 GB of RAM, it could take hours and 700 GB of transferred data until the effect appeared. I removed the RAM and put it back into the notebook several times to confirm the dependency.

The Reason Behind Most Of It – A Kernel Bug

At this point I wasn’t sure what I was dealing with. Was there just one issue causing all this, or were there several issues that needed to be treated separately? While copying all those terabytes of data I started looking for reports of similar issues and after a while found two that described all three of my symptoms (see here and here): slow write speeds after a while, slow write speeds after suspend/resume that suddenly improve, and a strong dependency of the effect on the amount of memory in the system. The proposed fix was to clear the file cache in memory with the following commands:

sudo sync; echo 3 | sudo tee /proc/sys/vm/drop_caches

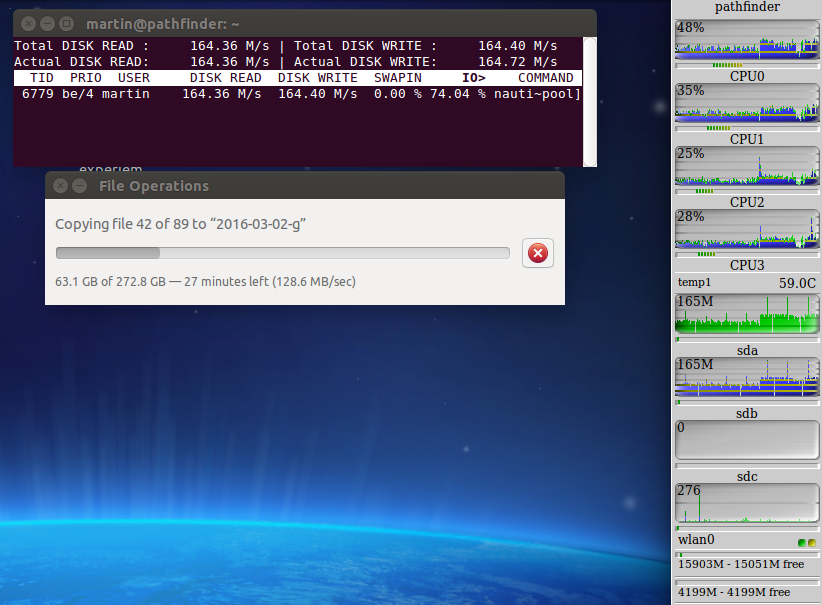

Could it be that easy? When I gave it a try while copying 270 GB of data, I had a hard time getting that grin off my face once I saw the result: after write throughput had deteriorated significantly over time from 170 MB/s down to 100 MB/s, the command above immediately restored performance. All was OK for several gigabytes of copied data afterwards before things started to slow down again. Issuing the command again brought back full performance immediately. The big screenshot above shows what the restoration effect looks like in practice. Have a look at the throughput in the ‘sda’ and ‘sdb’ graphs and how it suddenly jumps up in the middle of the graph. This is when I issued the command above.

So it seems that for my hardware configuration the Linux kernel has a problem with its file cache in RAM that gets worse the more memory is installed in the system. Unfortunately, there seems to be no real fix for this so the command has to be issued repeatedly during long file transfer operations. I thus wrote a script that triggers the command in an endless loop every 30 seconds. A crude hack but it fixes the problem.
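The loop script described above could look roughly like the following. This is a minimal sketch, not the exact script I use: the function names, the `DROP_CACHES_FILE` and `INTERVAL` variables and the `--run` switch are made up for illustration, and it assumes the script is started as root so the redirect into /proc works without `tee`.

```shell
#!/bin/sh
# Sketch of the periodic workaround; all names and defaults are illustrative.

# Kernel interface used by the command above; overridable for a dry run.
DROP_CACHES_FILE="${DROP_CACHES_FILE:-/proc/sys/vm/drop_caches}"
# Seconds to wait between two runs (30 as mentioned in the text).
INTERVAL="${INTERVAL:-30}"

drop_caches() {
    # Write dirty pages to disk first, then ask the kernel to drop its caches.
    sync
    echo 3 > "$DROP_CACHES_FILE"
}

run_loop() {
    # $1: number of iterations; 0 means loop until interrupted.
    max="${1:-0}"
    count=0
    while [ "$max" -eq 0 ] || [ "$count" -lt "$max" ]; do
        drop_caches
        count=$((count + 1))
        sleep "$INTERVAL"
    done
}

# Only start the endless loop when explicitly asked to.
if [ "${1:-}" = "--run" ]; then
    run_loop 0
fi
```

Saved under a hypothetical name such as drop-caches-loop.sh, it would be started with `sudo ./drop-caches-loop.sh --run` before a long copy job and stopped with Ctrl-C afterwards.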

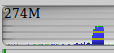

The command above also fixes the very slow write performance issue after suspend/resume, as shown in the second screenshot, at least most of the time. The graph shows what write performance looked like after resume in about the middle of the graph (< 1 MB/s) and how it suddenly improved when I issued the command above (274 MB/s). As a consequence, the second crude fix was to put the command into a script file in /etc/pm/power.d so it gets executed every time the machine resumes from suspend. Not pretty, but it gets the job done. Occasionally it doesn’t help and write speed to all partitions remains low for a while after resume despite the command, so there’s yet one more thing to discover.
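A hook file for the resume case might look something like this. Again, this is only a sketch under assumptions: the function name and the `DROP_CACHES_FILE` variable are illustrative, the hook is assumed to be run as root, and it ignores whatever arguments it is called with.

```shell
#!/bin/sh
# Hypothetical hook script for /etc/pm/power.d (the file name is up to you).
# Assumption: the hook is executed as root, so no sudo is needed here.

# Kernel interface used by the command above; overridable for a dry run.
DROP_CACHES_FILE="${DROP_CACHES_FILE:-/proc/sys/vm/drop_caches}"

drop_caches() {
    # Write dirty pages to disk first, then ask the kernel to drop its caches.
    sync
    echo 3 > "$DROP_CACHES_FILE"
}

# Skip quietly when the file is not writable (for example when not root).
if [ -w "$DROP_CACHES_FILE" ]; then
    drop_caches
fi
```

The file has to be marked executable (`chmod +x`), otherwise it will most likely not be picked up.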

As I’m using a two-year-old kernel that came with Ubuntu 14.04 (3.13.0.x), the next step would be to find out whether this has been fixed in the meantime. I’m a bit skeptical, as I couldn’t find any indication on the net that someone has fixed it. Or perhaps, as was suggested in the reports I have linked above, this issue only appears with a 32-bit kernel and things are fine after upgrading to 64-bit!? I’m tempted to investigate, but after spending so much time and having a crude but working fix for the moment, I’ll hold off until Ubuntu 16.04 LTS is released, which is when I want to upgrade anyway.

Until then…