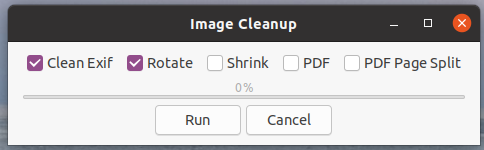

Back in summer I spent some time working on a Nautilus file manager plugin that automates rotating and shrinking images, removing their EXIF information and combining the results into a single PDF file. I have been using it quite a lot ever since. When processing a large number of files, say 1000 images at a time, a run can easily take 15-20 minutes. At the same time, I noticed that my 4-thread CPU was not fully utilized, as all processing is done sequentially. So I had a look at how to run several things simultaneously to speed things up.

The challenge with parallel multitasking in Python is that the runtime was not designed for it. To keep memory management simple, the Global Interpreter Lock (GIL) ensures that even if several threads are started in a Python program, only one of them executes Python code at any given time. In other words, that mechanism was rather useless for me. Fortunately, Python's ‘multiprocessing’ library offers a solution, as it spawns independent processes that can then perform work individually. This comes at the price of the workers not being able to access the memory of the main process, among other restrictions. For my application, which processes image files, this is not an issue, as each operation is independent of all the others. The library also supports inter-process communication and a common shared memory area, but I made no use of that functionality. Other parts of the ‘multiprocessing’ library came in very handy, though: the API lets you create a worker pool and specify the number of concurrent processes. That’s done with a single command:

    self.p = multiprocessing.Pool(multiprocessing.cpu_count())

The pool can then be given work by calling another API function, which takes a reference to a function and the parameters to pass to it. If you have 500 things to be done, you call apply_async() 500 times in a row and then let the library do its thing:

    for file in file_list:
        self.p.apply_async(worker_function,
                           args=(self.dir_name, file),
                           callback=callback_worker_finished)

Once done you can block the current thread until all workers are finished:

    # All work is in the pipeline, now wait for completion
    # and then remove the worker pool resources, threads and processes again
    self.p.close()
    self.p.join()
    self.p = None
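
Put together, the three steps above can be tried out with a small stand-alone script. This is only a minimal sketch and not the code of my plug-in: `worker_function` here is a hypothetical stand-in that just builds a path instead of touching an image, and `run_parallel_tasks` is a name made up for this example.

```python
import multiprocessing
import os


def worker_function(dir_name, file_name):
    """Hypothetical stand-in for the real image processing."""
    # Runs in a worker process. It only uses its own arguments and
    # returns a small, picklable result to the main process.
    return os.path.join(dir_name, file_name.upper())


def run_parallel_tasks(dir_name, file_list):
    """Process all files in a worker pool and return the sorted results."""
    results = []

    def callback_worker_finished(result):
        # Called in the main process (on a helper thread of the pool)
        # each time a worker returns successfully.
        results.append(result)

    pool = multiprocessing.Pool(multiprocessing.cpu_count())
    for file_name in file_list:
        pool.apply_async(worker_function,
                         args=(dir_name, file_name),
                         callback=callback_worker_finished)
    pool.close()  # no more work will be submitted
    pool.join()   # block until every queued task has finished
    return sorted(results)


if __name__ == "__main__":
    # The guard matters on platforms that start workers by re-importing
    # the main module instead of forking.
    print(run_parallel_tasks("/tmp/photos", ["a.jpg", "b.jpg", "c.jpg"]))
```

One thing to keep in mind: if a worker raises an exception, apply_async() does not report it on its own. You only see it if you pass an `error_callback` or call `get()` on the returned result object.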

By using a callback function, the main process can be informed whenever a worker has finished. And by using traditional threading, you can wait for events outside the worker pool at the same time, such as the user clicking on a ‘cancel’ button in a dialog box. In that case, a call to terminate() stops the pool and discards all outstanding work that is still in the queue. Perfect!
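
Here is a rough sketch of how such a cancel option could look. It assumes the ‘cancel’ button simply sets a `threading.Event` from another thread. The names `slow_task` and `run_cancellable` are made up for this example, and my plug-in may well handle the details differently.

```python
import multiprocessing
import threading
import time


def slow_task(seconds):
    """Hypothetical stand-in for one long-running image operation."""
    time.sleep(seconds)
    return seconds


def run_cancellable(task_count, seconds_per_task, cancel_event, processes=2):
    """Run tasks in a pool and stop early if cancel_event gets set.

    Returns the number of tasks that completed.
    """
    finished = []
    pool = multiprocessing.Pool(processes)
    pending = [pool.apply_async(slow_task, (seconds_per_task,),
                                callback=finished.append)
               for _ in range(task_count)]
    pool.close()  # no more work will be submitted
    # Poll until every task is done or a cancel request arrives.
    while not all(result.ready() for result in pending):
        if cancel_event.wait(timeout=0.05):
            pool.terminate()  # stop the workers, drop queued tasks
            break
    pool.join()
    return len(finished)


if __name__ == "__main__":
    cancel = threading.Event()
    # Simulate a user clicking 'cancel' after 0.3 seconds.
    threading.Timer(0.3, cancel.set).start()
    done = run_cancellable(100, 0.1, cancel)
    print(f"{done} of 100 tasks finished before the cancel request")
```

Polling with a short timeout keeps the waiting thread responsive to both conditions: the work completing normally and the cancel request coming in.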

If you want to take a closer look, search for ‘_runParallelTasks’ in the source of my plug-in on Codeberg.

The resulting speed-up is quite rewarding: running all operations on 1000 images required around 20 minutes in the single-threaded version. With the multiprocessing version, the same operations finished in around 13 minutes, which makes it roughly 1.5 times faster!