In part 4 of this series, I compared the TCP behavior of high speed LTE and Wi-Fi to a virtual machine in a data center over links with round trip delay times of around 25 ms. The results were very insightful but also raised new questions that I will follow up on in this post. So here’s a quick recap of what happened so far to put things into context:

For fast Wi-Fi, I went to the local university in Cologne that has an excellent Eduroam Wi-Fi deployed in many areas. There, I was able to get an iperf uplink throughput of around 500 Mbps to a virtual machine in Nürnberg. When analyzing the TCP behavior in more detail, I noticed that my notebook quite frequently hit the default maximum TCP receive window size of 3 MB of the VM in the remote data center. In other words: More would perhaps have been possible with a bigger TCP receive buffer. So I gave this another try to see what would happen.

In a local area network environment with a round trip delay time (RTD) of just 2 to 3 milliseconds, a 3 MB TCP receive window is more than enough. When doing the math with the bandwidth delay product, the maximum RTD that such a receive window can support at around 500 Mbps is about 50 milliseconds. My server had an RTD of around 25 ms while data was transferred, but one could see in the trace that this quite often increased to 50 ms, which frequently led to transmission stalls (see the previous post). So I increased the TCP receive window size on the virtual machine in the Nürnberg data center to 12 MB, which results in an advertised TCP rwin of 6 MB during a transmission. I have no idea why only half that value is advertised, but it’s consistent behavior. Here’s the command to change the rwin in Linux:

# Set to 12 MB rwin, which gives 6 MB (for whatever reason) on the line:

echo "4096 131072 12291456" > /proc/sys/net/ipv4/tcp_rmem

# Set to original value again

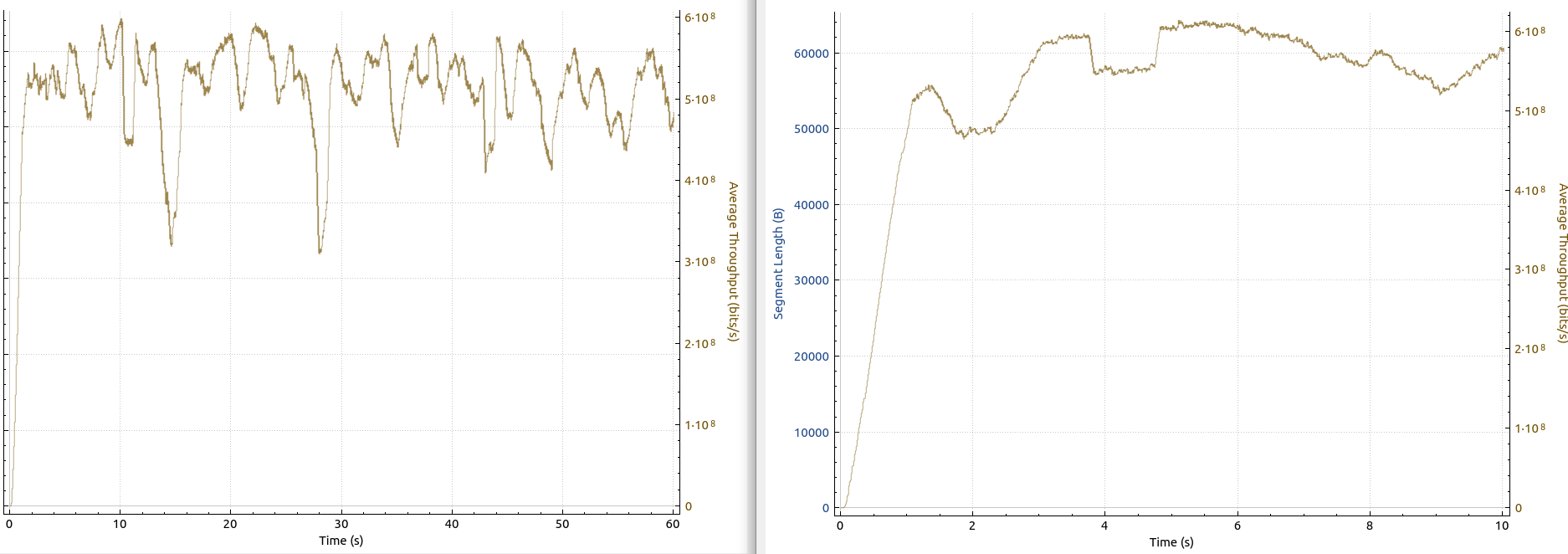

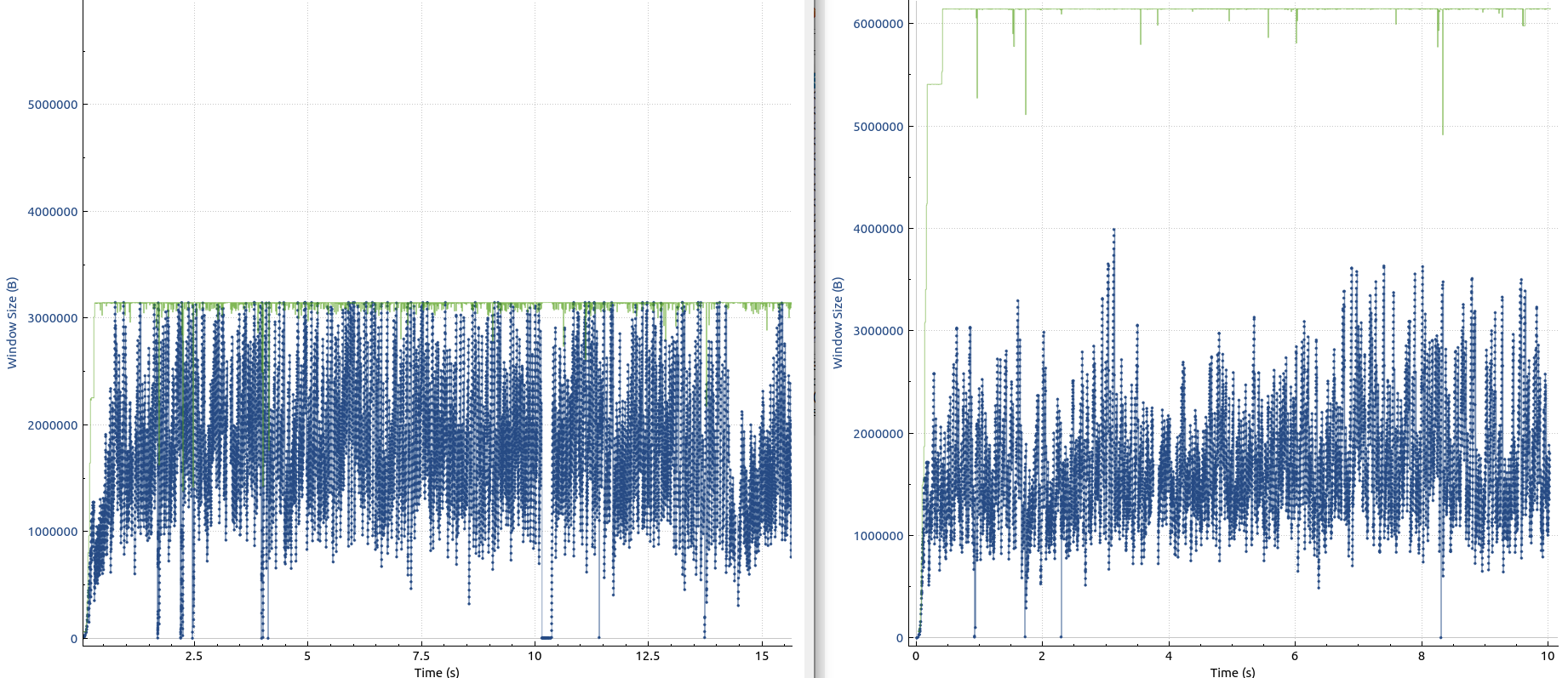

echo "4096 131072 6291456" > /proc/sys/net/ipv4/tcp_rmemI then ran some tests with the 3 MB and 6 MB receive window size and while I could see some improvement, it was only around 50 Mbps. Not quite what I was hoping for. The first image above shows the throughput comparison with a 3 MB receive window on the left and 6 MB receive window on the right. The image below shows how the bytes in flight quite often touch the 3 MB receive window while on the right, the bytes in flight comfortably remain well below the green receive window size.
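The bandwidth delay product arithmetic behind these window sizes can be sketched in a few lines of Python. This is only a rough illustration: the function names are made up for this example, and the factor of two between the `tcp_rmem` maximum and the advertised window is simply the behavior observed in this post, not something taken from kernel documentation.

```python
# Maximum values written to tcp_rmem in this post (bytes).
TCP_RMEM_MAX_ORIGINAL = 6_291_456
TCP_RMEM_MAX_ENLARGED = 12_291_456


def advertised_window(tcp_rmem_max: int) -> int:
    """Advertised receive window, assuming half of the tcp_rmem maximum
    ends up on the line (as observed in the traces of this post)."""
    return tcp_rmem_max // 2


def max_rtt_ms(window_bytes: int, throughput_mbps: float) -> float:
    """Longest round trip delay the window can cover at a given throughput."""
    return window_bytes * 8 / (throughput_mbps * 1_000_000) * 1000


def max_throughput_mbps(window_bytes: int, rtt_ms: float) -> float:
    """Throughput ceiling imposed by the window at a given round trip delay."""
    return window_bytes * 8 / (rtt_ms / 1000) / 1_000_000


for rmem_max in (TCP_RMEM_MAX_ORIGINAL, TCP_RMEM_MAX_ENLARGED):
    window = advertised_window(rmem_max)
    print(
        f"rwin {window / 2**20:.2f} MB: "
        f"max RTD at 500 Mbps = {max_rtt_ms(window, 500):.0f} ms, "
        f"ceiling at 50 ms RTD = {max_throughput_mbps(window, 50):.0f} Mbps"
    )
```

For the original setting, this gives a maximum round trip delay of roughly 50 ms at 500 Mbps, which matches the stalls seen whenever the delay climbed from 25 ms to 50 ms.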

So even though the receive window situation improved significantly, the throughput didn’t change very much. So why is that? It turns out that, by chance, the Wi-Fi link itself only just supports around 550 Mbps. Here are the physical channel parameters that were used:

    ~$ iwconfig
    wlp3s0    IEEE 802.11  ESSID:"eduroam"
              Mode:Managed  Frequency:5.22 GHz  Access Point: xx:xx:xx:xx:xx
              Bit Rate=866.7 Mb/s   Tx-Power=22 dBm
              Retry short limit:7   RTS thr:off   Fragment thr:off
              Power Management:on
              Link Quality=59/70  Signal level=-51 dBm

    ~$ sudo iw wlp3s0 station dump
        inactive time: 360 ms
        [...]
        signal: -51 [-51, -52] dBm
        signal avg: -53 dBm
        beacon signal avg: -51 dBm
        tx bitrate: 866.7 MBit/s VHT-MCS 9 80MHz short GI VHT-NSS 2
        rx bitrate: 866.7 MBit/s VHT-MCS 9 80MHz short GI VHT-NSS 2
        authorized: yes
        authenticated: yes
        associated: yes
        preamble: long
        WMM/WME: yes
        MFP: no
        TDLS peer: no
        DTIM period: 1
        beacon interval:100
        short slot time:yes

    ~$ sudo iw dev wlp3s0 scan | grep -A 150 associated | grep "count\|utili"
        * station count: 19
        * channel utilisation: 20/255

OK, so we have an 80 MHz 802.11ac channel, good signal conditions, and little traffic (channel utilization). This results in a physical layer speed of 866.7 Mbps, which translates into about 600-650 Mbps on the IP layer. Makes sense!
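As a quick cross-check of the 866.7 Mbps figure, here is a small Python sketch that derives the physical layer rate from the parameters in the `iw` output (VHT-MCS 9, 80 MHz, short GI, 2 spatial streams). The function name is invented for this example. The constants are the usual 802.11ac values: 234 data subcarriers in an 80 MHz channel, 256-QAM with 8 bits per subcarrier and a 5/6 coding rate for MCS 9, and a 3.6 µs symbol time with the short guard interval.

```python
def vht_phy_rate_mbps(
    data_subcarriers: int,
    bits_per_subcarrier: int,
    coding_rate: float,
    spatial_streams: int,
    symbol_time_us: float,
) -> float:
    """Physical layer rate in Mbps (bits per microsecond)."""
    bits_per_symbol = (
        data_subcarriers * bits_per_subcarrier * coding_rate * spatial_streams
    )
    return bits_per_symbol / symbol_time_us


phy_rate = vht_phy_rate_mbps(
    data_subcarriers=234,    # 80 MHz VHT channel
    bits_per_subcarrier=8,   # 256-QAM (MCS 9)
    coding_rate=5 / 6,       # MCS 9
    spatial_streams=2,       # VHT-NSS 2
    symbol_time_us=3.6,      # 3.2 us symbol + 0.4 us short GI
)
print(f"PHY rate: {phy_rate:.1f} Mbps")

# Share of the PHY rate that the throughput figures from this post represent.
for ip_mbps in (550, 600, 650):
    print(f"{ip_mbps} Mbps is {ip_mbps / phy_rate:.0%} of the PHY rate")
```

The gap between the physical layer rate and what arrives on the IP layer is the usual Wi-Fi overhead (preambles, acknowledgements, channel contention), so throughput in the range of two thirds to three quarters of the bit rate is plausible for a lightly loaded channel.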

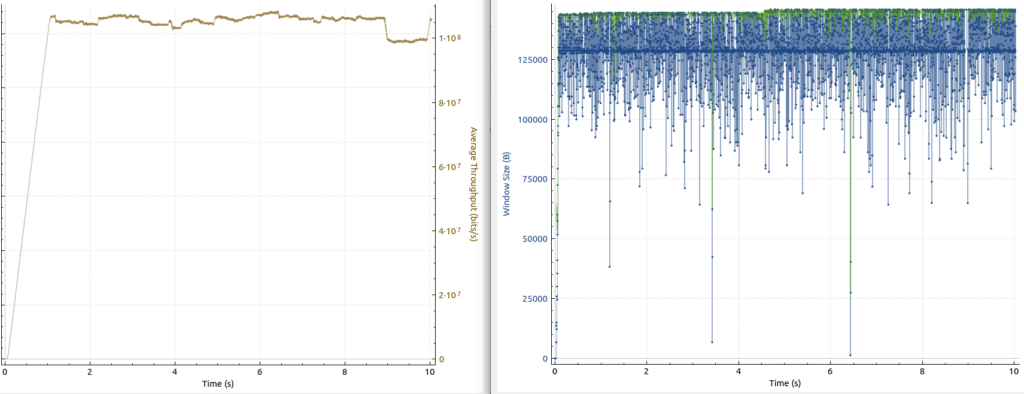

I didn’t want to go home without seeing the effect of a TCP receive window that is too small, so I decreased it to just 128KB on the VM in the data center. The final image in the post below shows the devastating effect of such a misconfiguration. The throughput graph, shown on the left, shows a meager 100 Mbps, and the graph on the right show that the bytes in flight permanently touch the maximum TCP receive window size, which stalls the data transfer.

So far so good. In the next episode, I’ll have a look at what throughput looks like between two servers in different data centers with a similar latency in between, but without Wi-Fi of course. So stay tuned!