So here we go: After the interesting but somewhat slow Starlink uplink throughput results discussed in part 5, let’s see whether the TCP BBR congestion control algorithm can improve the situation. BBR is not enabled out of the box, but I use it on all of my servers and workstations. BBR could be particularly helpful for uplink transmissions, as it is a sender-side measure. It therefore doesn’t matter which TCP congestion control algorithm is used on the receiver side, which, for this test, was a box in a data center.
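Because BBR is purely a sender-side choice, it can be checked and selected on the sending machine alone. The following is a minimal sketch, assuming a Linux sender whose kernel offers BBR. The helper names (`parse_algorithms`, `read_algorithms`, `try_set_congestion_control`) are made up for illustration, while the procfs entries and the `TCP_CONGESTION` socket option are the standard Linux ones.

```python
import socket
from pathlib import Path

# Standard Linux procfs directory holding the TCP congestion control settings.
PROC_DIR = Path("/proc/sys/net/ipv4")


def parse_algorithms(text):
    """Split the whitespace-separated algorithm list found in procfs."""
    return text.split()


def read_algorithms(name):
    """Read one procfs entry; return an empty list if it is unavailable."""
    try:
        return parse_algorithms((PROC_DIR / name).read_text())
    except OSError:
        return []


def try_set_congestion_control(sock, algorithm):
    """Ask the kernel to use `algorithm` for this one socket (sender side only).

    Returns True on success, False if the platform or kernel refuses.
    """
    option = getattr(socket, "TCP_CONGESTION", None)  # Linux-only constant
    if option is None:
        return False
    try:
        sock.setsockopt(socket.IPPROTO_TCP, option, algorithm.encode())
        return True
    except OSError:
        return False


if __name__ == "__main__":
    print("default:  ", read_algorithms("tcp_congestion_control"))
    print("available:", read_algorithms("tcp_available_congestion_control"))
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        print("bbr on this socket:", try_set_congestion_control(s, "bbr"))
```

To make BBR the system-wide default instead, the usual route is the `net.ipv4.tcp_congestion_control` sysctl; the per-socket variant above is handy when only a single test transfer should use it.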

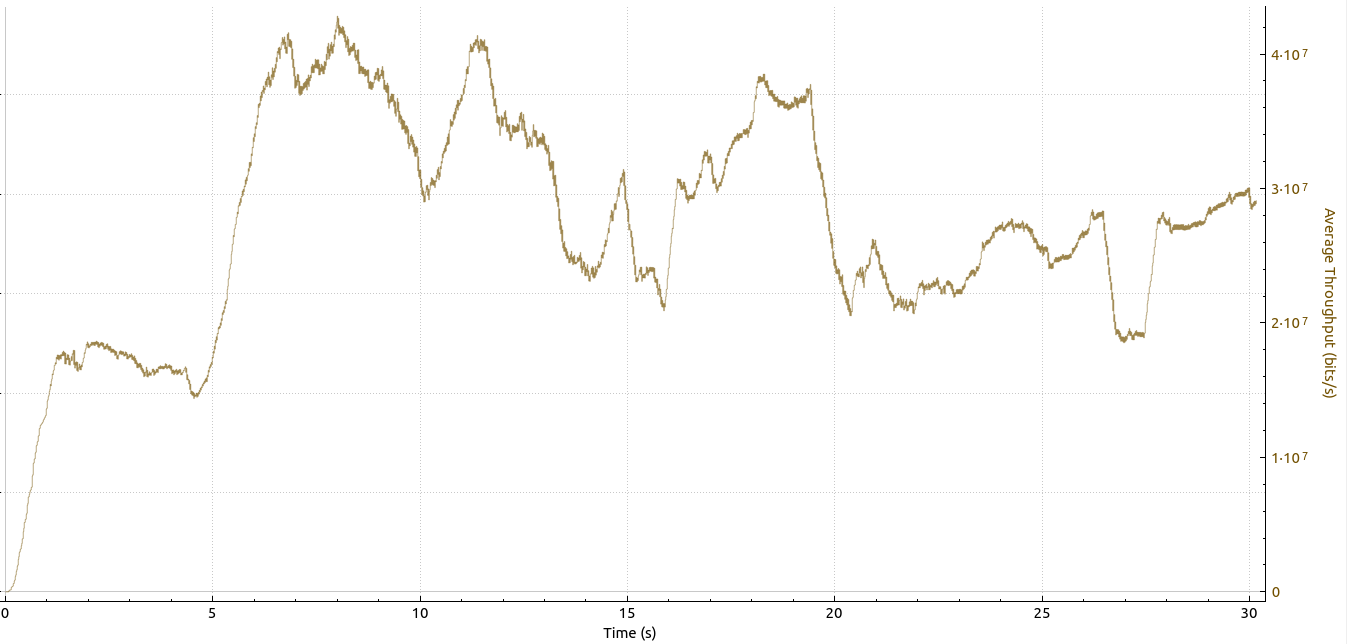

The Wireshark throughput graph above shows that throughput with BBR is significantly higher than with Cubic. In this uplink run, average throughput was well over 25 Mbps, while the uplink run in the previous post with Cubic averaged only 5-6 Mbps. Let’s have a look at Wireshark’s tcptrace sequence chart, which shows packet losses as red dots/lines:

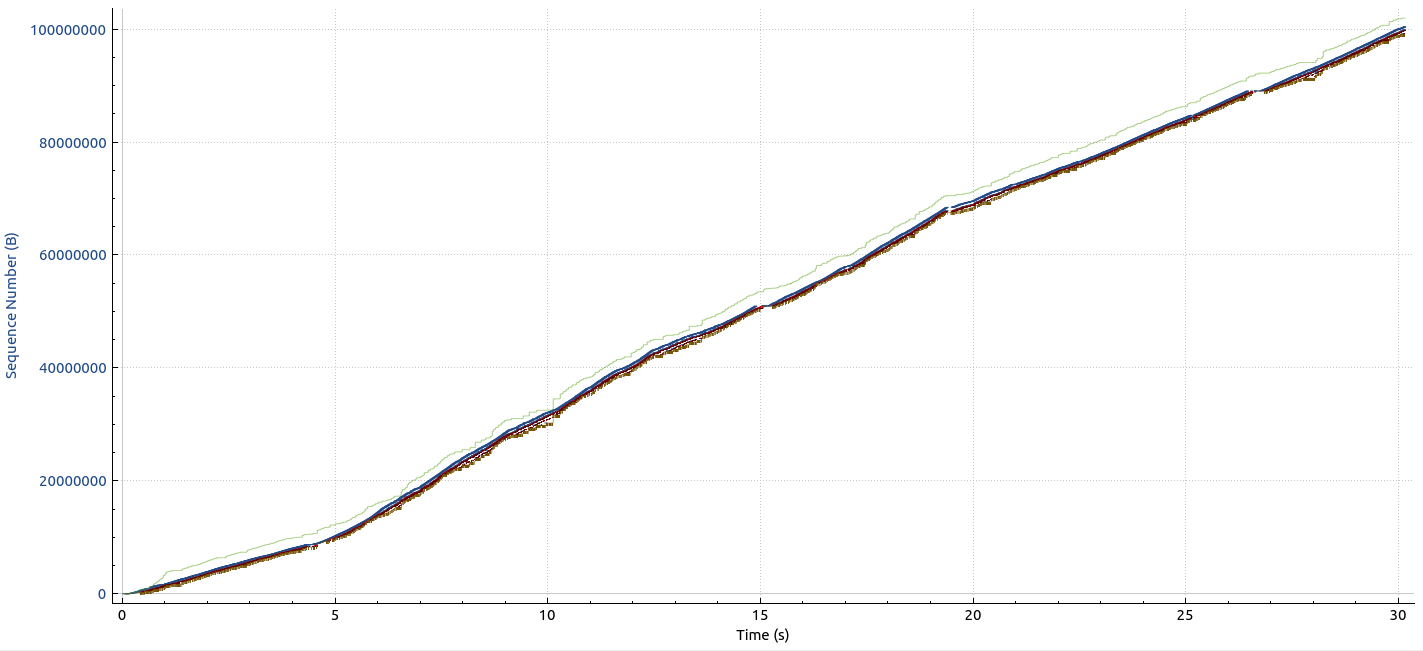

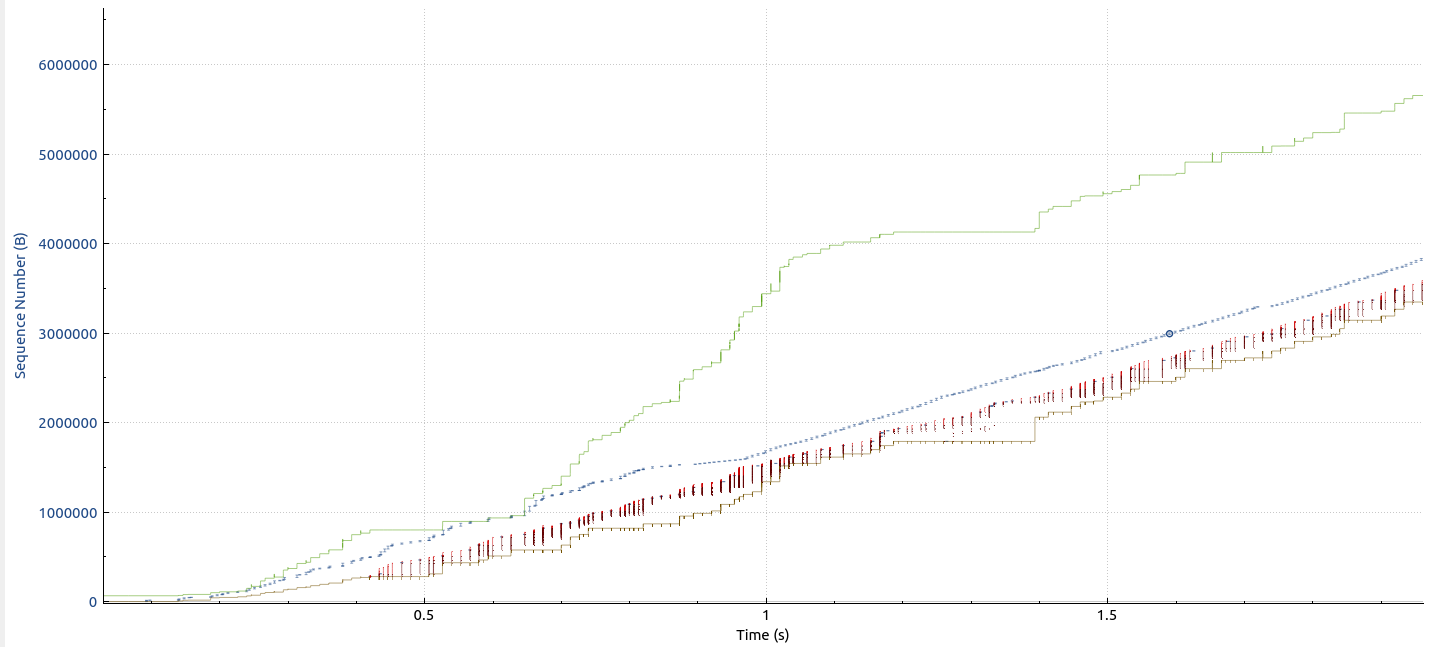

Right-click on the image, open it in a new tab and zoom to 100%. BBR really pushes the link; there’s packet loss all across the transfer. Total carnage! Here’s a graph of the first few seconds of the transmission:

There is an endless number of TCP Duplicate ACKs and TCP ‘Out of Order’ transmissions, so I didn’t even bother to count them. But that’s BBR: it doesn’t treat missing packets as its main congestion signal. Instead, it estimates the bottleneck bandwidth and the round-trip time of the path to determine how fast to send. Amazing!
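To illustrate why lost packets don’t slow BBR down the way they slow Cubic, here is a deliberately simplified toy model of the idea behind it: keep the highest recently observed delivery rate and the lowest observed round-trip time, and derive the amount of data to keep in flight from those two values. This is not the kernel implementation; the class name, the window size, the all-time minimum RTT and the sample numbers are assumptions for illustration, and real BBR adds pacing gains, probing phases and a time-limited RTT window.

```python
from collections import deque


class ToyBbrModel:
    """Toy path model: windowed-max bandwidth and minimum RTT.

    Packet loss is never an input, which is the point of the illustration.
    """

    def __init__(self, window=10):
        self.rates = deque(maxlen=window)  # recent delivery-rate samples, bit/s
        self.min_rtt = None                # lowest RTT seen so far, seconds

    def on_ack(self, delivered_bits, interval_s, rtt_s):
        """Feed one sample: bits acknowledged over an interval, plus the RTT."""
        self.rates.append(delivered_bits / interval_s)
        if self.min_rtt is None or rtt_s < self.min_rtt:
            self.min_rtt = rtt_s

    def bottleneck_bw(self):
        """Estimated bottleneck bandwidth: the best rate in the window."""
        return max(self.rates) if self.rates else 0.0

    def bdp_bits(self):
        """Bandwidth-delay product: how much data to keep in flight."""
        if self.min_rtt is None:
            return 0.0
        return self.bottleneck_bw() * self.min_rtt


if __name__ == "__main__":
    model = ToyBbrModel()
    # Hypothetical samples: (bits delivered, interval in s, RTT in s)
    for sample in [(5_000_000, 0.5, 0.060), (12_500_000, 0.5, 0.045),
                   (10_000_000, 0.5, 0.050)]:
        model.on_ack(*sample)
    print(f"bandwidth estimate: {model.bottleneck_bw() / 1e6:.1f} Mbit/s")
    print(f"min RTT:            {model.min_rtt * 1000:.0f} ms")
    print(f"data in flight:     {model.bdp_bits() / 8 / 1000:.0f} kB")
```

A loss-based algorithm such as Cubic would shrink its window on every one of the red dots in the trace, whereas nothing in this model reacts to them.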

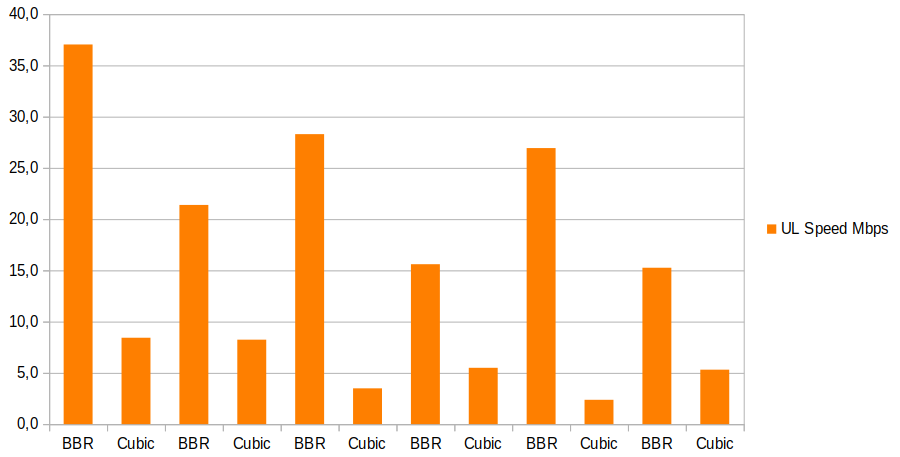

So perhaps this five-fold increase over TCP Cubic is just a one-off thing? It’s not, as the chart of 30-second uplink tests, run alternately with TCP BBR and TCP Cubic, clearly shows. In each case, BBR was significantly better than Cubic over the link.
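To put the difference into perspective, here is a quick back-of-the-envelope calculation. It assumes that the averages from the single runs above (at least 25 Mbps for BBR, 5-6 Mbps for Cubic) also hold for one 30-second test, which is an assumption for illustration and not a measured per-test result.

```python
def megabytes_sent(rate_mbps, seconds):
    """Data volume in megabytes at a constant rate in Mbit/s (1 MB = 8 Mbit)."""
    return rate_mbps * seconds / 8


TEST_SECONDS = 30        # length of each alternating test run
BBR_MBPS = 25.0          # "well over 25 Mbps", so treated as a lower bound
CUBIC_MBPS = (5.0, 6.0)  # Cubic averaged in the 5-6 Mbps range

print(f"BBR:   at least {megabytes_sent(BBR_MBPS, TEST_SECONDS):.1f} MB per run")
for rate in CUBIC_MBPS:
    volume = megabytes_sent(rate, TEST_SECONDS)
    print(f"Cubic: {volume:.1f} MB per run at {rate:.0f} Mbps "
          f"(BBR is at least {BBR_MBPS / rate:.1f}x faster)")
```

In other words, BBR moves upwards of 90 MB in the time Cubic manages around 20 MB, roughly a four- to five-fold difference.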

Wow, I didn’t expect this carnage, and even less the significantly improved throughput. Totally mind-boggling. Which of course leads me straight to the next question: How does Windows perform in the uplink direction? Here, no BBR is available. I guess this will require a separate post. To be continued.