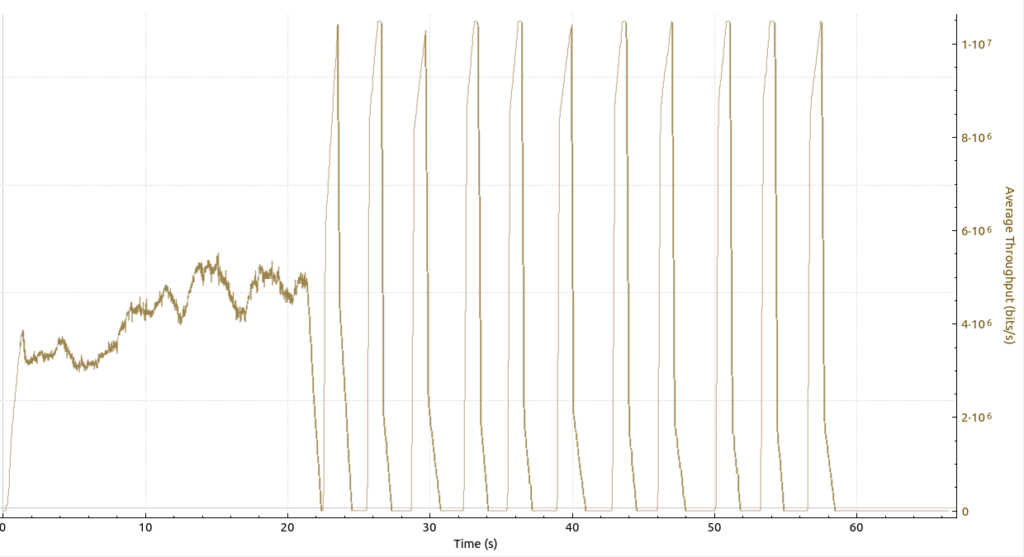

Wi-Fi tethering on Android is a great thing and has totally changed the way we use the mobile Internet. One thing Android does not handle well at all, however, is bulk uploads over Wi-Fi tethering, especially under bad LTE radio conditions with low throughput. The screenshot above shows what a big file upload looks like over the course of one minute. For the first 22 seconds of the transmission, the throughput graph looks quite reasonable: uplink throughput is around 4 Mbps. But at 22 seconds into the upload, the curve suddenly goes haywire. Average throughput stays at 4 Mbps, but things could not be more wrong. Let’s have a closer look at why that is and what can be done about it.

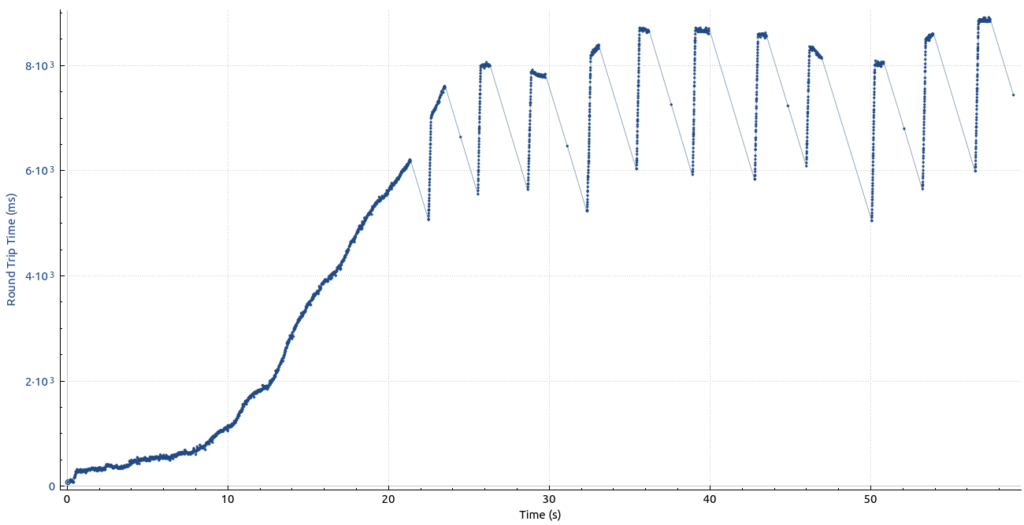

If the throughput stays at 4 Mbps, why should I care what the throughput graph looks like? Let’s have a look at the round trip delay time during the upload:

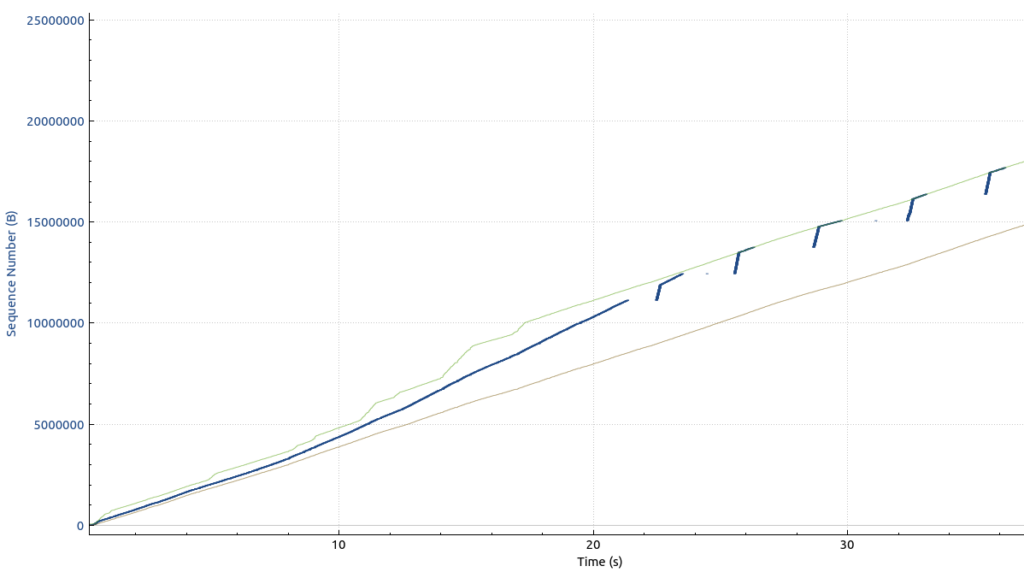

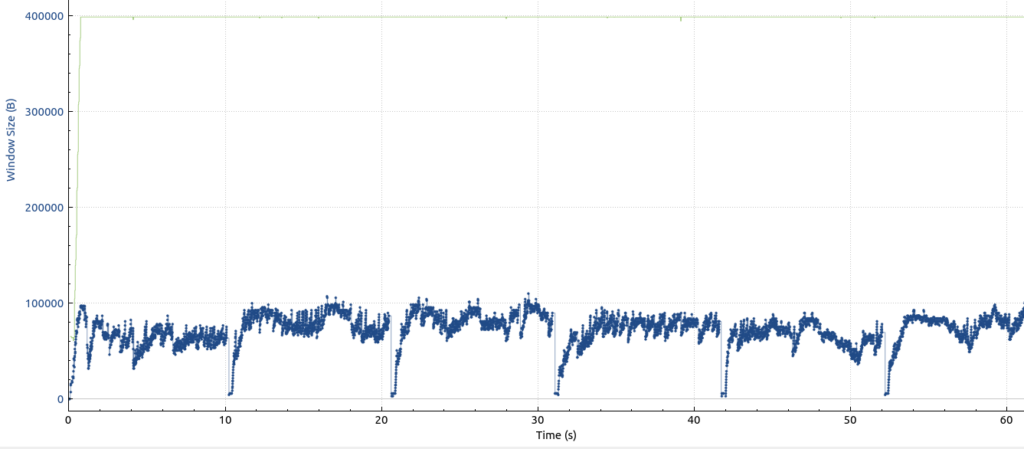

Until around 10 seconds into the transfer, the round trip delay time stays below 100 ms, so the link remains quite usable for other applications such as web surfing or IP-based voice calls. Beyond that point, however, round trip delays rise quickly as Android queues more and more IP packets in its uplink buffer. In practice, after the first 10 seconds it is no longer possible to do anything else over the link: Android’s uplink buffer is so large that a huge amount of data is waiting to be sent ahead of any new packet. Forget about web surfing; pages will just not load in any reasonable amount of time. The delay is also far too great for IP-based conference and voice calls that share the pipe. So what exactly happens at 22 seconds? A look at the TCP sequence number graph clearly shows what is going on:

Up to 22 seconds, the blue line, which represents the number of bytes in flight, remains below the green line, which represents the maximum receive buffer size (the TCP receive window advertised by the other side). At the 22-second mark, the TCP acknowledgements are delayed so much that the bytes in flight reach this limit, and my notebook has to stop transmitting and wait for the acknowledgements. They seem to come in as a burst, which then triggers a fast transmission of more data up to the maximum receive buffer size. Then the notebook has to wait for the acknowledgements again.
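Both effects come down to simple arithmetic. A packet entering a FIFO buffer has to wait until everything queued ahead of it has been sent at the bottleneck rate. TCP also needs roughly rate × RTT bytes in flight to keep a link busy, and the receive window caps that amount. The Python sketch below puts numbers on both. It assumes a plain FIFO queue and the ~4 Mbps uplink from the graphs. The backlog sizes and the 3 MB receive window are hypothetical illustration values, not figures taken from the traces above.

```python
"""Back-of-the-envelope numbers for a bloated uplink buffer.

Assumptions (hypothetical, not measured values from the traces):
the bottleneck is a ~4 Mbps LTE uplink and the queue is a plain FIFO.
"""

LINK_RATE_BPS = 4_000_000  # ~4 Mbps uplink, as seen in the throughput graph


def queuing_delay_ms(queued_bytes: int, rate_bps: float = LINK_RATE_BPS) -> float:
    """Extra delay (ms) a new packet sees behind `queued_bytes` in a FIFO queue."""
    return queued_bytes * 8 * 1000 / rate_bps


def bytes_in_flight(rtt_s: float, rate_bps: float = LINK_RATE_BPS) -> float:
    """Unacknowledged bytes needed to keep the link busy: rate x RTT."""
    return rate_bps * rtt_s / 8


def stall_rtt_s(rwnd_bytes: int, rate_bps: float = LINK_RATE_BPS) -> float:
    """RTT at which rate x RTT reaches the receive window.

    Beyond this point the receive window, not the congestion window, limits
    the sender: it cannot add more data until acknowledgements arrive and
    open the window again.
    """
    return rwnd_bytes * 8 / rate_bps


if __name__ == "__main__":
    # Hypothetical backlogs in the phone's uplink buffer.
    for backlog in (50_000, 500_000, 2_000_000):
        print(f"{backlog:>9} bytes queued -> +{queuing_delay_ms(backlog):.0f} ms")
    # Hypothetical 3 MB receive window on the far side.
    print(f"window-limited above RTT = {stall_rtt_s(3_000_000):.1f} s")
```

At 4 Mbps, every 500 KB of backlog adds a full second of delay. This is why a generously sized uplink buffer turns an otherwise usable link into one where web pages and voice calls time out. Once the self-inflicted RTT grows large enough, the sender is also throttled by the receive window. That is consistent with the stop-and-go pattern in the sequence number graph.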

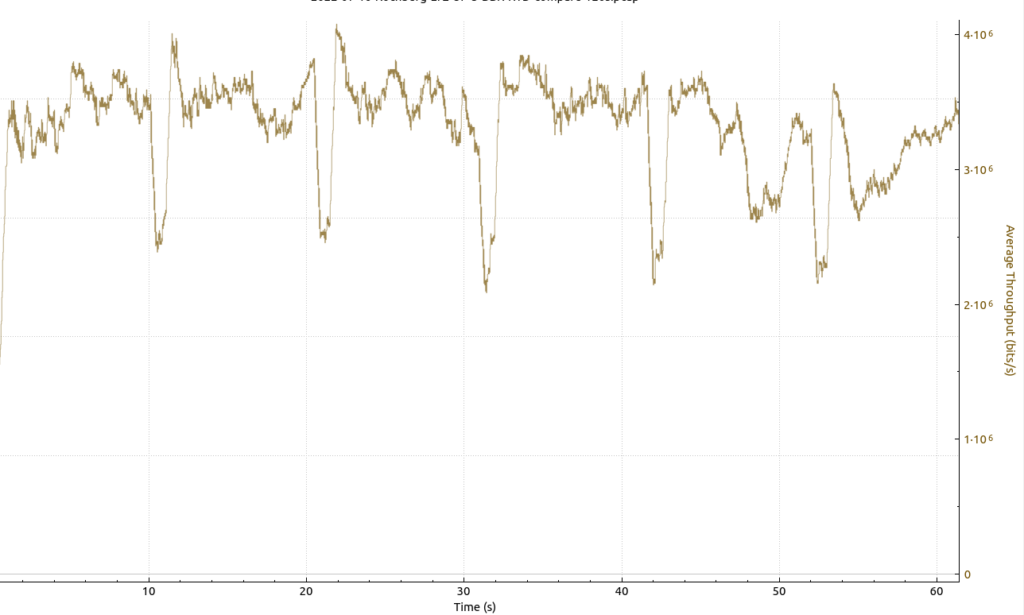

The best way to fix this would be for Google to introduce anti-bufferbloat algorithms in Android. I don’t see that anywhere on the horizon, though. Fortunately, there are two other ways to fix this. The first is to put a router with an intelligent anti-bufferbloat mechanism between the sending device and the Android device. That requires a bit of extra hardware, though. The second is to use a different TCP congestion control algorithm on the sending device. In Linux, one can conveniently switch from the default ‘Cubic’ to the much more advanced ‘BBR’ algorithm. With BBR, the link behaves completely differently. Here’s a screenshot of the throughput graph with BBR instead of Cubic on the same link:

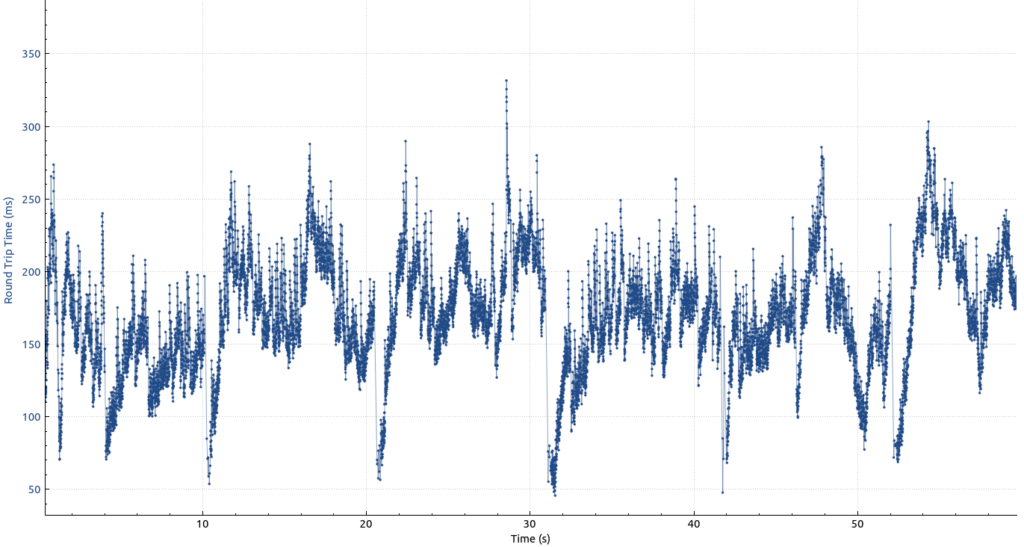

The graph looks great. And here’s the round trip delay time during the transmission:

While there is a bit of variance, BBR keeps the round trip delay time below about 200 milliseconds on this link. That keeps the transmission stable, and the bytes in flight stay well below the TCP receive window limit on the other side:

Yes, this looks nice as well! And while 150-200 ms round trip delay time will not win any prizes, web browsing and voice applications will remain usable during the bulk uplink data transfer!
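You may want to reproduce the switch from Cubic to BBR on a Linux notebook. The system-wide default is set by the sysctl `net.ipv4.tcp_congestion_control`, for example `sysctl -w net.ipv4.tcp_congestion_control=bbr` as root. BBR needs kernel 4.9 or later and the `tcp_bbr` module, if it is not built in. A single program can also pick BBR for its own connections through the `TCP_CONGESTION` socket option. The Python sketch below is a minimal, Linux-only illustration of both. It is not the setup used for the measurements above, and the helper names are hypothetical. If BBR is missing, or not permitted for unprivileged users, it falls back to the system default.

```python
"""Check and select the TCP congestion control algorithm on Linux.

Linux-only sketch: reads /proc/sys/net/ipv4 and uses socket.TCP_CONGESTION
(Python 3.6+). Helper names are illustrative, not an existing API.
"""
from __future__ import annotations

import socket
from pathlib import Path

PROC = Path("/proc/sys/net/ipv4")


def parse_algorithms(text: str) -> list[str]:
    """Split a /proc list such as 'reno cubic bbr' into algorithm names."""
    return text.split()


def read_proc(name: str) -> str:
    """Return the contents of /proc/sys/net/ipv4/<name>, or '' if unavailable."""
    try:
        return (PROC / name).read_text().strip()
    except OSError:
        return ""


def decode_cc_name(raw: bytes) -> str:
    """getsockopt() returns a fixed-size buffer padded with NUL bytes."""
    return raw.split(b"\x00", 1)[0].decode("ascii")


def open_socket_with_cc(algorithm: str = "bbr") -> socket.socket:
    """Create a TCP socket using `algorithm`, keeping the default on failure.

    Unprivileged processes may only select algorithms listed in
    net.ipv4.tcp_allowed_congestion_control; other choices are rejected.
    """
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    try:
        # Set before connect() so the whole upload uses this algorithm.
        sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_CONGESTION, algorithm.encode())
    except (OSError, AttributeError):
        pass  # not Linux, module not loaded, or not permitted: keep the default
    return sock


def socket_cc(sock: socket.socket) -> str:
    """Name of the congestion control algorithm currently set on `sock`."""
    return decode_cc_name(sock.getsockopt(socket.IPPROTO_TCP, socket.TCP_CONGESTION, 16))


if __name__ == "__main__":
    print("default:  ", read_proc("tcp_congestion_control") or "unknown")
    print("available:", parse_algorithms(read_proc("tcp_available_congestion_control")))
    if hasattr(socket, "TCP_CONGESTION"):
        try:
            with open_socket_with_cc("bbr") as s:
                print("new socket uses:", socket_cc(s))
        except OSError as exc:
            print("could not query socket:", exc)
```

The sysctl changes the default for every new connection on the machine. That suits an upload tool you cannot modify. The socket option only affects programs that explicitly set it.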