After recently upgrading my VDSL line at home, it was also time to upgrade my Wi-Fi equipment to support the higher speeds. When doing some speed tests after the upgrade, I noticed to my satisfaction that my Wi-Fi connectivity now easily supports the 50 MBit/s VDSL line. I also noticed, however, that downloading a file from a local web server at 100 MBit/s takes a heavy toll on my CPU: it’s almost completely maxed out. At first I suspected the Wi-Fi driver of causing the high load, but I soon found out that Firefox is the actual culprit.

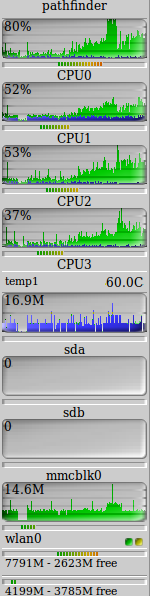

The screenshot above tells the story. When using SCP to download the file at around 100 MBit/s (see the wlan0 graph), the CPU loads shown in the top four graphs are quite low, at around 10%. That’s what I would expect. In the middle of the graphs the SCP transfer comes to an end. I had started downloading the same file from the same server with Firefox while the SCP transfer was still running, which is why there is no gap between the two transfers. As soon as the Firefox HTTPS download starts, however, CPU usage goes through the roof: on average, all CPU cores are at 50% load while Firefox transfers the file.

From this observation it is clear that the Wi-Fi driver is not the inefficient part; something in Firefox is. Perhaps the encrypted HTTPS transmission requires additional CPU resources, although that’s unlikely, as SCP uses an encrypted SSH tunnel and thus also has to decrypt the incoming data stream. To cross-check, I ran the same test again with wget, this time over HTTPS instead of SCP’s SSH tunnel. As with SCP, the CPU load only went up to around 10%. In other words, it’s Firefox that is creating this incredible overhead.
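For anyone who wants to repeat this kind of cross-check without watching load graphs, here is a minimal sketch in Python. It is not what I used for the measurements above; the function names (`measure_stream`, `summarize`, `measure_url`) are made up for illustration, and it only counts the CPU time of the downloading process itself, not driver or kernel work attributed elsewhere, so treat the numbers as a rough indication.

```python
import time
import urllib.request


def measure_stream(stream, chunk_size=64 * 1024):
    """Read a binary stream to the end and discard the data.

    Returns the number of bytes read, the elapsed wall-clock time and
    the CPU time (user + system) consumed by this process meanwhile.
    """
    wall_start = time.perf_counter()
    cpu_start = time.process_time()
    total = 0
    while True:
        chunk = stream.read(chunk_size)
        if not chunk:
            break
        total += len(chunk)
    return {
        "bytes": total,
        "wall_s": time.perf_counter() - wall_start,
        "cpu_s": time.process_time() - cpu_start,
    }


def summarize(result):
    """Turn the raw counters into (Mbit/s, CPU share of one core)."""
    wall = max(result["wall_s"], 1e-9)  # avoid division by zero
    mbit_per_s = result["bytes"] * 8 / 1e6 / wall
    cpu_share = result["cpu_s"] / wall
    return mbit_per_s, cpu_share


def measure_url(url):
    """Download a URL (HTTP or HTTPS) while measuring throughput and CPU time."""
    with urllib.request.urlopen(url) as response:
        return measure_stream(response)
```

Calling `summarize(measure_url(url))` with the address of a large file on a local server gives the throughput in Mbit/s and the fraction of one CPU core the download needed. A value far above what such a simple client reports would point at the application rather than at the network stack.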

The takeaway is that file downloads with Firefox are no longer the way to go for testing high-speed network throughput, at least until the code base is tidied up in that area.