In the first part of this “Real World Performance” blog series, I looked at the performance of a 2 TB Crucial P2 NVMe SSD I installed in my Lenovo X13 notebook. While it did its job well, I was rather underwhelmed by its performance. So after a few weeks, I replaced that drive with a 2 TB Samsung 970 Evo Plus NVMe SSD. As the specified write speed of that drive goes far beyond the speed at which I can read data from any other drive I have for testing, I set up a RAM disk with a huge file on it in the second part of this series. So here’s part 3 with my results for the Samsung 970 Evo Plus in my X13 notebook when reading from the RAM disk, and for my real world scenarios:

The Non-Real Life Scenario: Copying From RAM Disk

When copying huge files from the RAM disk to the SSD, I get a data transfer rate of around 1 GB/s. This is about the same speed as I got on the Crucial drive, except that there is no slowdown whatsoever after the fast flash memory on the drive becomes full. No matter how many hundreds of gigabytes of data I wrote to the drive, the speed stayed at 1 GB/s. Also, it didn’t matter if I wrote to a LUKS encrypted or a plain partition on the drive. So that’s good to know, LUKS doesn’t slow me down in this scenario. CPU load, however, is quite different between the two.

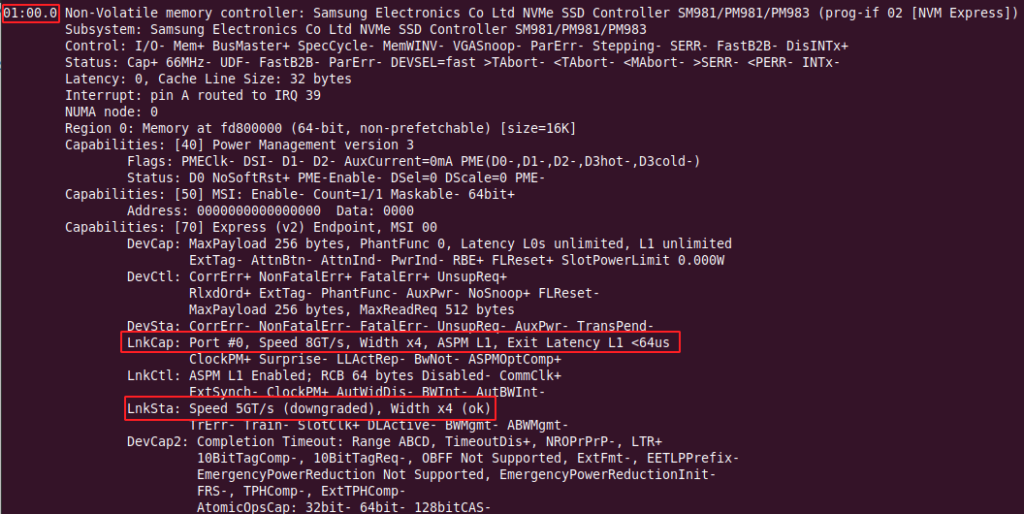
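For anyone who wants to reproduce this kind of measurement, here is a minimal Python sketch of a timed copy. It is not the method used for the numbers above, which came from ordinary copy commands. The function names `copy_and_sync` and `measure_copies` are made up for this illustration, and the 16 MiB chunk size is an arbitrary assumption. The `fsync` call matters: without it, the timing mostly measures how fast data lands in the page cache rather than on the drive. The helper accepts several source/destination pairs so the same measurement can be repeated with concurrent copies.

```python
import os
import tempfile
import time
from concurrent.futures import ThreadPoolExecutor


def copy_and_sync(src, dst, chunk_size=16 * 1024 * 1024):
    """Copy src to dst in chunks, then fsync so the data has left the page cache."""
    copied = 0
    with open(src, "rb") as fin, open(dst, "wb") as fout:
        while True:
            chunk = fin.read(chunk_size)
            if not chunk:
                break
            fout.write(chunk)
            copied += len(chunk)
        fout.flush()
        os.fsync(fout.fileno())
    return copied


def measure_copies(pairs):
    """Run all (src, dst) copies concurrently.

    Returns (total bytes copied, elapsed seconds, throughput in MB/s).
    """
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=len(pairs)) as pool:
        sizes = list(pool.map(lambda pair: copy_and_sync(*pair), pairs))
    elapsed = time.perf_counter() - start
    total = sum(sizes)
    return total, elapsed, total / elapsed / 1e6


if __name__ == "__main__":
    # Tiny self-contained demo in a temporary directory (hypothetical sizes).
    with tempfile.TemporaryDirectory() as workdir:
        source = os.path.join(workdir, "source.bin")
        with open(source, "wb") as f:
            f.write(os.urandom(8 * 1024 * 1024))
        jobs = [(source, os.path.join(workdir, f"copy{i}.bin")) for i in range(2)]
        total, elapsed, rate = measure_copies(jobs)
        print(f"{total} bytes in {elapsed:.3f} s = {rate:.1f} MB/s")
```

For a real test, the source would be a large file on the RAM disk and the destinations would sit on the SSD. Threads are used here for brevity; separate processes would be closer to running several independent copy commands.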

Still, 1 GB/s is far from the advertised throughput of 3 GB/s until the fast flash cells are full. So I ran several copy commands simultaneously to see if a deeper write queue would change things. And indeed, if more than just a single CPU core works on shuffling data to the SSD, the write speed increases to around 1.6 GB/s with at least 3 simultaneous copy commands running. When copying 5 files simultaneously, overall CPU load goes to around 50% as 5 of the 8 cores of the CPU become very busy. OK, that’s nice, but still far away from the 3 GB/s that others are seeing in their tests. So what could be the reason for that? After a bit of searching I found out that the PCIe link to the device was running in PCIe v2 mode instead of the faster PCIe v3 mode that is supported by the SSD and, according to the specs, also by the notebook. Here’s what lspci had to say:

sudo lspci -vvs 01:00.0

As the drive has four PCI Express lanes, driving each of them at 500 MB/s (v2) instead of 985 MB/s (v3) would very well explain this. Have a look at Wikipedia for the details. I checked the BIOS, but there was no option to change the PCIe link speed to the drive. Miraculously, when I had another look a few days and a reboot or two later, the link was no longer downgraded. So I gave it another try. Copying 4 files from the RAM disk simultaneously to a non-encrypted partition still resulted in a speed of 1.6 GB/s. So while this leaves me a bit puzzled, it is still good enough for me, because what really counts is that the drive can sustain this speed even when I occasionally copy several hundred gigabytes of data at a time.
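The per-lane figures come straight from the signalling rate and the line encoding of each PCIe generation: v2 runs at 5 GT/s with 8b/10b encoding, v3 at 8 GT/s with 128b/130b encoding. Here is a small sketch of that arithmetic. The function names are made up for this illustration, and the results are raw link rates before any protocol overhead, so real-world throughput will be somewhat lower.

```python
# Per-lane signalling rate in GT/s and line encoding (payload bits, line bits).
PCIE_GENERATIONS = {
    2: (5.0, 8, 10),     # PCIe v2: 8b/10b encoding
    3: (8.0, 128, 130),  # PCIe v3: 128b/130b encoding
}


def lane_mb_per_s(generation):
    """Raw data rate of a single lane in MB/s, after line encoding."""
    gt_per_s, payload_bits, line_bits = PCIE_GENERATIONS[generation]
    bits_per_s = gt_per_s * 1e9 * payload_bits / line_bits
    return bits_per_s / 8 / 1e6


def link_mb_per_s(generation, lanes=4):
    """Raw data rate of a link with the given number of lanes in MB/s."""
    return lane_mb_per_s(generation) * lanes


if __name__ == "__main__":
    for gen in (2, 3):
        print(f"PCIe v{gen}: {lane_mb_per_s(gen):.0f} MB/s per lane, "
              f"{link_mb_per_s(gen):.0f} MB/s over 4 lanes")
```

With four lanes, a v2 link tops out at about 2 GB/s, which is below the advertised 3 GB/s, while a v3 link has room for about 3.9 GB/s.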

Performance When Consolidating Virtual Machine Images

So the next question was how much speed I could get out of this setup in various real life scenarios in which a lot of data but no RAM disk is involved. One of the most data intensive tasks I run occasionally is consolidating VirtualBox VM disk images. These typically have file sizes of 50+ GB. Removing snapshots that are no longer required means reading these files, throwing away unused snapshot data and writing the result to a new file. On my X13, this now runs at around 500 MB/s. Obviously, that’s far slower than the speed I can reach with a RAM disk and without much computation happening in the background. Compared to my previous notebook with an older CPU and a SATA SSD, however, the performance gain is quite impressive.

Transferring Virtual Machine Images

Another task that involves huge files is transferring virtual machine images from my workstation PC to my X13 notebook. For this purpose, I use a 2.5 GbE link between the two, and the X13 is able to push around 200 MB/s over it via an ssh/sftp connection. And indeed, the SSD obviously has no problem storing data at this speed. Again, this is twice as fast as with the Crucial P2 NVMe I used before, which slowed down to 100 MB/s after a while, and also a lot faster than what I used to get with my previous notebook, which couldn’t get 200 MB/s out of the 2.5 GbE interface. Yes, 200 MB/s is not the limit of 2.5 GbE, but it’s the limit of what ssh/sftp can do from a CPU point of view in my setup.
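To put these numbers side by side, here is a rough back-of-the-envelope sketch. The helper names are made up for this illustration, the line rate ignores Ethernet and TCP overhead, and the 50 GB image size is simply the typical size mentioned earlier.

```python
def line_rate_mb_per_s(gbit_per_s):
    """Raw line rate in MB/s for a link speed in Gbit/s (no protocol overhead)."""
    return gbit_per_s * 1000 / 8


def transfer_seconds(size_gb, rate_mb_per_s):
    """Time in seconds to move size_gb gigabytes at a steady rate in MB/s."""
    return size_gb * 1000 / rate_mb_per_s


if __name__ == "__main__":
    raw = line_rate_mb_per_s(2.5)
    print(f"2.5 GbE raw line rate: {raw:.1f} MB/s")
    print(f"200 MB/s is {200 / raw:.0%} of that")
    for rate in (200, 100):
        seconds = transfer_seconds(50, rate)
        print(f"50 GB at {rate} MB/s: {seconds:.0f} s")
```

So the ssh/sftp transfer uses roughly two thirds of what the link could carry, and a 50 GB image takes a bit over 4 minutes at 200 MB/s compared to more than 8 minutes at 100 MB/s.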

Program Startup and Concurrency

Compared to the previous NVMe drive and to the previous Lenovo X250 notebook with a SATA SSD, I also noticed that latency has gone down quite a bit again. LibreOffice, for example, opens so fast now that the splash screen can hardly be seen. When clicking on the program icon, the Writer window just opens in the blink of an eye. This is still the case while I consolidate VM images or while I run backups over Ethernet or to a USB attached hard disk. There are always a few CPU cores left waiting to work on other things in addition to shuffling data back and forth, and the SSD has no problems reading and writing data while other bulk data transfers are ongoing.

The Bottom Line

So in summary, it’s nice that the X13 and the Samsung 970 Evo Plus support PCIe v3, but I’m unable to leverage the full write performance that the drive offers. That being said, the speed is still breathtaking, but it does put reviews that look at SSD performance into perspective. The main takeaway for me: the sustained write performance and the latency of the drive are the most important parameters for my daily use. And here, the 970 Evo Plus runs circles around my initial NVMe drive.