In part 3 of this series, I took a look at Starlink’s downlink performance with the non-standard TCP BBR congestion avoidance algorithm. Overall, I was quite happy with the result: despite the variable channel and a fair amount of packet loss, BBR kept overall throughput quite high. Cubic, the standard TCP congestion avoidance algorithm, is not as tolerant of packet loss, so I was anxious to see how the system behaves in the default Linux TCP configuration. And it’s not pretty, I’m afraid.

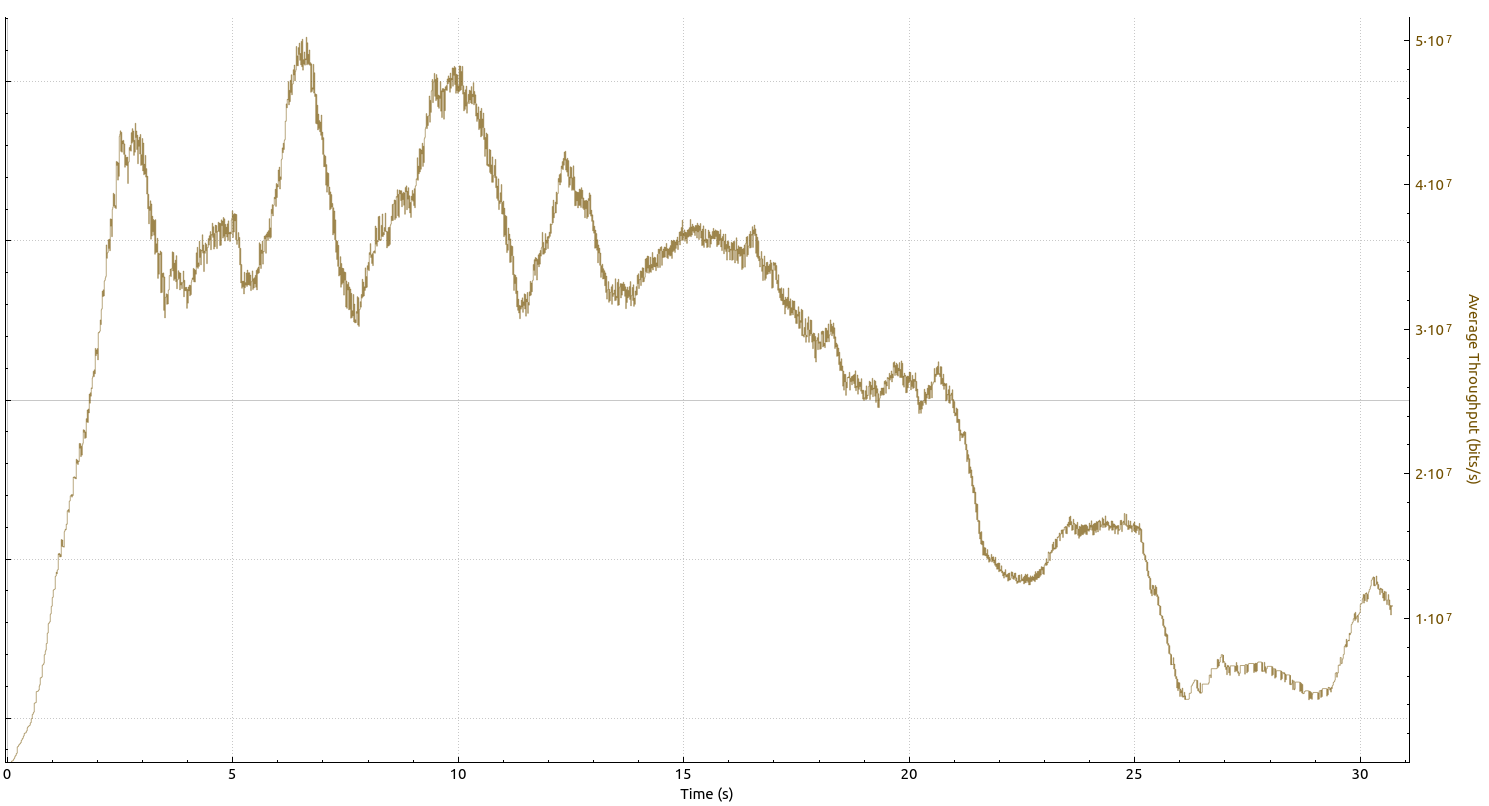

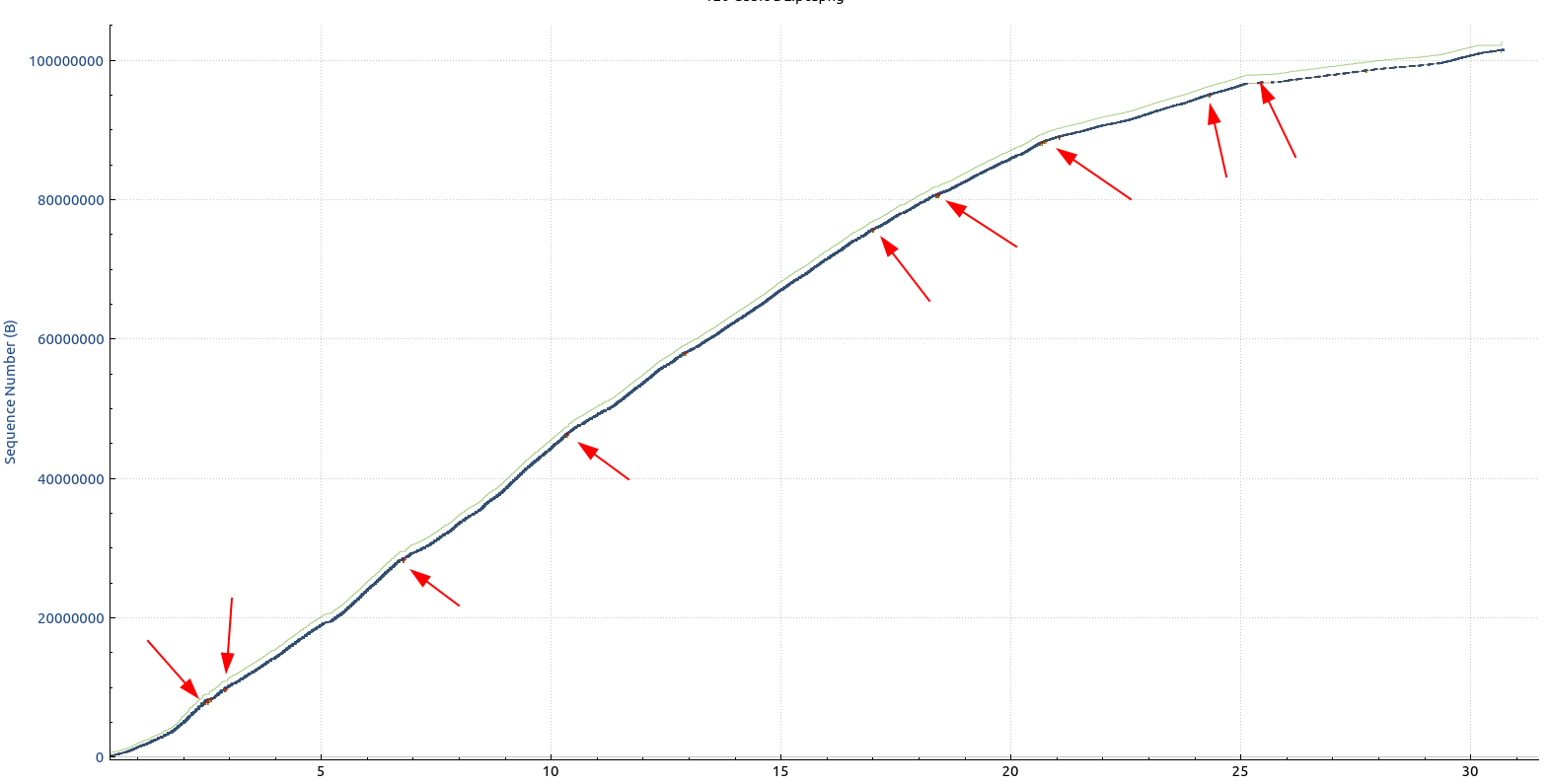

The graph above shows the TCP throughput over Starlink when using the standard Cubic congestion avoidance algorithm. It looks quite similar to the graph in the previous post with the BBR congestion avoidance algorithm. However, there is one significant difference: while BBR was able to keep the speed well above 120 Mbps on average, Cubic falls far short, and a 30-second iPerf3 data transfer ends up at an average of only around 20 Mbps. The graph below shows that there are far fewer missing packets in this 30-second transmission compared to the BBR run in the previous post, but Cubic throttles back significantly as a result and never really recovers.

For more details, right-click on the graphs, open them in a new tab and zoom in.

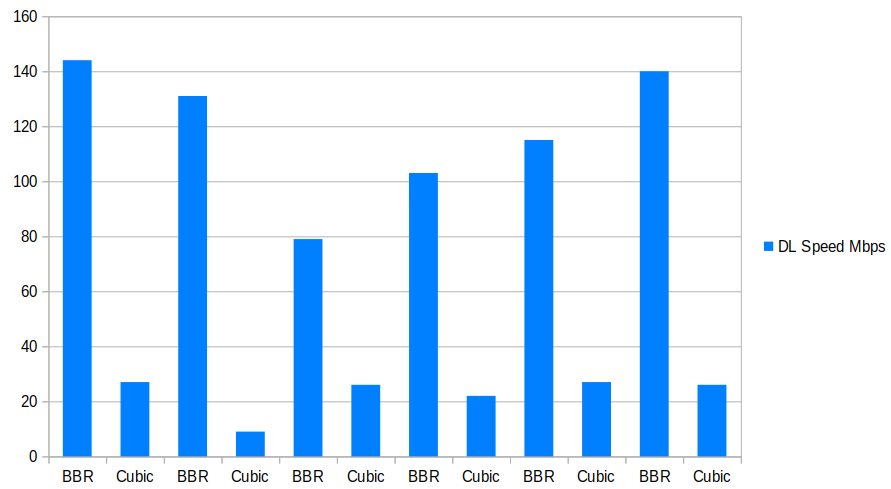

So perhaps the channel was just bad the minute I ran the test!? To rule this out, I ran sequential 30-second speed tests, alternating BBR and Cubic on both sides. I also wanted to rule out any influence of the test location, so I ran 3 cycles in one place and the next 3 cycles at a different location. Here’s what the result looks like:

The graph clearly shows that the data throughput over the satellite link is very variable over time, and it also shows how far superior BBR is to the standard Cubic congestion avoidance algorithm. In five out of six iPerf3 runs, BBR pushed the average throughput well over 100 Mbps, while in five out of six iPerf3 runs with Cubic, the average throughput barely exceeded 20 Mbps.
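The alternating test sequence could be scripted roughly as follows. This is a hypothetical sketch, not the exact invocation used for the measurements: it assumes an iperf3 server reachable under the placeholder name `iperf.example.net`, uses iperf3’s `-R` option so that the server sends (i.e. the downlink is measured), `-t 30` for 30-second runs, and the Linux-only `-C` option to request a congestion control algorithm for the test. Whether the server side actually honors the requested algorithm depends on the iperf3 version and the server’s kernel, so it’s worth verifying.

```python
import subprocess
import sys

# Placeholder server name (hypothetical); replace with a real iperf3 server.
SERVER = "iperf.example.net"
ALGORITHMS = ("bbr", "cubic")


def build_test_plan(server: str, cycles: int, duration_s: int = 30) -> list:
    """Return iperf3 command lines that alternate BBR and Cubic.

    Each cycle contains one BBR and one Cubic downlink test:
    -R reverses the direction so the server sends (downlink),
    -t sets the test duration in seconds,
    -C (Linux only) requests the TCP congestion control algorithm.
    """
    plan = []
    for _ in range(cycles):
        for algo in ALGORITHMS:
            plan.append(
                ["iperf3", "-c", server, "-R", "-t", str(duration_s), "-C", algo]
            )
    return plan


def run_plan(plan: list) -> None:
    """Execute the planned runs one after the other."""
    for cmd in plan:
        print("Running:", " ".join(cmd), flush=True)
        # check=False: keep going even if a single run fails.
        subprocess.run(cmd, check=False)


if __name__ == "__main__":
    # 3 cycles per location; run the script once at each location.
    test_plan = build_test_plan(SERVER, cycles=3)
    if "--run" in sys.argv:
        run_plan(test_plan)
    else:
        # Dry run: only print the commands.
        for cmd in test_plan:
            print(" ".join(cmd))
```

Without `--run`, the script only prints the six commands, which makes it easy to check the sequence before spending satellite capacity on it.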

But is it really packet loss? While running those downlink throughput tests, I kept an ICMP ping running, and not a single one of those packets was lost during all tests. So perhaps it’s packet reordering caused by retransmission and late reassembly of smaller air interface packets below the IP layer? That’s hard to tell, as I could not find any information on the Starlink air interface on the net. I had a look at the Wireshark trace, but could only see that after up to 18 duplicate ACKs (!), the missing packet was eventually delivered, and only once. In three places where packet loss occurred, the missing packet arrived after 35, 60 and 75 ms. That speaks against the idea that the lower layers recover the IP packet and deliver it late: if that were the case, both the late original and the TCP retransmission would arrive, and the trace would contain duplicate packets.

As for the pings, the fact that none of them was lost may just be a matter of chance, as the 30-second trace contains 25,600 packets from the server but only 30 ICMP ping downlink packets. And indeed, out of those 25,600 packets from the server, only 15 were marked by Wireshark as ‘TCP Previous segment not captured’. That’s one lost packet every 2 seconds, or 1 in every 1,706 packets, or 0.0586%. That’s not much, but it seems to be enough to make Cubic stall.
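The loss figures above are simple arithmetic. The short sketch below reproduces them from the trace numbers and adds one step, under an assumption that the trace itself does not confirm: that losses are independent and hit ping replies at the same rate as TCP segments. Under that assumption, it estimates how many of the 30 pings would be expected to get lost.

```python
# Figures taken from the Wireshark trace described above.
server_packets = 25_600   # packets sent by the server in the 30 s trace
lost_packets = 15         # marked 'TCP Previous segment not captured'
trace_duration_s = 30
ping_packets = 30         # ICMP ping downlink packets in the same trace

loss_rate = lost_packets / server_packets
print(f"loss rate: {loss_rate:.4%}")
print(f"one loss every {server_packets // lost_packets} packets")
print(f"one loss every {trace_duration_s / lost_packets:.1f} s")

# Assumption: independent losses at the same rate for ping and TCP packets.
expected_lost_pings = ping_packets * loss_rate
p_no_ping_lost = (1 - loss_rate) ** ping_packets
print(f"expected lost pings: {expected_lost_pings:.3f}")
print(f"probability that no ping is lost: {p_no_ping_lost:.3f}")
```

With these numbers, fewer than 0.02 lost pings would be expected over the whole trace, so a loss-free ping series says very little about whether the TCP stream saw packet loss.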

Wow, I was very surprised by this result; I would have expected the difference to be much smaller. Unfortunately, switching the TCP stack to BBR on the receiver side won’t help on the downlink: congestion avoidance is performed by the sender, so BBR needs to be set on the server side. And obviously, in most scenarios one doesn’t have control over the server. Which leads directly to the next question: How well does Starlink’s uplink work with Cubic vs. BBR? Stay tuned for the next part.
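For readers who do control a Linux server, the sender-side algorithm can be changed system-wide with the `net.ipv4.tcp_congestion_control` sysctl, or per socket with the `TCP_CONGESTION` socket option, which Python exposes as `socket.TCP_CONGESTION` on Linux. The helper functions below are hypothetical and not part of the test setup described here. They are a minimal sketch assuming a Linux host: they read `/proc/sys/net/ipv4/tcp_available_congestion_control` and, if BBR is listed, request it for a socket; otherwise the kernel default (usually Cubic) stays in place. BBR may only be listed after the `tcp_bbr` kernel module has been loaded, and unprivileged processes can only select algorithms listed in `tcp_allowed_congestion_control`.

```python
import socket
from pathlib import Path
from typing import List, Optional

AVAILABLE = Path("/proc/sys/net/ipv4/tcp_available_congestion_control")


def parse_available(text: str) -> List[str]:
    """Split the space-separated algorithm list from the /proc file."""
    return text.split()


def available_algorithms() -> List[str]:
    """Algorithms the running Linux kernel offers (empty list elsewhere)."""
    try:
        return parse_available(AVAILABLE.read_text())
    except OSError:
        return []


def current_algorithm(sock: socket.socket) -> Optional[str]:
    """Congestion control algorithm in effect for this TCP socket."""
    if not hasattr(socket, "TCP_CONGESTION"):
        return None  # the option only exists on Linux
    try:
        # 16 bytes is enough for the kernel's algorithm name.
        raw = sock.getsockopt(socket.IPPROTO_TCP, socket.TCP_CONGESTION, 16)
    except OSError:
        return None
    return raw.split(b"\0", 1)[0].decode()


def prefer_bbr(sock: socket.socket) -> Optional[str]:
    """Request BBR for a TCP socket if offered; otherwise keep the default."""
    if hasattr(socket, "TCP_CONGESTION") and "bbr" in available_algorithms():
        try:
            sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_CONGESTION, b"bbr")
        except OSError:
            pass  # e.g. not in tcp_allowed_congestion_control for this user
    return current_algorithm(sock)


if __name__ == "__main__":
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        print("available:", available_algorithms())
        print("in use:", prefer_bbr(s))
```

Setting the option per socket only affects that one server application, which is less intrusive than changing the system-wide default.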

And yes, many services today run over UDP: QUIC, for example, used by YouTube and others, many VPNs and, of course, real-time voice services. So how well do they cope with the variable channel and packet loss? Well, I guess that will require a number of further posts.

Are you able to detect if the system uses any PEPs?

Hi Glen,

Hm, based on the huge throughput difference between CUBIC and BBR, I would speculate that they don’t use a performance-enhancing proxy. But I haven’t looked for signs of it.

There are different kinds of PEPs, but none of them would seem to do any good in Starlink’s scenario, i.e. one dominated by packet loss. Latency is very small, so no PEP is required. The Snoop PEP aims at preventing TCP receive windows from becoming too small. That’s also not a problem, as even with the slower Cubic, the TCP receive window (green line) is never reached. And D-Proxy needs to be deployed on both sides, so that’s not an option for Starlink either. So no, probably not 🙂

Martin