I'm always a bit shocked when I hear people say that “Google and others track you anyway on your smartphone and there is nothing that can be done about it”. I sense a certain frustration, and not only on the side of the person making the statement. But I obviously beg to differ. As this comes up quite frequently, I decided to put together a blog post that I can refer to, listing the things I do on my Android-based device to keep my private data as private as possible.

The Three Cornerstones to Privacy

In essence, my efforts to keep my private data private are based on three cornerstones:

- As few apps on the device as possible that communicate with servers on the Internet without my consent.

- Allowing access to private information such as location, calendar entries, the address book, etc. to specific apps while blocking access for all others by default.

- Preventing apps that I would like to use from communicating with servers on the Internet. Amazon's Kindle reader app is a prime example. It's a good app for reading books but if left on its own it's far too chatty for my taste.

In some cases, implementing these cornerstones is straightforward while other things require a more technical approach. The rest of this blog entry looks at how I put them into practice.

CyanogenMod

For a number of the blocking and monitoring approaches described below it's necessary to get root rights on the device. This is nothing bad and there's a difference between rooting and jailbreaking as I described in this blog post. One way to conveniently get root rights is to install an alternative Android OS on the device. CyanogenMod is my distribution of choice as it is mainstream enough for good support and stability and has no Google apps installed by default. As a user, I can then decide to install only those Google apps I require, such as the Google Play store. Apart from the app store, the Play Services framework and the Google keyboard there's little else Google-specific I have installed. Sure, there's additional stuff in the basic OS that wants to talk to Google servers but these parts can also be silenced as shown below.

Privacy Guard

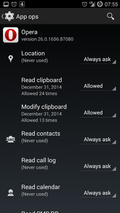

By default, CyanogenMod ships with the 'Privacy Guard' feature, which makes it possible to restrict access on a per-app basis to lots of different sources of private information such as the calendar, the address book, the GPS receiver, etc. So even if an app requires access to the address book to install properly, access can still be removed later on. A good example is the app of the German railway company, which wants to have access to my address book and my location so it can help me find nearby stations more easily. It's a noble goal but I don't want it to have access to my private data. With Privacy Guard restricting access the app still works, but it only sees an empty address book and doesn't get a position fix when it asks for one, and it does so without crashing or acting up in strange ways. In practice I've configured Privacy Guard to ask me when newly installed apps want to access any kind of private information. I can then allow or deny access either temporarily or permanently, so I won't be bothered by further requests in the future. Also, I went through the list of already installed apps and removed access rights for many of them to information sources I don't want those apps to have access to.
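The behavior described above (ask by default, and hand back empty data instead of an error when access is denied) can be modeled in a few lines. The following is only a toy sketch of the idea, not how Privacy Guard is actually implemented; the class, the app names and the resource names are all made up for illustration.

```python
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    DENY = "deny"
    ASK = "ask"


class PermissionPolicy:
    """Toy per-app policy (hypothetical, not CyanogenMod code)."""

    def __init__(self, default=Decision.ASK):
        self.default = default
        self.rules = {}  # maps (app, resource) to a Decision

    def set_rule(self, app, resource, decision):
        self.rules[(app, resource)] = decision

    def read(self, app, resource, data, ask_user=lambda app, resource: False):
        """Return the data if access is allowed, otherwise an empty list."""
        decision = self.rules.get((app, resource), self.default)
        if decision is Decision.ASK:
            # No stored rule: let the user decide (defaults to "no")
            allowed = ask_user(app, resource)
        else:
            allowed = decision is Decision.ALLOW
        # A denied read yields an empty result instead of raising an error,
        # so the calling app keeps working
        return list(data) if allowed else []


if __name__ == "__main__":
    policy = PermissionPolicy()
    policy.set_rule("rail_app", "contacts", Decision.DENY)
    print(policy.read("rail_app", "contacts", ["Alice", "Bob"]))
```

Returning an empty list rather than an error is the important design choice here: the app cannot tell the difference between "no access" and "nothing there", which is why it keeps running normally.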

Using an Alternative Web Browser

Most people just use Google's default Chrome browser on an Android device and are thus freely sharing everything they do on the web from their mobile device with Google. And yet it is so easy to install an alternative browser such as Opera or Firefox. Personally I prefer Opera even though it is not open source. But at least it does not talk to Google all the time and it has a great text-reflow feature that I miss in all the other alternatives I've tried so far.

Using an Alternative Search Engine

Most web browsers, even alternative ones, use Google for web searches by default, giving Google yet another way to collect private information about users. But it's easy to use other search engines such as DuckDuckGo, Yahoo/Bing, etc. I'm still giving away private information through my search queries but at least it ends up in other hands.

Blocking Unwanted Communication

Despite using Privacy Guard, alternative web browsers and search engines, there's still a lot of communication going on between apps and servers of Google, Amazon and others. The only way to control this, short of not installing those apps, is to block communication to those servers. A convenient way to do this is via the 'hosts' file. Modifying the 'hosts' file requires a terminal program and root access, both of which come packaged with CyanogenMod. I've put my hosts file and scripts to activate and deactivate blocking on GitHub so you don't have to start from scratch. Have a look at this blog post for details. With this in place there's very little traffic going to servers I don't want it to reach, and further down in the post I describe how I occasionally check that this is still the case.
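To illustrate the principle: a 'hosts' file blocks a server by mapping its name to an address that leads nowhere, typically the loopback address. Below is a minimal Python sketch that generates such entries and checks whether a name is covered. The host names are placeholders and are not taken from my actual hosts file, and the function names are made up for this example. On Android the file typically lives at /system/etc/hosts, which is why root access is needed to replace it.

```python
# Placeholder names for illustration only, not real blocking targets
BLOCKED_HOSTS = ["tracker.example.com", "ads.example.net"]


def build_hosts(blocked, sink="127.0.0.1"):
    """Return hosts-file text that resolves each blocked name to `sink`."""
    lines = ["127.0.0.1 localhost"]
    # Sort and de-duplicate so the generated file is stable
    for name in sorted(set(blocked)):
        lines.append(f"{sink} {name}")
    return "\n".join(lines) + "\n"


def is_blocked(hosts_text, name):
    """Check whether `name` is mapped to a loopback or null address."""
    for line in hosts_text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments
        if not line:
            continue
        address, *names = line.split()
        if name in names and address in ("127.0.0.1", "0.0.0.0"):
            return True
    return False


if __name__ == "__main__":
    print(build_hosts(BLOCKED_HOSTS), end="")
```

Keeping the list of names separate from the generated file makes it easy to switch between a blocking and a non-blocking version, which is roughly what activate and deactivate scripts need to do.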

GPS-only Location

While it is admittedly convenient to send information about Wi-Fi networks at my location and the current Cell ID to Google's location services for a quick location fix, it also allows Google to track me. Yes, they say the data is processed anonymously but I still don't like it. And I don't have to, because GPS-only location provides a quick location fix as well these days. Unfortunately, some devices use Google's Secure User Plane Location (SUPL) server and include my subscriber ID (IMSI) and location information in the request. Many devices, however, use other services to help the GPS chip find the satellites more quickly, and these seem to be less nosy. For details have a look at the blog posts here and here.

Owncloud for Synchronization

Naturally I don't want to share my address book and calendar with Google, Microsoft or any other big company for that matter. As a consequence, I used to have only a non-synchronized calendar on my PC and an address book on a smartphone. And then Owncloud came along and made me make peace with 'the cloud', as my cloud services are at home. Since setting up an Owncloud server at home I've enjoyed calendar and address book synchronization between all my devices without the need to share my data with a cloud service provider.

Open Street Maps

Yes, and as you might have guessed by now, I also don't want Google to know where I'm going. I thus do not use Google Maps for street or car navigation. Instead I use Open Street Maps for Android (Osmand). It's a great app and I've used it for car navigation for the past two years now. Better still, all maps are on the device so my smartphone doesn't have to frequently download map data from the web while driving. The downside is that I don't have traffic jam notifications but I'm willing to trade that for my privacy.

Mobile eMail

Needless to say, Google is not my email provider either. Instead I use a medium-sized German company for the purpose and Thunderbird on the PC. On my Android smartphone I use the open source K9 email program to manage my emails. It's forked from Google's email program and works pretty much the same way, except it's not talking to Google of course.

Music and Videos On My Mobile

Again, I prefer the open source VLC player to other pre-installed products, even to CyanogenMod's Apollo. It plays just about any audio and video format I throw at it without the need to talk to anyone on the web.

Seeing is Believing

And finally, it's important to check what kind of unwanted communication is still going on in the background after having taken the steps above. The results can then be used to update the 'hosts' file or to take some further measures. Tracing IP traffic to and from the device can be done in several ways. One way is to use a Wi-Fi access point and connect it to the Internet via the Ethernet socket of a PC which is configured for Internet sharing. With Wireshark on the PC, all traffic from the smartphone via the Wi-Fi access point can then be monitored. Another approach is to use a Raspberry Pi as a Wi-Fi access point and then use tcpdump running on the Pi and Wireshark running on a PC that is also connected to the network to inspect the phone's data exchange. Another interesting option is to run tcpdump directly on the smartphone and inspect the resulting dump file with Wireshark on a PC. This way, one can even collect IP packets that are transferred over the cellular interface.
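Wireshark is the right tool for a close look, but for a quick overview of which servers the phone talked to, a dump file can also be summarized with a short script. The sketch below is an assumption-laden example rather than part of my setup: it only reads the classic pcap file format (not pcapng), it only understands Ethernet-framed IPv4 packets, and the function name is made up. Captures taken on other interface types may use a different link-layer framing, which the script rejects rather than guesses at.

```python
import socket
import struct
import sys
from collections import Counter

LINKTYPE_ETHERNET = 1  # link-layer type value for Ethernet in pcap files


def count_destinations(stream):
    """Count IPv4 destination addresses in a classic pcap byte stream."""
    header = stream.read(24)
    if len(header) < 24:
        raise ValueError("not a pcap file: header too short")
    magic = header[:4]
    # The magic number reveals the byte order used by the writer
    if magic in (b"\xd4\xc3\xb2\xa1", b"\x4d\x3c\xb2\xa1"):
        endian = "<"
    elif magic in (b"\xa1\xb2\xc3\xd4", b"\xa1\xb2\x3c\x4d"):
        endian = ">"
    else:
        raise ValueError("unsupported capture format (pcapng?)")
    linktype = struct.unpack(endian + "I", header[20:24])[0]
    if linktype != LINKTYPE_ETHERNET:
        raise ValueError(f"unsupported link type {linktype}")

    counts = Counter()
    while True:
        record = stream.read(16)  # per-packet record header
        if len(record) < 16:
            break
        _sec, _frac, incl_len, _orig_len = struct.unpack(endian + "IIII", record)
        frame = stream.read(incl_len)
        if len(frame) < incl_len:
            break  # truncated file
        # 14-byte Ethernet header; EtherType 0x0800 marks IPv4
        if len(frame) < 34 or frame[12:14] != b"\x08\x00":
            continue
        # The IPv4 destination sits at offset 16 of the IP header
        counts[socket.inet_ntoa(frame[30:34])] += 1
    return counts


if __name__ == "__main__" and len(sys.argv) > 1:
    with open(sys.argv[1], "rb") as dump:
        for address, packets in count_destinations(dump).most_common():
            print(f"{packets:6d}  {address}")
```

Called with the name of a dump file as its only argument, the script prints one line per destination address, busiest first. Addresses that show up unexpectedly are candidates for a closer look in Wireshark and, once the host name behind them is known, for the 'hosts' file.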

Final Words

Purists will now argue that even when all these measures are taken, things are far from perfect. I tend to agree, but there is far less of my private data being extracted from my smartphone without my consent or, at least, without my awareness.
