In the past I have reported that my Nokia N95 had the nasty habit of rebooting spontaneously while I was using Opera Mini and moving from cell to cell, e.g. while traveling on the train or in the metro. How often the phone rebooted seemed to depend on the country, i.e. on which mobile network I was using and therefore on which network vendor had supplied the infrastructure. This week I noticed that my N95 no longer reboots in the metro. That is interesting, since I haven’t updated the software and the Opera Mini version is still the same. So it seems the network operator, Orange France in this case, must have upgraded the network software or changed some parameters. In any case, mobile Internet use has become much more practical again for me in France. Thanks to whoever fixed it.

Carnival of the Mobilists 130 at London Calling

Another week, another Carnival of the Mobilists. It’s my great pleasure to point you to Andrew Grill’s blog, where you can find the latest edition of the Carnival with a round-up of what has happened in the blog space on everything mobile over the past week. So don’t hesitate, head over and enjoy.

The Symbian Foundation: Will It Make A Difference For Developers?

A lot has been written lately about Nokia buying the Symbian shares of Sony Ericsson and others and creating the Symbian Foundation to release the OS as open source in the future. Many people become ecstatic when they hear ‘Open Source’, as it seems to be a synonym for success and the only way to go. However, there are different kinds of open source approaches and usage licenses, so it is worth considering what developers will be able to do with Open Symbian that they can’t do today.

The big difference to Linux, which is also open source and has attracted many individuals and companies to start their own distributions, is that I think the same is unlikely to happen with Open Symbian in the mobile space. In the PC world, the hardware is well standardized, so people can easily modify the kernel, compile it and run it on their machines. In the mobile world, however, hardware is very proprietary, so a Linux-style ecosystem of independent distributions is unlikely to emerge here, no matter how open the Symbian OS becomes. Therefore, an open Symbian is mainly interesting for hardware manufacturers, as they will have easier access to the OS and can customize it more easily to their hardware. That’s a long way from ‘I don’t like the current OS distribution on my mobile, so I download a different one from the Internet and install it on my phone’. But maybe we are lucky and open sourcing the OS will allow application programmers to use the OS more effectively and extend it in ways not possible today due to the lack of transparency.

For more thoughts on what the Symbian Foundation might or might not change in practice, head over to Michael Mace and AllAboutSymbian; they have great insights on their blogs from a lot of different angles.

BlackBerry Impressions

Location: a restaurant in Miami Beach, and I am surrounded by BlackBerry and a couple of Danger hiptop users! And no, these people are not the typical business users who, only a short while ago, were practically the only ones carrying Berries.

I noticed a similar trend at the conference I attended in Orlando last week. Most people had a BlackBerry with them and nothing else, a bit of an American monoculture. About half of them had a company Berry, while the others had bought the device themselves because they see the usefulness of mobile eMail. Everyone I asked also used the device for mobile web access, and most of them used Facebook. And we are not talking about the teens and twenty-somethings of Miami Beach here, but about mothers and fathers in their thirties and forties.

Two very different and very interesting directions for the Berries and the mobilization of the Internet!

Can 300 Telecom Engineers Share a 1 Mbit/s Backhaul Link?

I am sitting in a Starbucks in Miami right now after an intensive conference week and am starting to reflect on what I saw and noted during the week. One of the straightforward lessons is that conference organizers, especially in the high-tech sector, have to ask about the details of the Internet connectivity of the venue they want to use. Just having Wi-Fi in a place is not enough; capacity on the backhaul link is even more important. In our case, 300 people were left without a usable Internet connection for the week because the backhaul was hopelessly underdimensioned for the load. When I arrived on Sunday, as one of the first attendees, the best I got was about half a megabit per second. During the week it was a few kbit/s at best. eMails just trickled in, and using the Internet connection for voice calls was impossible.

While some might see this as just an inconvenience and argue that you should concentrate on the conference anyway, there are others, like me, who need to answer a couple of eMails and call people throughout the day to keep normal business going. So instead of making free calls, I, like many others, had to fall back on my mobile phone and pay a dollar or euro or more per minute in roaming charges. That extra cost to the company, multiplied by 300, is significant. Last year, at the same conference but a different venue, there was an 8 Mbit/s backhaul link and things ran a lot smoother. But I guess by next year even that will not be good enough anymore when 300 engineers arrive.
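To put these numbers into perspective, here is a back-of-the-envelope sketch in Python. It assumes, purely for illustration, that all 300 attendees are active at the same time and share the link evenly, and that one voice call needs about 64 kbit/s (the payload rate of a G.711 codec, before packet overhead). Real traffic is burstier and codecs vary, so treat the output as a rough indication only.

```python
"""Back-of-the-envelope backhaul arithmetic for a conference venue.

Assumptions (illustrative, not measured): all attendees are active at once
and share the link evenly; one voice call needs ~64 kbit/s (G.711 payload
rate, ignoring IP/UDP/RTP overhead).
"""

ATTENDEES = 300
VOICE_CALL_KBPS = 64  # assumed per-call rate, hypothetical round figure


def per_user_kbps(backhaul_mbps: float, users: int) -> float:
    """Even share of the backhaul per user in kbit/s (1 Mbit/s = 1000 kbit/s)."""
    return backhaul_mbps * 1000 / users


def max_voice_calls(backhaul_mbps: float, call_kbps: float = VOICE_CALL_KBPS) -> int:
    """How many simultaneous calls fit if nothing else used the link."""
    return int(backhaul_mbps * 1000 // call_kbps)


if __name__ == "__main__":
    scenarios = {
        "this year, best case on Sunday": 0.5,
        "nominal 1 Mbit/s link": 1.0,
        "last year's venue": 8.0,
    }
    for label, mbps in scenarios.items():
        print(
            f"{label} ({mbps} Mbit/s): "
            f"{per_user_kbps(mbps, ATTENDEES):.1f} kbit/s per attendee, "
            f"room for at most {max_voice_calls(mbps)} concurrent calls"
        )
```

Under these assumptions, even last year's 8 Mbit/s link leaves less than 30 kbit/s per attendee at full load, which supports the guess that it will not be good enough much longer.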

P.S.: Good thing I had my AT&T prepaid SIM card. With the MediaNet add-on I could access the net and get to my eMails via AT&T’s EDGE network. Definitely not at multi-megabit speed, but a lot faster than over the hotel’s Wi-Fi.

How Many Gold Subscribers Can You Handle?

While well-dimensioned 3G networks offer fast Internet access today, some somewhat underdimensioned networks show the first signs of overload. Some industry observers argue that the answer is to introduce tiered subscriptions, i.e. the user gets a guaranteed or higher bandwidth if he pays more. But I am not sure this will work well in practice, for two reasons. First, when some users are preferred over others in already overloaded cells, the experience for the majority gets even worse. Second, if such higher-priced subscriptions become more popular because the standard service is no good, at some point it won’t be possible to satisfy even these subscribers. So gold subscriptions just push the problem out a bit in time but otherwise don’t help a lot. There is just no way around sufficient capacity, or your subscribers will migrate to network operators who have done their homework. So instead of only investing in QoS subscription management, I would also invest in analysis software that reports which cells are often overloaded. That gives the operator the ability to react quickly and increase the capacity in the areas covered by such cells. Having said all of this, what do you think?
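The two objections above can be illustrated with a toy model in Python. It assumes, hypothetically, a cell with a fixed usable capacity, strict priority for gold users (each first gets a fixed guaranteed rate) and an even split of whatever is left among standard users. Real schedulers are far more sophisticated, and the capacity, user count and gold rate below are made-up numbers chosen only to show the trend.

```python
"""Toy model of tiered ("gold") subscriptions in a single loaded cell.

Assumptions: fixed usable cell capacity, strict priority for gold users,
leftover capacity shared evenly by standard users. All numbers are hypothetical.
"""
from dataclasses import dataclass


@dataclass
class CellShare:
    gold_each: float          # Mbit/s delivered to each gold user
    standard_each: float      # Mbit/s delivered to each standard user
    gold_guarantee_met: bool  # can the promised gold rate be honoured?


def split_cell(capacity: float, n_gold: int, n_standard: int, gold_rate: float) -> CellShare:
    """Serve gold guarantees first, then share the remainder evenly."""
    gold_demand = n_gold * gold_rate
    if gold_demand > capacity:
        # Even the paying customers cannot get what they were promised.
        return CellShare(capacity / n_gold, 0.0, False)
    leftover = capacity - gold_demand
    standard_each = leftover / n_standard if n_standard else 0.0
    return CellShare(gold_rate if n_gold else 0.0, standard_each, True)


if __name__ == "__main__":
    CAPACITY_MBPS = 5.0   # hypothetical usable capacity of a busy 3G cell
    ACTIVE_USERS = 40     # hypothetical number of simultaneously active users
    GOLD_RATE_MBPS = 0.5  # hypothetical guaranteed rate per gold subscriber

    for n_gold in range(0, ACTIVE_USERS + 1, 5):
        share = split_cell(CAPACITY_MBPS, n_gold, ACTIVE_USERS - n_gold, GOLD_RATE_MBPS)
        print(
            f"{n_gold:2d} gold users: gold {share.gold_each:.3f} Mbit/s each, "
            f"standard {share.standard_each:.3f} Mbit/s each, "
            f"guarantee met: {share.gold_guarantee_met}"
        )
```

With these numbers, every gold user added lowers the standard rate, and beyond ten gold users the 0.5 Mbit/s promise can no longer be kept. That is the "push the problem out a bit in time" effect; only more capacity improves every row.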

3 UK Data Roaming Performance

Here I am, back in Italy and again using my 3 UK SIM for Internet access, since there are no data roaming charges between 3 networks in different countries. It is very interesting, and also a bit depressing, to see how performance varies throughout the day. Sunday morning at 8 am seems to be a pretty quiet time, and I easily got 1.5 Mbit/s in the downlink. Evenings seem to be peak time, with data rates dropping below 300 kbit/s and long page loading times due to lots of lost packets. I am pretty sure it’s not cell overload, since the Wind UMTS network at the same locations easily gives me 1 Mbit/s with no packet loss in direct comparison. So the bottleneck is either the link back to the home network or the GGSN in the UK.
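Why do lost packets hurt so much more than the raw data rate suggests? A common rule of thumb is the Mathis et al. approximation for the steady-state throughput of a single TCP connection limited by random packet loss: throughput ≈ (MSS / RTT) × √(3/2) / √p. The Python sketch below evaluates it. The 1460-byte segment size, the 300 ms round-trip time (a hypothetical value for traffic tunnelled back to a home GGSN in another country, not measured here) and the loss rates are assumptions for illustration. Browsers open several connections in parallel, so page-level throughput can be higher than one connection's ceiling.

```python
"""Mathis et al. approximation of loss-limited TCP throughput.

throughput ~= (MSS / RTT) * sqrt(3/2) / sqrt(p)

Only a rough steady-state estimate for one TCP connection whose rate is
limited by random packet loss. All inputs below are assumptions.
"""
import math


def mathis_kbps(mss_bytes: int, rtt_s: float, loss_rate: float) -> float:
    """Approximate throughput of one TCP connection in kbit/s."""
    if not 0 < loss_rate < 1:
        raise ValueError("loss_rate must be between 0 and 1 (exclusive)")
    bits_per_second = mss_bytes * 8 / rtt_s  # one segment per round trip
    return bits_per_second * math.sqrt(1.5) / math.sqrt(loss_rate) / 1000


if __name__ == "__main__":
    MSS_BYTES = 1460  # typical Ethernet-derived MSS, assumed
    RTT_S = 0.3       # hypothetical round trip via the home network in the UK
    for loss in (0.001, 0.01, 0.03):
        rate = mathis_kbps(MSS_BYTES, RTT_S, loss)
        print(f"loss {loss:.1%}: ~{rate:.0f} kbit/s per connection")
```

Under this model, going from 0.1% to 3% loss lowers the per-connection ceiling by a factor of √30 ≈ 5.5, which illustrates how packet loss somewhere along the path can slow page loads even when the radio link itself has spare capacity.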

While it’s good to see the networks being used and affordable data roaming in place, I’d appreciate sufficient capacity in the core network.

Putting The Hotel TFT To Good Use

Since I travel a lot, I often stay in hotels. One thing in hotel rooms I could live without is the TV set, as I have neither the desire nor the time to watch anything anyway. In recent years, however, I’ve noticed that good old cathode ray tube TVs are giving way to TFT TVs. Last week I took a closer look and noticed that these TVs usually also have a VGA or DVI input. Excellent, now I can finally put them to good use and connect them to my notebook as a second screen. All that is needed is a VGA or DVI cable, which I will take with me from now on. The picture on the left shows what my typical hotel setup looks like: screen 1, screen 2 and a 3.5G HSPA modem for Internet connectivity. Almost as good as at home 🙂

S60 Power Measurements

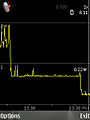

In a comment to a recent blog entry, somebody left a link to an interesting S60 utility that records power consumption and other technical information while Nokia Nseries and other S60-based devices execute programs. While it runs in the background, one can perform actions and see their impact on power consumption. The picture on the left, for example, shows power consumption in the different states of a mobile web browsing session.

The left side of the graph shows power consumption while a web page is loaded, i.e. while the device is in Cell-DCH / HSPA state. Power consumption is almost 2 watts during this time. Once data transfer stops, the network puts the mobile into Cell-FACH state, which requires much less power. However, the 0.8 watts consumed in this state are still significant. After about 30 seconds of inactivity the network releases the physical bearer and only maintains a logical connection. In this state, power consumption drops to about 0.2 watts, which is mostly used for driving the display and the backlight. When the device is locked and the backlight is switched off, the graph drops almost to the bottom, i.e. to less than 0.1 watts.
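The measured power levels make it easy to estimate how much energy a single page view costs, including the Cell-FACH "tail" after the download has finished. The Python sketch below multiplies power by time for each state. The power figures are the ones described above (using 0.1 W as an upper bound for the locked state) and the 30-second FACH phase follows the inactivity timer mentioned above; the other durations (10 s of page loading, one minute of reading, two minutes locked) are hypothetical.

```python
"""Energy of one mobile web page view, split by radio/device state.

Power levels: values read from the S60 power graph described in the text
(0.1 W used as an upper bound for the locked state). Durations: hypothetical,
except the ~30 s Cell-FACH phase before the bearer is released.
"""
from typing import List, Tuple

# (state, power in watts, duration in seconds)
Timeline = List[Tuple[str, float, float]]

PAGE_VIEW: Timeline = [
    ("Cell-DCH / HSPA, page loading", 2.0, 10),               # load time assumed
    ("Cell-FACH, inactivity tail", 0.8, 30),
    ("bearer released, display and backlight on", 0.2, 60),   # reading time assumed
    ("device locked, backlight off", 0.1, 120),               # idle time assumed
]


def energy_by_state(timeline: Timeline) -> List[Tuple[str, float]]:
    """Energy per state in joules (watts x seconds)."""
    return [(state, watts * seconds) for state, watts, seconds in timeline]


if __name__ == "__main__":
    per_state = energy_by_state(PAGE_VIEW)
    total = sum(joules for _, joules in per_state)
    for state, joules in per_state:
        print(f"{state:45s} {joules:5.1f} J ({joules / total:.0%})")
    print(f"{'total':45s} {total:5.1f} J")
```

With these assumed durations, the 30-second FACH tail (24 J) costs more energy than the 10-second download itself (20 J), which shows how much the network's inactivity timer settings can influence battery life.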

An excellent tool to gain a better understanding of power requirements of different actions and processes!

Wireless and Mission Critical

I am on the road quite often and, as most of you have figured out by now, a heavy user of 3G networks for Internet access. While I generally like the experience, network outages like the recent two-and-a-half-day nationwide blackout of Internet access in the Vodafone Germany network send shivers down my spine. After all, we are not talking about a third-class operator but one that claims to be a technology leader in the sector. As I use Vodafone Internet access a lot, I was glad I was only impacted for half a day, having been in a DSL safe haven for the rest of the time. If I had been on the road, however, this would have been a major disaster for me.

I wonder if the company that delivered the equipment that paralyzed the Vodafone network for two and a half days has to pay for the damage caused!? If it’s a small company, such a prolonged outage with millions of euros in lost revenue can easily put it out of business. And that doesn’t even take into account the damage to the image of all parties involved and the financial loss of companies relying on Vodafone for Internet access. The name of the culprit was not released to the press, but those working in the industry know very well what happened. Hard times on the horizon for certain marketing people…

Vodafone is certainly not alone in facing such issues, as I occasionally observe connection problems with other network operators as well. These, however, are usually short, lasting from a couple of minutes to an hour or so. Bad enough.

To me, this shows several things:

- There is not a lot of redundancy built into the network.

- Disaster recovery and upgrade procedures are not very well thought through, as otherwise such prolonged outages would not happen.

- Short outages might be caused by software bugs and the resulting device resets.

- I think we might have reached a point where the capacity of core network nodes has grown so large that the failure of a single device triggers a nationwide outage.

So maybe operators should start thinking in earnest about reversing the trend a bit and consider decentralization again to reduce the impact of fatal equipment failures. And maybe price should not be the only criterion in the future. Higher reliability and credible disaster recovery mechanisms that don’t just work on paper might be worth something as well. An opportunity for network vendors to distinguish themselves?
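The trade-off between centralization and decentralization can be made concrete with two textbook formulas, sketched here in Python. The first gives the share of subscribers hit when one of n equally loaded core nodes fails; the second gives the availability of k redundant nodes in parallel, under the strong assumption that their failures are independent. The 99% single-node availability is a hypothetical figure. A software bug shared by all redundant nodes violates the independence assumption, so real-world gains from redundancy can be much smaller.

```python
"""Blast radius and redundancy, back of the envelope.

Assumptions (hypothetical): subscribers are spread evenly across core nodes,
and redundant nodes fail independently of each other.
"""

HOURS_PER_YEAR = 365 * 24


def affected_share(n_nodes: int) -> float:
    """Share of subscribers affected when one of n equally loaded nodes fails."""
    if n_nodes < 1:
        raise ValueError("need at least one node")
    return 1 / n_nodes


def parallel_availability(node_availability: float, copies: int) -> float:
    """Availability of `copies` redundant nodes, any one of which suffices."""
    return 1 - (1 - node_availability) ** copies


if __name__ == "__main__":
    for n in (1, 4, 8):
        print(f"{n} core node(s): one failure hits {affected_share(n):.1%} of subscribers")

    single = 0.99  # hypothetical availability of a single node
    for copies in (1, 2):
        a = parallel_availability(single, copies)
        downtime_h = (1 - a) * HOURS_PER_YEAR
        print(f"{copies} node(s) in parallel: availability {a:.4%}, "
              f"~{downtime_h:.1f} h downtime/year")
```

Under these assumptions, a second independent node cuts expected downtime by a factor of 100, while spreading the load over eight regional nodes shrinks the share of subscribers hit by any single failure to 12.5%.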