Ever since I got my first Raspberry Pi I wondered how much power it really requires in my standard configuration, i.e. only with an Ethernet cable and an SD card inserted. Recently I got myself a USB power measurement tool to find out. As you can see in the picture on the left, the Raspberry Pi draws a current of 400 mA with the OS up and running and being idle. With a measured USB voltage of 5.4 V, the resulting power consumption is 2.16 Watts. At an efficiency of 90% of the power adapter itself, the total power consumption is therefore around 2.4 Watts.
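
The arithmetic behind these figures can be re-run with a few lines of shell. This is only a sketch: `pi_power` is a made-up helper name, and the 90% adapter efficiency is the assumption stated above, not a measured value.

```shell
#!/bin/sh
# Sketch: recompute the power figures from the measured values.
# pi_power is a hypothetical helper name. Arguments: current in mA,
# voltage in V and the assumed adapter efficiency in percent.
pi_power() {
    awk -v ma="$1" -v v="$2" -v eff="$3" 'BEGIN {
        dc = ma / 1000 * v          # power delivered over USB, in watts
        wall = dc / (eff / 100)     # power drawn at the wall socket, in watts
        printf "%.2f %.2f\n", dc, wall
    }'
}

# Values from the measurement: 400 mA at 5.4 V, 90% efficiency assumed
pi_power 400 5.4 90
```

The first number is the power on the USB side, the second the estimate at the wall socket.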

Author: Martin

Automating The Update of the Android ‘Hosts’ File to Block Ads And Other Stuff

Recently, I wrote two posts (see here and here) on how to use the Android/Linux 'hosts' file to block advertising and unwanted automatic OS downloads. Since then I've taken the approach further and have put the 'hosts' file for blocking on Github from where it can be downloaded by anyone.

To simplify usage I've put together a couple of scripts:

One script that runs in a terminal app on Android can be used for occasional updates of the blocking list. The script would have been a no-brainer but unfortunately the Android Busybox 'wget' implementation does not support https. Github, however, rightly insists on using https. So I had to find a different solution. The solution I found is to use 'curl'. Fortunately, the curl developers provide binaries for a number of operating systems, including Android here. The zip file for Android contains 'curl' and 'openssl' which have to be copied to '/system/bin' on a rooted Android device. The script to download the hosts file and copy it to '/etc/hosts' then looks as follows:

mount -o rw,remount /system

cd /etc

mv hosts.blocked hosts.blocked.bak

curl -k https://raw.githubusercontent.com/martinsauter/Mobile-Block-Hosts-List/master/hosts.blocked >hosts.blocked

cp hosts.blocked hosts

mount -o ro,remount /system

In addition, 'ho.sh' (hosts — open) puts the original 'hosts' file back in place to disable blocking while 'hb.sh' (hosts — block) overwrites the 'hosts' file with a local copy of the blocking list to switch blocking back on again.
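
The two helper scripts are not listed here, so the following is only a sketch of what they could look like, written as two functions. `HOSTS_DIR`, the file name `hosts.orig` and the function names are assumptions for illustration; `hosts.blocked` is the file fetched by the download script above.

```shell
#!/bin/sh
# Sketch of the logic behind hb.sh and ho.sh, written as two functions.
# Assumptions: HOSTS_DIR, hosts.orig and the function names are hypothetical.
HOSTS_DIR="${HOSTS_DIR:-/etc}"

# hb.sh: switch blocking on by copying the local blocking list over 'hosts'
hosts_block() {
    cp "$HOSTS_DIR/hosts.blocked" "$HOSTS_DIR/hosts"
}

# ho.sh: switch blocking off by restoring a saved copy of the original file
hosts_open() {
    cp "$HOSTS_DIR/hosts.orig" "$HOSTS_DIR/hosts"
}
```

On the Android device each call would have to be wrapped in the same two remount commands as in the download script, since '/system' is normally mounted read-only.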

Simple, effective and quick!

Still Early Days for TIM’s LTE Network in Italy

I recently traveled to Italy and since my home network operator has an LTE roaming agreement with TIM, I could of course not resist trying out their LTE network. While many network operators started with LTE quite a while ago and are now in the process of optimizing their networks, it seems TIM is not as far down that road yet. Both in Udine and Mestre I got LTE coverage from a 10 MHz carrier in the 800 MHz band. When making phone calls, the LTE network would always send my device to the GSM network and not to the UMTS network that is also available. This is quite a shame since it increases the call setup time and also denies me data connectivity during the call. Hm, I wonder if they will have optimized their network the next time I have a look because this looks like early days.

VLC on Android for Down to Earth Music Listening

While I like music streaming when I'm at home, things are quite different when it comes to listening to music on my smartphone. Here, I don't have a large library of individual tracks ripped from CDs or other sources as it would be too much work… Also, I don't use online streaming services while I'm on the go as the amount of data I have on my mobile contract per month is limited, the battery goes flat much too quickly and there are spots on my daily commute where I don't have coverage. So what I have on my mobile are recordings of the radio streams I like, each spanning several hours. The player to play these must only do one thing: play them. No album art, no database lookups, just play and play them instantly.

Unfortunately, pretty much all native players I've come across, and especially the CyanogenMod Apollo player, have problems with my recordings. Apollo takes at least 10 seconds before it starts playing and I have no idea what it does in the meantime. So I went looking for another player app that meets my needs and found that there's a large assortment, but none to my (privacy) taste. But then I stumbled over a player I use on my notebook as well that fits perfectly: VLC. It's open source, simple to use, there are no album art lookups or privacy leaks, it plays pretty much any audio and video codec ever invented and it plays my files without any delay. Perfect!

More Background On SUPL, A-GPS, the Almanac and Ephemeris Data

After my previous posts on how to trace and analyze A-GPS SUPL requests (see here and here) I thought I'd also write a quick post with some references to more details on the parameters that are contained in an A-GPS SUPL message. When discussing GPS, two terms are regularly mentioned: the 'Almanac' and the 'Ephemeris data'. Here's a link to some background information on those terms and here's my abbreviated version:

Almanac: This information is broadcast by each GPS satellite and contains rough orbital parameters of each satellite. This information helps a GPS receiver to find other satellites during its startup procedure once it has decoded this information from a downlink signal. Note that this information is NOT contained in a SUPL response as it only gives long term rough orbital parameters that can only be used for satellite search but not for navigation.

Ephemeris: These are the precise orbital parameters of a satellite which are only valid for a short amount of time. While each satellite broadcasts the Almanac of all satellites, a satellite only broadcasts its own Ephemeris data. SUPL responses contain the Ephemeris data of all satellites the SUPL server thinks might be visible at the rough location of the mobile device. I'm not sure, but the list is perhaps sorted in order of certainty, as the satellite IDs in the list were not ordered. I've also run a SUPL request with a cell-id that the SUPL server did not know and as a result the server returned a very long list of Ephemeris data with ordered satellite ID numbers, probably of all the satellites it knew.

And finally, here's a link to additional background information on the individual Ephemeris parameters.

How To Block Software Updates While Traveling On My VPN Access Point

In a previous post I've described how today's smartphones and tablets take Wi-Fi connectivity as an invitation for downloading large amounts of data for software updates and other things without user interaction. This is particularly problematic in Wi-Fi tethering scenarios and when using slow hotel Wi-Fi networks when traveling. But at least in the latter case I can fix things by adding banned URLs in the 'hosts' file on my Raspberry Pi based VPN Wi-Fi Travel Router. After changing the hosts file, a quick restart of the DNS server on the router via 'sudo service dnsmasq restart' is required.

Over time, my hosts file has grown quite a bit since I first started to use it as a line of defense against unwanted advertising, privacy invasions and software downloads. Here's my current list:

127.0.0.1 localhost

#Prevent the device from contacting Google all the time

127.0.0.1 mtalk.google.com

127.0.0.1 reports.crashlytics.com

127.0.0.1 settings.crashlytics.com

127.0.0.1 android.clients.google.com

127.0.0.1 www.googleapis.com

127.0.0.1 www.googleadservices.com

127.0.0.1 clients3.google.com

127.0.0.1 play.googleapis.com

127.0.0.1 www.gstatic.com

127.0.0.1 ssl.google-analytics.com

127.0.0.1 id.google.com

127.0.0.1 clients1.google.com

127.0.0.1 clients2.google.com

#Amazon is really nosy, too…

127.0.0.1 www.amazon.com

127.0.0.1 s.amazon-adsystem.com

127.0.0.1 api.amazon.com

127.0.0.1 device-metrics-us.amazon.com

127.0.0.1 device-metrics-us-1.amazon.com

127.0.0.1 device-metrics-us-2.amazon.com

127.0.0.1 device-metrics-us-3.amazon.com

127.0.0.1 device-metrics-us-4.amazon.com

127.0.0.1 device-metrics-us-5.amazon.com

127.0.0.1 device-metrics-us-6.amazon.com

127.0.0.1 device-metrics-us-7.amazon.com

127.0.0.1 device-metrics-us-8.amazon.com

127.0.0.1 device-metrics-us-9.amazon.com

127.0.0.1 device-metrics-us-10.amazon.com

127.0.0.1 device-metrics-us-11.amazon.com

127.0.0.1 device-metrics-us-12.amazon.com

127.0.0.1 mads.amazon.com

127.0.0.1 aax-us-east.amazon-adsystem.com

127.0.0.1 aax-us-west.amazon-adsystem.com

127.0.0.1 aax-eu.amazon-adsystem.com

#No need for Opera to call home all the time

127.0.0.1 mini5-1.opera-mini.net

127.0.0.1 sitecheck1.opera.com

127.0.0.1 sitecheck2.opera.com

127.0.0.1 thumbnails.opera.com

#Some more 'services' I don't need

127.0.0.1 audioscrobbler.com

127.0.0.1 weather.yahooapis.com

127.0.0.1 query.yahooapis.com

127.0.0.1 platform.twitter.com

127.0.0.1 linkedin.com

#Prevent automatic OS updates for a number of vendors

127.0.0.1 fota.cyngn.com

127.0.0.1 account.cyanogenmod.org

127.0.0.1 mdm.asus.com

127.0.0.1 mdmnotify1.asus.com

127.0.0.1 updatesec.sonymobile.com

#Ad blocking

127.0.0.1 ad8.adfarm1.adition.com

127.0.0.1 googleads.g.doubleclick.net

127.0.0.1 stats.g.doubleclick.net

127.0.0.1 mobile.smartadserver.com

127.0.0.1 www.google-analytics.com

127.0.0.1 pagead2.googlesyndication.com

127.0.0.1 ads.stickyadstv.com

127.0.0.1 pixel.rubiconproject.com

127.0.0.1 t1.visualrevenue.com

127.0.0.1 beacon.krxd.net

127.0.0.1 rtb.metrigo.com

127.0.0.1 c.metrigo.com

127.0.0.1 ad.zanox.com

127.0.0.1 cm.g.doubleclick.net

127.0.0.1 ib.adnxs.com

127.0.0.1 ih.adscale.de

127.0.0.1 ad.360yield.com

127.0.0.1 ssp-csynch.smartadserver.com

127.0.0.1 ad.yieldlab.net

127.0.0.1 dis.criteo.com

127.0.0.1 rtb.eanalyzer.de

127.0.0.1 connect.facebook.net

127.0.0.1 b.scorecardresearch.com

127.0.0.1 sb.scorecardresearch.com

127.0.0.1 ads.newtentionassets.net

127.0.0.1 ak.sascdn.com

127.0.0.1 fastly.bench.cedexis.com

127.0.0.1 probes.cedexis.com

127.0.0.1 x.ligatus.com

127.0.0.1 d.ligatus.com

127.0.0.1 a.visualrevenue.com

127.0.0.1 radar.cedexis.com

127.0.0.1 www.googletagservices.com

127.0.0.1 pubads.g.doubleclick.net

127.0.0.1 prophet.heise.de

127.0.0.1 farm.plista.com

127.0.0.1 static.plista.com

127.0.0.1 video.plista.com

127.0.0.1 tag.yoc-adserver.com
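
As the list keeps growing, a small helper can add new entries without creating duplicates. This is a minimal sketch; `block_host` is a hypothetical function name and the hosts file path is passed in as an optional second argument so it can be tried on a copy first.

```shell
#!/bin/sh
# Sketch: add a blocking entry to a hosts file only if it is not there yet.
# block_host is a hypothetical helper; the file defaults to /etc/hosts.
block_host() {
    name="$1"
    file="${2:-/etc/hosts}"
    # -x matches the whole line, -F treats the entry as a fixed string
    if ! grep -qxF "127.0.0.1 $name" "$file" 2>/dev/null; then
        printf '127.0.0.1 %s\n' "$name" >> "$file"
    fi
}
```

On the router this would have to run with root rights, followed by the dnsmasq restart mentioned above so the new entry takes effect.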

How To Trace An A-GPS SUPL Request

In a previous post I've described my results of tracing an A-GPS SUPL request from my mobile device to the Google GPS location service and the issues I've discovered. In this follow-up post I thought I'd give an overview of how to set up an environment that makes it possible to trace and decode the conversation.

Does Your Device Send SUPL Requests Over Wi-Fi?

The first thing to find out before proceeding is whether a device sends SUPL requests over Wi-Fi at all. It seems that some devices don't and only use a cellular connection as SUPL requests are sent directly from the cellular baseband chip.

To trace SUPL on Wi-Fi there must be a point between the mobile device and the backhaul router to the Internet on which Wireshark or Tcpdump can be run to record the traffic. I've used my Raspberry Pi based VPN Wi-Fi Travel Router for the purpose. If you have an Android based device that is rooted it's also possible to run tcpdump directly on the device. The first screenshot on the left shows a DNS query for supl.google.com followed by an encrypted SUPL request to TCP port 7275 that you should see before proceeding. Note that a SUPL request is only done if the device thinks that the GPS receiver requires the information. A reliable way to trigger a SUPL request on my device was to reboot if I didn't see a SUPL request after starting an application that requests GPS information such as Osmand.
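
To keep the trace small, the capture can be limited to DNS lookups and the SUPL port. The following is a sketch only: the function names are made up, and the interface name `wlan0` and the output file name are placeholders that depend on the setup.

```shell
#!/bin/sh
# Sketch: capture only DNS lookups and SUPL traffic on the router.
# Assumptions: wlan0 and supl-trace.pcap are placeholders, the function
# names are hypothetical.
supl_filter() {
    # Capture filter: DNS (port 53) plus the SUPL TCP port 7275
    echo "port 53 or tcp port 7275"
}

supl_capture() {
    iface="${1:-wlan0}"
    out="${2:-supl-trace.pcap}"
    # -i selects the interface, -w writes raw packets to a file for Wireshark
    sudo tcpdump -i "$iface" -w "$out" "$(supl_filter)"
}
```

Calling `supl_capture` without arguments starts the recording, which can be stopped with Ctrl-C and then opened in Wireshark.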

The Challenge

As the name suggests, the Secure User Plane Location (SUPL) protocol does not send plain text messages. Instead, a Secure Socket Layer (SSL) connection is used to encrypt the information exchange. The challenge therefore is to figure out a way to decrypt the messages. If you are not the NSA, the only chance to do this is to put a proxy between the SUPL client on the mobile device and the SUPL server on the Internet. This splits the single direct SSL connection between client and server into two SSL connections, which makes it possible to decrypt and re-encrypt all messages on the proxy. At first, I hoped I could do this with MitmProxy but this tool focuses on web protocols such as https and would not proxy SUPL connections.

Compiling and Running SUPL-PROXY

After some research I came across SUPL-PROXY by Tatu Mannisto, a piece of open source software that does exactly what I wanted. Written in C, it has to be compiled on the target system and it does so without too much trouble on a Raspberry Pi. The only thing I had to do in addition to what is described in the readme file is to set a path variable after compiling so the executable can find a self-compiled library that is copied to a library directory. In essence the commands to compile supl-proxy and a couple of other binaries and to set the path variable are as follows:

#untar/zip and compile

mkdir supl

cd supl

tar xvzf ../supl_1.0.6.tar.gz

cd trunk

./configure --precompiled-asn1

make

sudo make install

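#now set LD_LIBRARY_PATH. It does not seem to be used on the Pi as it is empty

echo $LD_LIBRARY_PATH

#append /usr/local/lib, adding the ':' separator only if the variable is not empty

LD_LIBRARY_PATH=${LD_LIBRARY_PATH:+$LD_LIBRARY_PATH:}/usr/local/lib

echo $LD_LIBRARY_PATH

export LD_LIBRARY_PATH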

First Test with SUPL-CLIENT

Before proceeding, it's a good idea to check if the supl package is working. This can be done by using SUPL-CLIENT which will send a SUPL request to a SUPL server. The command below uses a network and cell id in Finland:

supl-client --cell=gsm:244,5:0x59e2,0x31b0 --format human

If the request was successful the output will look as follows:

Reference Location:

Lat: 61.469997

Lon: 23.807695

Uncertainty: 59 (2758.0 m)

Reference Time:

GPS Week: 782

GPS TOW: 5879974 470397.920000

~ UTC: Fri Aug 22 10:39:58 2014

Ephemeris: 30 satellites

# prn delta_n M0 A_sqrt OMEGA_0 i0 w OMEGA_dot i_dot Cuc Cus Crc Crs Cic Cis toe IODC toc AF0 AF1 AF2 bits ura health tgd OADA

[…]
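
The 'Reference Time' part of the output can be cross-checked by hand. The sketch below converts the GPS week and the first 'GPS TOW' value into seconds since the Unix epoch. It rests on two assumptions that are not stated in the output itself: the week number is a 10 bit counter that has wrapped around once (hence the added 1024), and the first TOW value counts steps of 0.08 seconds, which fits the second value printed next to it (5879974 x 0.08 = 470397.92). `gps_to_unix` is a made-up helper name.

```shell
#!/bin/sh
# Sketch: convert the GPS week and time of week from the output above
# into seconds since the Unix epoch. gps_to_unix is a hypothetical helper.
# Assumptions: one week number rollover (1024 weeks) and TOW in 0.08 s steps.
gps_to_unix() {
    week="$1"
    tow_units="$2"
    gps_epoch=315964800                       # 6 Jan 1980 00:00:00 UTC as Unix time
    tow_seconds=$(( tow_units * 8 / 100 ))    # 0.08 s steps to whole seconds
    echo $(( gps_epoch + (week + 1024) * 604800 + tow_seconds ))
}

# Values from the 'Reference Time' block above
gps_to_unix 782 5879974
```

The result, 1408703997, corresponds to 22 August 2014, 10:39:57 UTC, which is within a second of the '~ UTC' line. Like that line, the sketch ignores the leap second offset between GPS time and UTC.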

Creating A SUPL-PROXY SSL Certificate

One more thing that needs to be done before SUPL-PROXY will run is to create an SSL certificate that it will send to a supl client during the connection establishment procedure. This is necessary as the proxy terminates the SSL connection to the client and creates a second SSL connection to the 'real' SUPL server on the Internet. This is done with a single command which creates a 'false' certificate for supl.google.com:

supl-cert supl.google.com

A Copy Of The SSL Server Certificate For The Client Device

The command above will create four files and one of them, srv-cert.pem, has to be copied to the mobile device and imported into the certificate store. For details, have a look at the description of how this is done for mitmproxy, a piece of software not used here, but which also requires this step.

What I noticed when I used SUPL-PROXY with my Android device was that the supl client did not check the validity of the server certificate and thus this step was not necessary. This is a serious security issue so if you want to know if your device properly checks the validity of the certificate don't copy the certificate to the mobile device and see if the SUPL request is aborted during the SSL connection establishment. Another approach is to first install it to be able to trace the SUPL request and later on remove the certificate again from the mobile device to see if SUPL requests are then properly aborted during the connection establishment phase.

Starting SUPL-PROXY

Once done, SUPL-PROXY can be started with the following command:

supl-proxy supl.nokia.com

This will start the proxy which will then wait for an incoming SUPL request and forward it to supl.nokia.com. Note that the incoming request from the client is for supl.google.com and the outgoing request will be to supl.nokia.com. This is not strictly necessary but makes the redirection of supl.google.com in the next step easier and also shows that Google's and Nokia's SUPL servers use the same protocol.

Redirecting SUPL Requests To The Proxy

At this point all SUPL requests will still go directly to Google's server and not the local supl proxy so we need to redirect the request. If you have a rooted Android device you can modify the /etc/hosts file on the device and point supl.google.com to the IP address of the device on which SUPL-PROXY waits for an incoming request (e.g. 192.168.55.17 in the example below). Another option is to make the DNS server return the IP address of the device that runs SUPL-PROXY. In my setup with the Raspberry Pi VPN Wi-Fi Router this can be done by editing /etc/hosts on the Raspberry Pi router and then restarting the DNS server. Here are the commands:

sudo nano /etc/hosts

--> insert the following line: 192.168.55.17 supl.google.com

sudo service dnsmasq restart

Tracing And Decoding A SUPL Request with SUPL-PROXY

At this point everything is in place for the SUPL request to go to SUPL-PROXY on the local device. After rebooting the mobile device and starting an app that requests GPS information, the request is now sent to SUPL-PROXY which in turn will open a connection to the real SUPL server and display the decoded request and response. Here's what the output looks like:

pi@mitm-pi ~ $ supl-proxy supl.nokia.com

Connection from 192.168.55.16:48642

SSL connection using RC4-MD5

Client does not have certificate.

mobile => server

<ULP-PDU>

<length>43</length>

<version>

<maj>1</maj>

<min>0</min>

<servind>0</servind>

</version>

<sessionID>

<setSessionID>

<sessionId>5</sessionId>

<setId>

<imsi>00 00 00 00 00 00 00 00</imsi>

</setId>

</setSessionID>

</sessionID>

<message>

<msSUPLSTART>

<sETCapabilities>

<posTechnology>

<agpsSETassisted><true/></agpsSETassisted>

<agpsSETBased><true/></agpsSETBased>

<autonomousGPS><true/></autonomousGPS>

<aFLT><false/></aFLT>

[…]

The output is rather lengthy so I've only copy/pasted a part of it into this post to give you a basic idea of the level of detail that is shown.

Tracing And Decoding A SUPL Request with Wireshark

The SUPL-PROXY output shows all request and response details so one could stop right here and now. However, if you prefer to analyze the SUPL request / response details in Wireshark, here's how that can be done: While a SUPL request that goes to the 'real' SUPL server directly can't be decoded due to the use of SSL and the unavailability of the server's SSL key, it's possible to decode the SUPL request between the SUPL client and SUPL-PROXY as the server's SSL key is available. The SSL key was created during the certificate creation process described above. 'srv-priv.pem' contains the private key and can be imported in Wireshark as follows:

- In Wireshark select "follow TCP stream" of a supl conversation. The TCP destination port is 7275

- In the stream select "decode as --> SSL". The cipher suite exchange then becomes visible

- Right-click on a certificate exchange packet and select "Protocol Preferences --> RSA Key List"

- Enter a new RSA key as shown in the first screenshot below. Important: The protocol is called "ulp", all in LOWERCASE (uppercase won't work!!!)

The second screenshot below shows what the output looks like once the SSL layer can be decrypted.

Have fun tracing!

How SUPL Reveals My Identity And Location To Google When I Use GPS

In a previous post I was delighted to report that assisted GPS (A-GPS) has become fast enough so I no longer have to rely on Google's Wi-Fi location service that in return requires me to send Wi-Fi and location data back to Google periodically. Unfortunately it turns out that the A-GPS implementation of one of my Android smartphones sends the ID of my SIM card (the IMSI) to the A-GPS server. From a technical point of view this is absolutely unnecessary and a gross privacy violation.

Read on for the details…

How Assisted GPS (A-GPS) works

To get a position fix, the GPS chip in a device needs to acquire the signal of at least three satellites. If the GPS chip is unaware of the identities of the satellites and their orbits this task can take several minutes. To speed things up a device can get information about satellites and their current location from an A-GPS server on the Internet. The single piece of information the server requires is a rough location estimate from the device. Usually a device is not aware of its rough location but it knows other things that can help such as the current cellular network id (MCC and MNC) and the id of the cell that is currently used. This information is sent to the A-GPS server on the Internet that then determines the location of the cell or network with a cell id / location database. The location of the cell or network is precise enough to assemble the satellite information that applies to the user's location which is then returned over the Internet connection. The satellite information is then fed to the GPS chip which can then typically find the signals of the GPS satellites in just a few seconds.

No Private Information Required

It's important to realize at this point that no personal information such as a user's ID is required in this process. The only information that can be traced back to a person, if the A-GPS client is implemented with privacy in mind, is the IP address from which the request was made to the server. In practice a mobile device is usually assigned a private IP address which is mapped to a public IP address from which the request seems to have originated. This public IP address is shared with many other users. Hence, only the network operator can identify which user has originated the request while the A-GPS server never gains any insight into who has sent the request.

The SUPL protocol and Privacy Breaching Information Fields

A standardized method for a device to gather A-GPS information from a server is the Secure User Plane Location protocol (SUPL). Several companies provide A-GPS SUPL servers answering requests on TCP port 7275 such as Google (supl.google.com) and Nokia/Microsoft (supl.nokia.com). In the case of my Android smartphone, supl.google.com is used. As the 'S' in 'SUPL' suggests, the protocol uses an encrypted connection for the request. As a consequence, using Wireshark without any additional tools to decode the request won't work as the content of the exchange is encrypted. Fortunately there's SUPL-PROXY, an open source piece of software by Tatu Mannisto that can be used in combination with domain redirection to proxy the SUPL SSL connection and decode the request and response messages. And on top, the SSL certificate generated by Tatu's software for the proxying can be fed into Wireshark which will then also decode the SUPL messages. And what I saw here very much disappointed me:

My SIM Card ID In The SUPL Request And No SSL Certificate Check

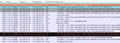

I almost anticipated it but I was still surprised and disappointed to see my SIM card's ID, the International Mobile Subscriber Identity (IMSI), in the request. This is shown in the first screenshot below. As explained above, the IMSI or any other personal information is not necessary at all for the request so I really wonder why it is included!? And just to make sure this is really the case I ran another test without a SIM card in the device and also got a valid SUPL return with the IMSI field set to 0's.

The second screenshot shows the cell id in the request which is required for the SUPL request. The IMSI in combination with the cell ID provides the owner of the SUPL server (i.e. Google in my case) a permanent personal identifier and as a consequence the ability to pinpoint and record my location whenever a SUPL request is made. And in this day and age, it's pretty certain that my network operator is not the only entity that is aware of my IMSI…

The third screenshot below shows the first part of the SUPL response which includes the location of the cell that served me when I recorded the SUPL request. Just type the two coordinates into Google search and you'll end up with a nice map of the part of Austria where I was when I put together this post. The second part, not shown in the screenshot, contains the satellite information for the GPS receiver.

And the cream on top is that the SUPL client on my Android device did NOT check the SSL certificate validity. I did not include the server certificate in the trusted certificate list so the client should have aborted the request during the SSL negotiation phase. But it didn't and thus anyone between me and the SUPL server at Google can get my approximate location by spoofing the request in the same way I did. I'm sure that two years ago, most people would have laughed and said that it's unlikely this could happen or that someone else but my network operator would know my IMSI, but one year post-Snowden I don't think anyone's laughing anymore…

When The Baseband Makes The Query

And now to the really scary part: The next thing I tried was to see if I could reproduce this behavior with other Android devices at hand. To my surprise the two I had handy would not send a SUPL request over Wi-Fi and also not over the cellular network (which I traced with tcpdump on the device). After some more digging I found out that some cellular radio chips that include a GPS receiver seem to perform the requests themselves over an established cellular IP connection. That means that there is NO WAY to trace the request and ascertain if it contains personal information or not. This is because the request completely bypasses the operating system of the device if it is made directly by the radio chip. At this point in time I have no evidence that the two devices from which I did not see SUPL requests actually use such a baseband chip A-GPS implementation and if there are personal identifiers in the message or not. However, I'm determined to find out.

And for those of you who'd like to try yourselves I'll have a follow up post that describes the details of my trace setup with two Raspberry Pis, Wireshark, and the SUPL-PROXY software mentioned above.

First Time To Fix – When GPS-only Location Is Good Enough And Protects My Privacy – Sort Of

When I upgraded to my recent smartphone hardware (a Samsung Galaxy S4 running CyanogenMod) I noticed that the time it takes to get a first location via GPS (first time to fix) is stunningly quick compared to my previous device. Even after not having used the GPS receiver for more than half a day, it only takes a few seconds until at least 3 satellites have been decoded and a pretty good approximation of my location is being returned.

Obviously using Google's Wi-Fi SSID database gives an even faster first location approximation but I am not very fond of having to consent to periodically gathering SSID and location information for Google to return the favor. But with such a fast GPS startup time I don't need Google's SSID services anymore and thus I have happily switched it off to re-gain some of my privacy. Yes, I know, SSID location gathering should be anonymous but I don't want to do it anyway.

But getting a fast GPS fix means that the device has to ask for satellite location information which also requires a rough location approximation being sent to a server in the network in what is called a SUPL request. More about my 'adventures' to find out more about what's included in such a request in my next post on the topic.

Time Lapses – RTC Drift In My Servers

I always assumed that my Ubuntu based servers would automatically keep their real time clocks synchronized to NTP servers on the net. But when I recently checked the time on my local Owncloud server at home, I was quite surprised that it was off by over a minute. Also, the time on a virtual server I use for my backup SSH tunnels on the Amazon cloud was off by over 30 seconds. It turns out that Ubuntu server only polls an NTP server at system startup and both machines have been up and running without a restart for over a month. Quite an RTC drift for only 30 days, I would have expected far less. The issue can be fixed quite easily with the following entry in /etc/crontab but I wonder why Ubuntu doesn't do it out of the box!?:

# Synchronize date with ntp server once a day and write the result to syslog

00 6 * * * root ntpdate ntp.ubuntu.com | logger
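
To put the observed drift into numbers, it can be expressed in parts per million (ppm). This is a rough sketch only: `drift_ppm` is a made-up helper name, and the inputs of exactly 60 and 30 seconds over exactly 30 days are assumptions, as I only know that the clocks were off by 'over a minute' and 'over 30 seconds' after 'over a month'.

```shell
#!/bin/sh
# Sketch: express a clock offset accumulated over a number of days in ppm.
# drift_ppm is a hypothetical helper; the inputs below are rounded assumptions.
drift_ppm() {
    awk -v off="$1" -v days="$2" 'BEGIN {
        # offset in seconds divided by elapsed seconds, scaled to parts per million
        printf "%.1f\n", off / (days * 86400) * 1000000
    }'
}

drift_ppm 60 30    # home server: about a minute in 30 days
drift_ppm 30 30    # virtual server: about 30 seconds in 30 days
```

With these rounded inputs the two machines come out at roughly 23 ppm and 12 ppm.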