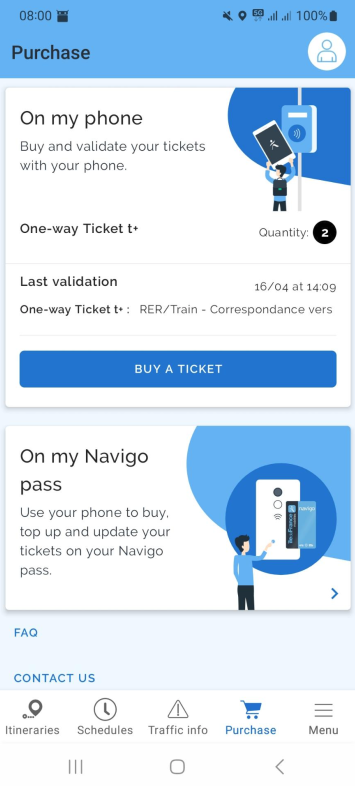

It took many, many years, but I finally used an NFC payment service on a mobile device: to pay in the Paris metro! So how well does it work, and how does it compare to other cities?

Digitization – More Complicated?

To me, digitization is something out of the 1980s, but it seems to be a resurrected topic in the 2020s. In my opinion, digitization should strive to make things simpler for the customer, not more complicated. Unfortunately, in reality, many digitized services are just as complicated as their analog predecessors, or even more so. There is one exception that comes to my mind, however, and that is how one can pay in the London metro. It’s so simple that I’ve come to see it as the ‘gold standard’ of a digitized service. So let’s have a look at London Transport first and then compare it with the system used by the Paris metro.
