In the previous post I had a closer look at, among other things, the spectrum use of ADSL vs. ADSL2+. The change in uplink and downlink throughput when the line was upgraded was quite significant. Most of the gain came from two changes: the lower part of the spectrum that was previously reserved for voice telephony is now used as additional spectrum for the DSL uplink, and overall spectrum use was doubled from 1.1 to 2.2 MHz, i.e. from 256 tones (carriers) to 512. Let’s have a look at how ADSL2+ spectrum use compares with VDSL2, which I have at home.

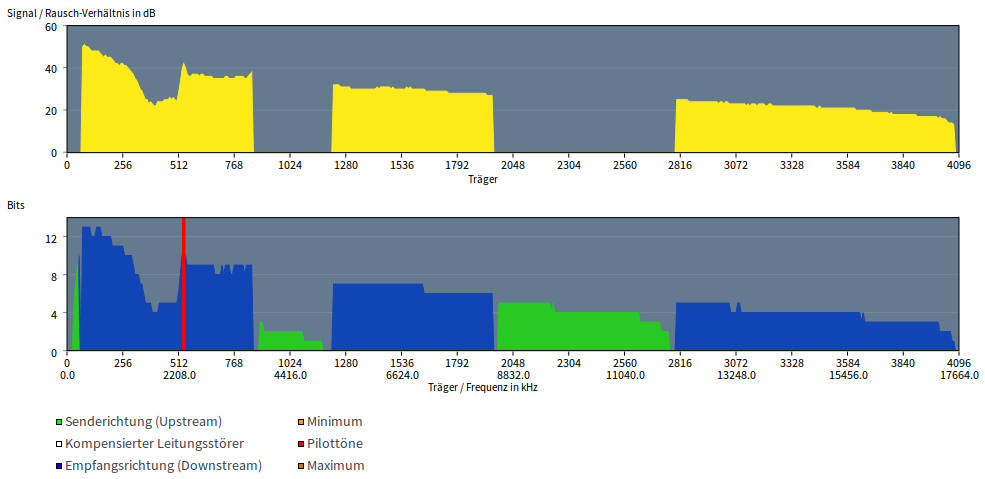

The screenshot can be directly compared to the ADSL and ADSL2+ screenshots in the previous post. The upper yellow graph shows the signal-to-noise ratio (SNR) of the portions of the spectrum that are used for downlink data transmission. The lower graph shows the number of bits per carrier (tone). Blue are downlink channels, green are uplink channels. Compared to the ADSL charts in the previous post, the following major differences become immediately apparent:

- Spectrum use was significantly expanded from 1.1/2.2 MHz (ADSL / ADSL2+) to 17.6 MHz. Instead of 512 tones, 4096 tones (carriers) are now used.

- To increase uplink data rates to 10 Mbit/s (in my example), several separate parts of the spectrum are used for the uplink.
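
As a quick sanity check of the spectrum figures in the list above, the tone counts can be converted into bandwidth. The sketch below assumes the standard DMT tone spacing of 4.3125 kHz, which is not stated in the post itself; the function name is just for illustration.

```python
# Assumption: tone (carrier) spacing of 4.3125 kHz, as used by ADSL, ADSL2+
# and the VDSL2 profiles that go up to 17.6 MHz. Not taken from the post.
TONE_SPACING_KHZ = 4.3125


def bandwidth_mhz(tones: int) -> float:
    """Return the upper edge of the spectrum covered by `tones` carriers, in MHz."""
    return tones * TONE_SPACING_KHZ / 1000.0


if __name__ == "__main__":
    for name, tones in [("ADSL", 256), ("ADSL2+", 512), ("VDSL2", 4096)]:
        print(f"{name:7s} {tones:5d} tones -> {bandwidth_mhz(tones):.3f} MHz")
```

Under that assumption, 256, 512 and 4096 tones come out at roughly 1.1, 2.2 and 17.7 MHz, which is in line with the values read from the charts.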

It’s also quite interesting to observe how the signal-to-noise ratio slowly gets worse as the frequency increases. One thing I would like to know is why the SNR decreases so significantly between tones 256 and 512 and then suddenly improves again. Does anyone with layer 1 expertise have an idea why this is so?

It is also interesting to see how the number of bits per tone slowly decreases as the SNR worsens.
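
To get a feeling for how SNR and bits per tone hang together, here is a rough sketch based on the textbook "SNR gap" approximation for DMT bit loading. It is not how my modem necessarily does it: the gap and margin values are assumptions, the function name is made up for this example, and the cap of 15 bits per tone is the usual DSL limit rather than something read from the chart.

```python
import math

SNR_GAP_DB = 9.8   # assumption: gap for uncoded QAM at a bit error rate of about 1e-7
MARGIN_DB = 6.0    # assumption: target noise margin configured on the line
MAX_BITS = 15      # assumption: usual per-tone upper limit in DSL bit loading


def bits_per_tone(snr_db: float) -> int:
    """Estimate how many bits a single tone can carry at the given SNR (in dB)."""
    # Subtract gap and margin, then convert from dB to a linear power ratio.
    effective_snr = 10 ** ((snr_db - SNR_GAP_DB - MARGIN_DB) / 10)
    bits = math.floor(math.log2(1 + effective_snr))
    # Clamp to the range a tone can actually be loaded with.
    return max(0, min(MAX_BITS, bits))


if __name__ == "__main__":
    for snr in (55, 45, 35, 25, 15):
        print(f"SNR {snr} dB -> about {bits_per_tone(snr)} bits per tone")
```

With these assumptions each 3 dB of lost SNR costs roughly one bit per tone, which matches the gradual decline of the lower graph towards the higher frequencies.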