In the good old fixed-line SIP world, the originator of a speech call told the other side which speech codecs it supported. The other side then picked a suitable codec and informed the originator of its choice. That was it, and things were ready to go. In VoLTE, as you might have guessed, it’s not quite as simple. Today, codecs are rate adaptive, and the mobile network can limit the bandwidth for the data stream to a value that is lower than the highest data rate of a codec family.
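The simple fixed-line exchange described above can be sketched in a few lines of Python. This is only an illustration of the idea, not real SIP/SDP handling: the function name `pick_codec` and the codec lists are assumptions made up for the example, and the rule of "take the first offered codec that the answerer also supports" is one plausible selection policy.

```python
def pick_codec(offered, supported):
    """Return the first codec from the caller's offer that the callee supports.

    offered   -- codec names in the caller's order of preference
    supported -- set of codec names the callee can handle
    Returns None if there is no codec in common.
    """
    for codec in offered:
        if codec in supported:
            return codec
    return None


# Hypothetical example: the caller prefers G722 but the callee only does G.711.
caller_offer = ["G722", "PCMA", "PCMU"]
callee_supported = {"PCMA", "PCMU"}
chosen = pick_codec(caller_offer, callee_supported)
```

Here `chosen` ends up as the caller's second preference, because that is the first entry both sides have in common. Nothing in this sketch covers rate adaptation or a network-imposed bandwidth limit, which is exactly the part that makes VoLTE more involved.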

My IPv6 DNS AAAA-Resolver Bug Is A Feature – With A Fix

I’m quite advanced in my IPv6 adventures, but one thing has been holding me back so far: the DNS resolver in my DSL router at home refuses to return the IPv6 address of my local server. Instead, it doesn’t respond to the request at all. As a consequence, my web browser waits for 10 seconds before it gives up and falls back to the server’s IPv4 address, which was returned just fine. This had me baffled for a long time because all other DNS resolvers returned the IPv6 address without problems. Now it turns out that this is not a bug at all but a feature.

Continue reading My IPv6 DNS AAAA-Resolver Bug Is A Feature – With A Fix
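To see this kind of behavior for yourself, it helps to time the IPv4 and the IPv6 lookup separately. The following is a minimal sketch using Python's standard `socket.getaddrinfo`; the helper name `timed_lookup` and the host name `server.example` are assumptions for illustration. Note that it goes through the operating system's resolver rather than querying the router directly, so caching on the machine can hide the delay.

```python
import socket
import time


def timed_lookup(host, family, resolve=socket.getaddrinfo):
    """Resolve host for one address family and measure how long it takes.

    Returns (addresses, seconds). A failed lookup yields an empty list.
    The resolve parameter only exists so the function can be tried out
    without network access.
    """
    start = time.monotonic()
    try:
        results = resolve(host, None, family, socket.SOCK_STREAM)
        # Each result is (family, type, proto, canonname, sockaddr);
        # the address string is the first element of sockaddr.
        addresses = sorted({entry[4][0] for entry in results})
    except socket.gaierror:
        addresses = []
    return addresses, time.monotonic() - start


# Usage (hypothetical host name, needs a working resolver):
# for label, family in (("A", socket.AF_INET), ("AAAA", socket.AF_INET6)):
#     addresses, seconds = timed_lookup("server.example", family)
#     print(label, addresses, round(seconds, 2), "s")
```

If the AAAA line takes many seconds and comes back empty while the A line answers immediately, the resolver is most likely dropping the IPv6 query rather than answering it.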

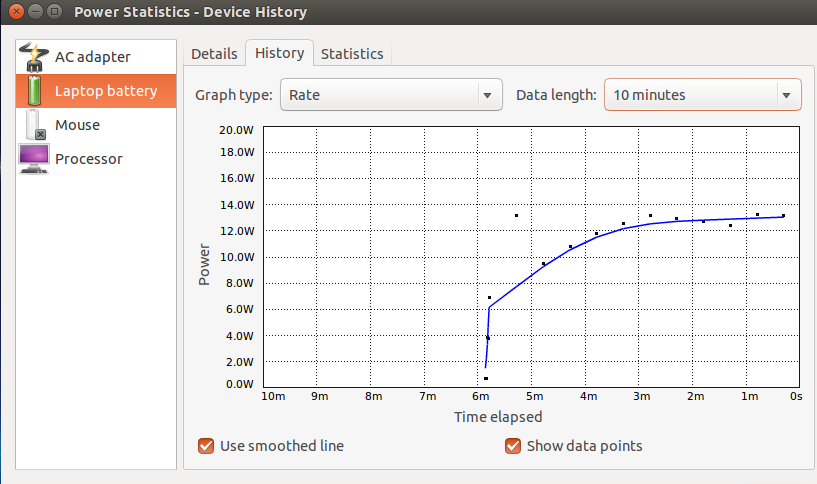

Linux Command To Get The Notebook’s Current Power Consumption

Ubuntu visualizes current and previous power consumption and recharge behavior in a couple of nice graphs as shown in the screenshots above and below. When I have screen brightness quite high but not much else is going on, my notebook draws around 12-13 Watts of power and my battery lasts around 4 hours. Turn up the brightness, run a virtual machine or two and stream a video from YouTube, and power consumption is obviously significantly higher. Sometimes it would be nice, however, to not only see a graph but also to get a reading of how much power my notebook draws at any point in time. Fortunately, there’s a command for that.

Continue reading Linux Command To Get The Notebook’s Current Power Consumption

VoLTE – Some Thoughts On Port Usage

Most services on the Internet make use of a single TCP or UDP connection. The client opens a TCP connection from a random port to a well known port on the server (e.g. port 443 for HTTPS) and performs authentication and establishment of an encrypted session in that connection. Simple! In VoLTE, however, things are much more complicated as there are three streams used in practice and TCP and UDP can even be mixed.

Continue reading VoLTE – Some Thoughts On Port Usage

EU Roaming Fees Phase Out News

Back in September last year I waded through the legislative proposal to phase out roaming surcharges in the EU in two steps. The first step, foreseen for April 2016, is coming closer, and in my latest invoice my mobile network operator has informed me of the coming changes for this step and also for the next one in mid-June 2017. This makes for interesting reading as it is the first time I have seen something about this topic other than press articles and EU legislative proposals.

7 Days, 15 Terabytes and 1 Kernel Bug Later

Recently I upgraded my notebook to 16 GB of RAM to be able to run more virtual machines in parallel and to prepare for an upgrade from a 32-bit to a 64-bit OS. Unfortunately, I discovered after a week or so that my disk write speed was deteriorating significantly over time. The more I looked, the worse it seemed to get. What followed was a chase that lasted 7 days and nights and involved 15+ terabytes of data transfers until I finally found the reason and a workaround.

Continue reading 7 Days, 15 Terabytes and 1 Kernel Bug Later

VoLTE – Some Thoughts About Register vs. Subscribe

When I first came across the VoLTE service registration process I was a bit baffled as to why it is split into two procedures. The reason for my confusion was that in the circuit-switched world there is only one process, a “location update”. Once done, that’s it and the mobile can go back to sleep. In VoLTE, there is a registration procedure followed by a subscription procedure. Here’s why:

Continue reading VoLTE – Some Thoughts About Register vs. Subscribe

IPv6 When Roaming

I’ve written a number of times now about having IPv6 access both at home and on my mobile devices anywhere, but it’s actually not as universal as that after all because there is one loophole…

New Features In The ‘Conversations’ Instant Messenger

In recent releases, the developers of the seminal open source ‘Conversations’ Jabber app for Android have added two really cool new features: group chat encryption and the ability to edit the last message that was sent.

Continue reading New Features In The ‘Conversations’ Instant Messenger

Is There An Upload Time Limit In Owncloud?

In a previous post I explored the steps necessary to increase Owncloud’s file size limit to 3 GB and checked that such large files can still be downloaded over a ‘slow’ 10 Mbit/s VDSL uplink, where each gigabyte takes around 20 minutes to transfer. In my initial use case I uploaded my large files locally over a high-speed link, so I didn’t encounter any timeouts. But what if large files are uploaded over slower links? Is there a timeout in Apache or Owncloud that aborts the process after some time?
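To get a feeling for how long such a transfer has to survive, here is a quick back-of-the-envelope calculation in Python. It is only a sketch: the function names are made up for this example, a gigabyte is assumed to be 10^9 bytes, and protocol overhead is ignored, so the first figure is a lower bound rather than a measurement.

```python
GB = 1000 ** 3  # assumption: decimal gigabytes


def transfer_minutes(size_bytes, rate_bits_per_s):
    """Ideal transfer time in minutes at a given line rate, no overhead."""
    return size_bytes * 8 / rate_bits_per_s / 60


def effective_rate_mbit(size_bytes, minutes):
    """Throughput in Mbit/s implied by a measured transfer time."""
    return size_bytes * 8 / (minutes * 60) / 1e6


ideal = transfer_minutes(GB, 10e6)       # about 13.3 minutes per GB at 10 Mbit/s
observed = effective_rate_mbit(GB, 20)   # about 6.7 Mbit/s for 20 minutes per GB
whole_file = 3 * 20                      # 3 GB at 20 minutes each: 60 minutes
```

At the observed 20 minutes per gigabyte, a full 3 GB file keeps a single HTTP request busy for around an hour, so any server-side time limit shorter than that would matter.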