I’ve been using SSDs for many years now and I’m more than happy as the speed difference on a notebook compared to hard disks is just like day and night. Unfortunately I use a one terabyte Samsung 840 Evo in my notebook that has now bitten me for the second time.

LTE And The Number Of Simultaneously Connected Users

Recently I read this very interesting post that analyzed how many users can be connected to an LTE eNodeB simultaneously. As the author says in the article, there is no single answer to this as many factors come into play. From a pure uplink signaling point of view there can be well over 1000 users simultaneously in RRC-Connected state. In practice I wouldn’t be surprised if networks set the limit much lower, as 1000 users is far too many to give anyone a halfway decent speed experience. So how many might be connected simultaneously to a base station today? Let’s play with some numbers.

Continue reading LTE And The Number Of Simultaneously Connected Users

So Glibc Is Not Statically Linked!?

When I first heard about the news of a serious security vulnerability in glibc I thought that I would soon see a lot of programs of my Linux distributions being updated. That’s because I faintly remember that I once read somewhere that in Linux, libraries are often compiled into the application instead of dynamically linked at runtime. But I guess that was not quite true, at least not for glibc anyway. So far I’ve only seen an update of the glibc library itself. Since then no other programs have been updated due to statically linking that library. So if that holds true we might have been luckier than I thought. Would be nice for a change!

Decoding An 802.11ac Beacon Frame

Wifi 802.11ac with 80 MHz channels (Wave 1) has been on the market for some time now and high-end notebooks and smartphones have started supporting the new standard. I’m still stuck with 802.11n on 5 GHz with 40 MHz channels but I do have an 802.11ac capable access point. So while I can’t use the functionality with my notebook for the moment, I decided I could at least have a look at the Access Point’s 802.11ac capabilities by doing a Layer 2 trace and capturing a Beacon Frame.

Ubuntu Has Lost Its Wifi Tracing Capabilities

When I recently wanted to do a quick Wi-Fi Layer 2 trace on a machine running Ubuntu 15.10 I couldn’t get it working anymore. I was quite surprised because I’ve used this machine many times before for this purpose. Grudgingly I started to investigate…

Continue reading Ubuntu Has Lost Its Wifi Tracing Capabilities

WiFi Up- And Downlink Speed Disparity

In a previous post I had a look at the possible downlink speeds of my current WiFi setup at home. The exercise clearly showed that my current 5 GHz WiFi with a 40 MHz channel in combination with an Intel Centrino 6205 card in my notebook and a Fritzbox 7490 VDSL router can easily cope with the 50 MBit/s downlink speed of my current Internet backhaul. In the uplink direction my current line offers 10 MBit/s, so there is no problem there either. One thing I noticed though when transferring files to a local server was that uplink speeds were nowhere near the speed reached when downloading the same file from the server.

Forget About Using Firefox When Testing Network Throughput

After recently upgrading my VDSL line at home, it was also time to upgrade my Wi-Fi equipment to support the higher speeds. When doing some speed tests after the upgrade I noticed to my satisfaction that my Wi-Fi connectivity now easily supports the 50 MBit/s VDSL line. I also noticed, however, that downloading a file from a local web server at 100 MBit/s takes a heavy toll on my CPU: it’s almost completely maxed out. At first I suspected the Wi-Fi driver of causing the high load but I soon found out that Firefox is the actual culprit.

Continue reading Forget About Using Firefox When Testing Network Throughput

LTE CA Not For Speed But To Address Rising Demand

So far the tech press pretty much unanimously touts advances in LTE Carrier Aggregation as a way to further increase data rates for customers. With 2×20 MHz Carrier Aggregation a 2×2 MIMO network can be pushed to theoretical peak data rates of 300 MBit/s. 3CA pushes the limit to a theoretical 450 MBit/s, and 4×4 MIMO and 256 QAM modulation push the limit even further. From my point of view, however, the main benefit of Carrier Aggregation lies elsewhere.
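To see where those peak figures come from, here is a rough back-of-the-envelope sketch. It assumes a theoretical peak of about 150 MBit/s per 20 MHz carrier with 2×2 MIMO and 64 QAM and then scales linearly with the number of carriers, MIMO layers and bits per symbol. The function name and the linear scaling are my own simplification for illustration; real peak rates depend on device category and signaling overhead.

```python
# Assumption: roughly 150 MBit/s theoretical peak for one 20 MHz carrier
# with 2x2 MIMO and 64 QAM (6 bits per symbol).
BASE_MBIT_PER_CARRIER = 150.0


def peak_rate_mbit(carriers, mimo_layers=2, bits_per_symbol=6):
    """Simplified theoretical peak rate in MBit/s (hypothetical helper)."""
    mimo_factor = mimo_layers / 2            # relative to 2x2 MIMO
    modulation_factor = bits_per_symbol / 6  # relative to 64 QAM
    return BASE_MBIT_PER_CARRIER * carriers * mimo_factor * modulation_factor


if __name__ == "__main__":
    print("2CA, 2x2, 64 QAM: ", peak_rate_mbit(2))
    print("3CA, 2x2, 64 QAM: ", peak_rate_mbit(3))
    print("3CA, 4x4, 256 QAM:", round(peak_rate_mbit(3, mimo_layers=4, bits_per_symbol=8)))
```

The first two calls reproduce the 300 and 450 MBit/s figures mentioned above; the third only illustrates how 4×4 MIMO and 256 QAM stack on top under the same simplification.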

Continue reading LTE CA Not For Speed But To Address Rising Demand

And The Weakest Link In Mobile Security Is…

… the PIN.

Today I read in the news that a smartphone manufacturer has received a “friendly” invitation letter from US law enforcement asking them to help decrypt the encrypted phone of a terrorist. The encryption key itself is protected with the PIN that the user has to type in and software that keeps increasing the delay between two guessing attempts. I am sure the company sympathizes with the general idea of decrypting a device of a terrorist but sees itself unable to comply with the request as this would also significantly weaken security and privacy for the rest of us. If they let someone do it with that phone it can be done with others, too. Once in the wild…

While most of the media is discussing the pros and cons of the move there is a deeper issue here that nobody seems to think about: A simple 4 or 6 digit PIN and a bit of software should not protect the ciphering key in the first place.
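A quick calculation shows how small that search space is once the increasing delay between attempts is out of the way. The sketch below is only an illustration: the 0.08 seconds per guess is a hypothetical figure I picked for the example, not a measured value for any particular phone, and the function names are my own.

```python
def pin_keyspace(digits):
    """Number of possible numeric PINs with the given number of digits."""
    return 10 ** digits


def worst_case_seconds(digits, seconds_per_attempt):
    """Time to try every PIN if nothing slows down the guessing."""
    return pin_keyspace(digits) * seconds_per_attempt


if __name__ == "__main__":
    ATTEMPT_TIME = 0.08  # hypothetical time per guess in seconds (assumption)
    for digits in (4, 6):
        seconds = worst_case_seconds(digits, ATTEMPT_TIME)
        print(f"{digits}-digit PIN: {pin_keyspace(digits)} combinations, "
              f"about {seconds / 3600:.1f} hours worst case")
```

Whatever the exact time per guess is, ten thousand or one million combinations are not much of an obstacle on their own, which is why the software that enforces the delay matters so much.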

Continue reading And The Weakest Link In Mobile Security Is…

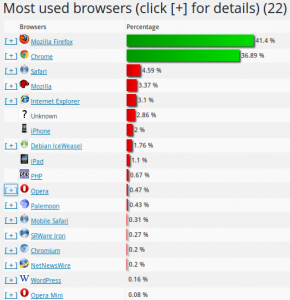

Blog Statistics – The First Weeks

After moving from Typepad to my own WordPress installation, search engines haven’t yet caught up with the change. As a consequence most of the traffic is from regular readers who have updated their bookmarks and RSS feeds after reading about the move on the old site. So before traffic referred to my blog by search engines increases again, I took a snapshot of current statistics to see if regular readers use a different mix of browsers, operating systems and connectivity.
