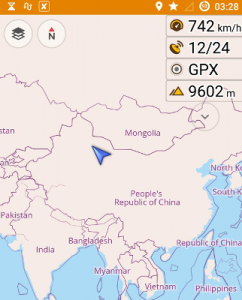

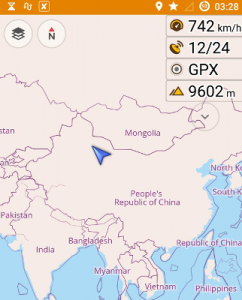

Back in 2011 I had my first in-flight Internet experience over the Atlantic with a satellite-based system. Since then I’ve been online a couple of times during domestic flights in the US, where a ground-based system is used. In Europe most carriers don’t offer in-flight Internet access so far, but an LTE-based ground system is in the making which will hopefully have enough bandwidth to support the demand in the years to come. When I was recently flying to Asia I was positively surprised that Turkish Airlines offered Internet access on my outbound and inbound trips. It was free in business class and available for $15 for the duration of the trip in economy class. I was of course interested in how well it would work, despite both flights being night flights and a strong urge to sleep.

While most people were still awake in the plane, speeds were quite slow. Things got a bit better once people started to doze off, and I could observe data rates in the downlink direction between 1 and 2 Mbit/s. Still, web browsing felt quite slow due to the 1000 ms round trip delay over a geostationary satellite link. But it worked, and I could even do some system administration over ssh connections, although at such round trip times command line interaction was far from snappy.
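As a rough sanity check on that delay, the following Python sketch estimates how much of the round trip time is pure signal propagation. It assumes the satellite is directly overhead at the nominal geostationary altitude of about 35,786 km and ignores the cruising altitude of the plane; the function name and the four-hop model are illustrative assumptions, not details of the actual system on board.

```python
# Rough estimate of the propagation-only round trip time over a
# geostationary satellite. Assumes the satellite is directly overhead
# and ignores the plane's own altitude (both simplifications).
SPEED_OF_LIGHT_KM_S = 299_792.458
GEO_ALTITUDE_KM = 35_786  # nominal geostationary altitude above the equator


def min_geo_rtt_ms(altitude_km=GEO_ALTITUDE_KM):
    # Request: plane -> satellite -> ground station.
    # Reply:   ground station -> satellite -> plane.
    hops = 4
    return hops * altitude_km / SPEED_OF_LIGHT_KM_S * 1000


print(f"Propagation-only round trip: {min_geo_rtt_ms():.0f} ms")
```

Under these assumptions propagation alone accounts for a bit under 480 ms, so roughly half of the observed 1000 ms would come from elsewhere, for example queuing on the shared link and the path through the terrestrial Internet.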

In the uplink I could get data rates of around 50 to 100 kbit/s during my outbound leg, which made it pretty much impossible to send anything larger than a few kilobytes. On the return trip I could get around 300 kbit/s in the uplink direction when I tried. That is still not fast, but much more usable.
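To put these uplink rates into perspective, here is a minimal Python sketch that computes how long an upload would take at each of them. The 2 MB photo size is a hypothetical example and not a measurement from the flight, and the calculation ignores protocol overhead and the link outages, so real transfers would take longer.

```python
def upload_seconds(size_bytes, rate_kbit_s):
    """Ideal transfer time in seconds, ignoring protocol overhead."""
    return size_bytes * 8 / (rate_kbit_s * 1000)


PHOTO_BYTES = 2_000_000  # hypothetical 2 MB photo, not a measured value

# Uplink rates mentioned above: outbound leg (50-100) and return leg (300).
for rate in (50, 100, 300):
    seconds = upload_seconds(PHOTO_BYTES, rate)
    print(f"{rate:>3} kbit/s: {seconds:6.1f} s")
```

Even in this idealized case the photo would need more than five minutes at 50 kbit/s, compared to a little under a minute at 300 kbit/s.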

Apart from web browsing and some system administration over ssh, I mostly used the available connectivity to chat and exchange pictures with others at home using Conversations. While the link was mostly available, I noticed a number of outages ranging from a few tens of seconds to several minutes. I’m not sure what caused them, but surely not clouds or bad weather above the plane… 🙂

While overall I was happy to be connected, I have to say that, like the systems in the US, this one no longer offers enough capacity, and it will become more and more difficult to offer a good customer experience without bumping up speeds significantly.