I recently wanted to check where, and how much, LTE and 5G network coverage one of the German network operators really has these days. The problem: I couldn’t find coverage maps provided by that network operator on the Internet. What I found instead were the maps of the Bundesnetzagentur, the German telecoms regulator, which show the fixed and mobile network availability of all German network operators. I had no idea that this website existed, and I was positively surprised by how detailed the information is. So if you want to compare networks or just find out which network is available in a certain town, street or location, have a look there instead of trying to find coverage maps on the websites of individual companies. Very nice! If you are aware of similar coverage maps from network operators or regulators in other countries, please consider leaving a comment. Thanks!

Author: Martin

Hyperscalers at Home

I recently attended a session that focused on how to provide cloud native hardware and software in a telecoms environment today. In other words: How can network operators deploy hardware and software in their data centers to build Kubernetes clusters, huge storage capacities and ultra-fast networking, which today form the basis for all kinds of (5G) telecom workloads? Such workloads include, for example, the 5G core network functions, think 3GPP AMF (Access and Mobility Management Function), SMF (Session Management Function) and UPF (User Plane Function), but also the subscriber database, legacy workloads such as a virtual EPC (LTE core network), IMS functions for voice calls, etc.

The theory is easy: Roll in some hardware, put Kubernetes on it and then deploy 3GPP software components into the cluster. After all, the idea is that Kubernetes is Kubernetes, and workloads running in the cluster are shielded from the hardware setup below.

In reality, however, it’s not quite that straightforward…

University of Vienna’s Eduroam Certificate Hopping

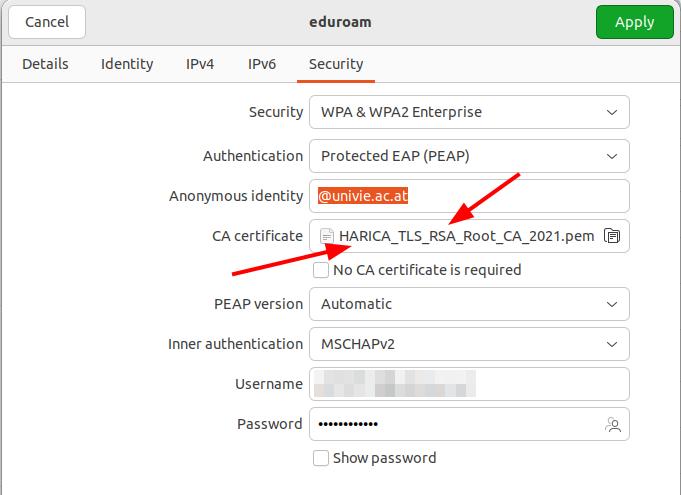

Recently, Eduroam Wi-Fi access on a device I administer, courtesy of the University of Vienna, stopped working. At first I thought it was a temporary outage, but after a few days that theory went out the window, as connection establishment kept failing. A quick look at /var/log/syslog revealed that the certificate check failed. But why, and why now?
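A minimal sketch of that first diagnostic step in Python, assuming the Wi-Fi client uses wpa_supplicant and that it reports failed server certificate checks in syslog as `CTRL-EVENT-EAP-TLS-CERT-ERROR` events with `reason`, `depth`, `subject` and `err` fields. The exact log format and the helper names `parse_fields`, `find_cert_errors` and `scan_syslog` are assumptions for illustration, not taken from the original post.

```python
import re

# Assumed wpa_supplicant log format, e.g.:
# "... wlan0: CTRL-EVENT-EAP-TLS-CERT-ERROR reason=1 depth=0 subject='/CN=...' err='...'"
CERT_ERROR = re.compile(r"CTRL-EVENT-EAP-TLS-CERT-ERROR\s+(?P<fields>.*)")
# key=value pairs; values are either single-quoted strings or unquoted tokens
FIELD = re.compile(r"(\w+)=(?:'([^']*)'|(\S+))")


def parse_fields(text):
    """Turn "reason=1 subject='/CN=x'" into {'reason': '1', 'subject': '/CN=x'}."""
    fields = {}
    for match in FIELD.finditer(text):
        key, quoted, plain = match.groups()
        fields[key] = quoted if quoted is not None else plain
    return fields


def find_cert_errors(lines):
    """Return one dict of fields per certificate error event found in the lines."""
    errors = []
    for line in lines:
        match = CERT_ERROR.search(line)
        if match:
            errors.append(parse_fields(match.group("fields")))
    return errors


def scan_syslog(path="/var/log/syslog"):
    """Read a syslog file (may need elevated rights) and return all certificate errors."""
    with open(path, encoding="utf-8", errors="replace") as log:
        return find_cert_errors(log)
```

The `depth` field, if present in the log, would indicate which certificate in the chain was rejected, and `subject` shows whose certificate it was, which is a good starting point for the "why now?" question.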

The Online Office Inflection Point – 2025

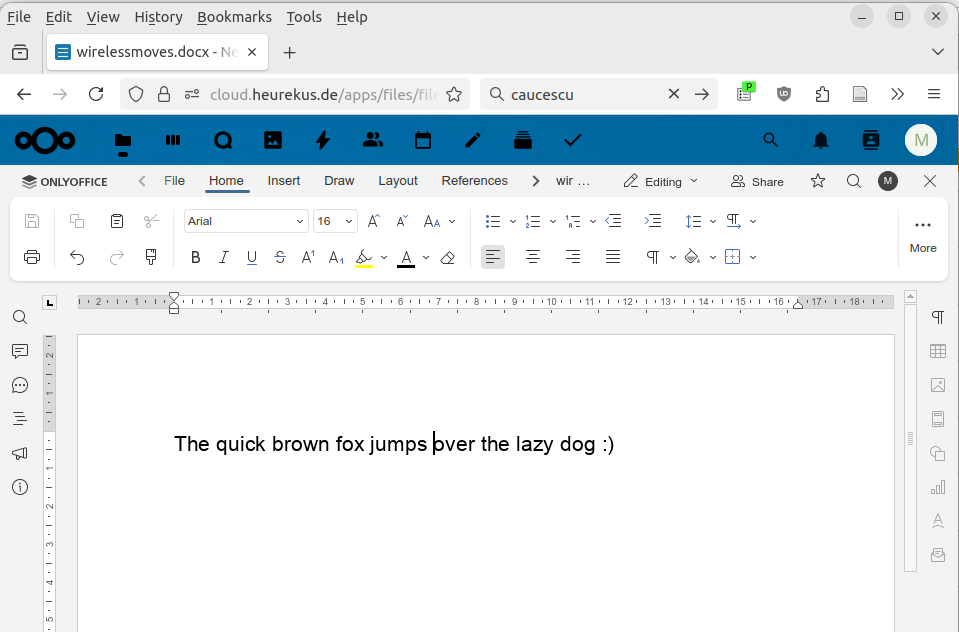

I recently noticed just how much I use the online office suite in my Nextcloud these days, and I have definitely reached the point where I use it more than LibreOffice installed on my notebook. There are a number of reasons for this.

Back to the Roots – Ultra Mobile Work Instead of Taking the Notebook

It’s been almost 20 years! Back in 2008, I wrote about the equipment I used to report from Mobile World Congress: My smartphone and a Bluetooth keyboard. This worked quite nicely for some time and I used the setup on many occasions. Eventually, however, notebooks got lighter and smaller, and the balance between the size and mobility of a smartphone with a foldable keyboard on the one hand and the versatility of a notebook on the other shifted towards the notebook. For almost two decades! But now, ultra mobile work and productive quality time with the smartphone are back for me!

RustDesk – Part 3 – Project Setup and Network Traffic

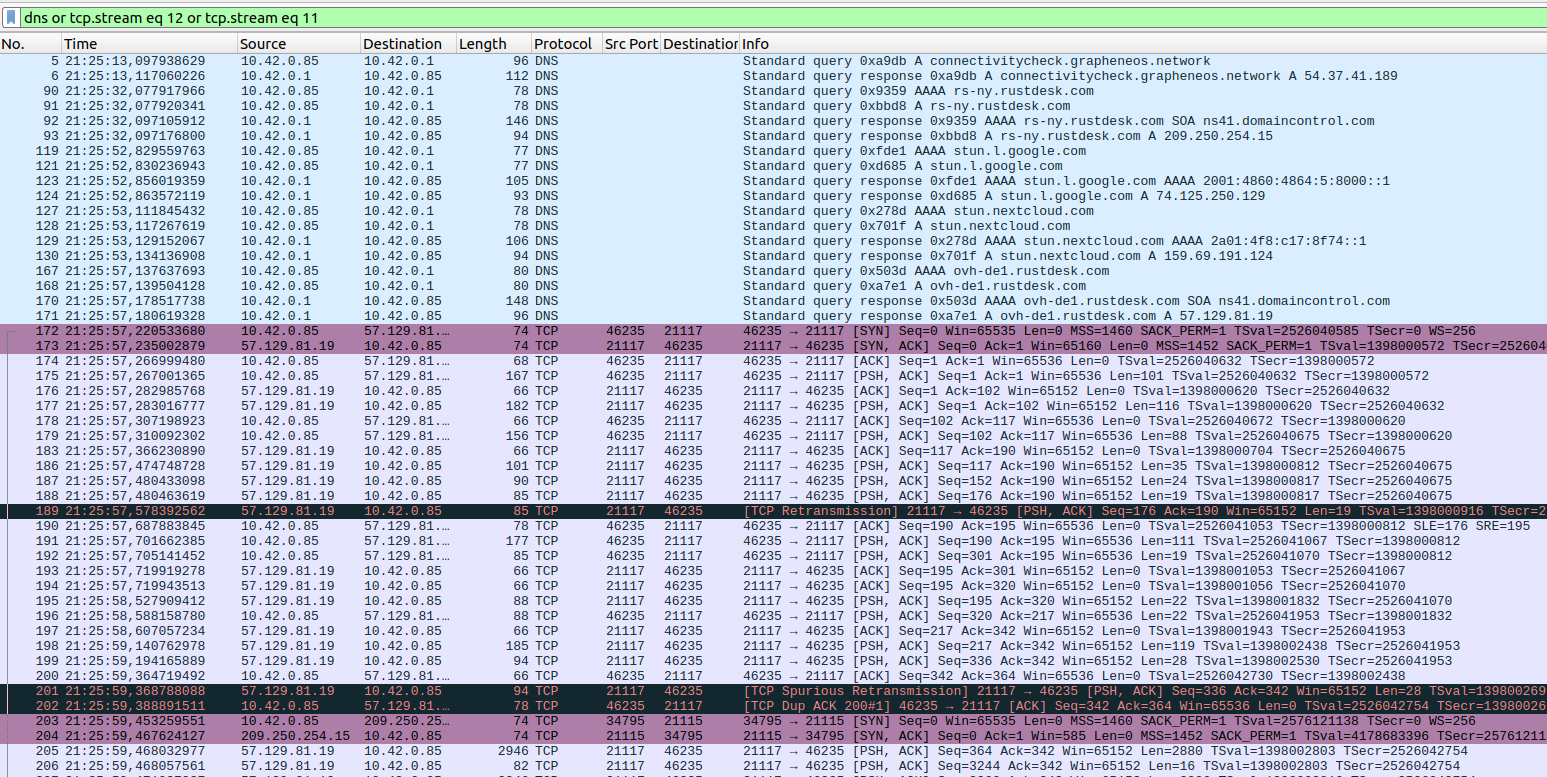

And on we go with yet another look at RustDesk, a cool piece of software for remote support, in my case particularly for smartphones: What does the project setup look like, and how does it transfer data over the network?

Project Setup, Licenses, Code Availability

Before coming to the network traffic, I decided to say a few words about the project setup, because I find it a bit strange. The website gives the address of a company office in Singapore. A different page states that the laws of the Cayman Islands apply. And that’s pretty much it, so there is very little information about who is actually behind the project.

On the positive side, the server and client software is hosted on GitHub under the AGPL-3.0 and MIT licenses, so the code can be inspected and forked. Another plus is that the client is available on F-Droid, where it is built from source by the F-Droid project rather than by the RustDesk developers. Also on the positive side, the project’s documentation and FAQ are very extensive, so it looks to me like the project is currently well maintained. And apart from the free community edition, they offer paid plans with extra features, so they probably have a sustainable business setup.

Rustdesk – Part 2 – From Mobile to Mobile

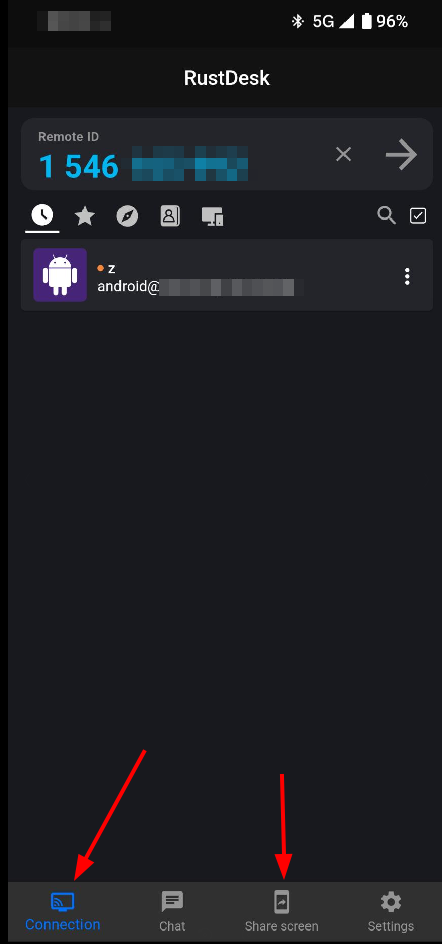

In the previous post I had a look at how to remotely support an Android device from a notebook. While it’s a bit of a setup orgy, once the app is installed on Android and all permissions are granted, it works really well. An interesting twist: The RustDesk Android app contains both the client and the supporter roles. So is it possible to support an Android user from another Android smartphone?

And indeed, it’s possible and works very well. Connecting to the remote mobile device works just as it does from the notebook, and touch interaction on the other device is possible as well. There is of course a bit of a lag when tapping a button remotely, and keys on the remote on-screen keyboard have to be pressed slowly to prevent missing or wrong characters. This is no big issue, however, and I am very impressed. I’ve been waiting for this for many years!

The screenshot on the left shows RustDesk’s main window on Android. The ‘Connection’ button on the lower left opens the tab in which a RustDesk remote ID can be entered to establish the connection to a remote device to support. And the ‘share screen’ button opens the tab where the remote party can start the screen sharing session and allow the incoming connection.

Remote Android Support with Rustdesk – Part 1

‘Mobile First’ has definitely arrived, which made me wonder how I could remotely support people on their mobile devices in the future, as some will probably drop their notebook entirely. One option that looks particularly appealing to me is the open source RustDesk app. It can either be used via the project’s server on the net to connect the device to be supported with the supporter’s notebook, or it can be used with a self-hosted server if one prefers to control the full transmission chain. Let’s have a look at how this works in practice.

Lithium-Ion Batteries and Temperature

Earlier this year, I wrote a post about being surprised that my Anker A1336 72 Wh power bank, which I very much like by the way, shows an interesting charge and discharge behavior when it gets warm. While it can be charged and discharged at 100 W, and can even be charged and discharged at the same time, as soon as it reaches around 42 °C on the inside, it stops charging other devices and continues to charge itself at only 22 W. At the time I was wondering why thermal protection was kicking in at such a low temperature. However, when I recently researched the topic a bit more, I found out that this is actually the proper and expected behavior.
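The observed behavior boils down to a simple temperature-dependent power policy. The following Python sketch models it using only the figures from this post (100 W nominal power, a threshold of around 42 °C, 22 W reduced charging input). The names `PowerLimits`, `power_limits` and `hours_to_charge` are hypothetical, and the real firmware may well use additional inputs, such as hysteresis or several temperature sensors, that are not known here.

```python
from dataclasses import dataclass

# Figures taken from the post; the actual firmware logic is not public and
# may use hysteresis or several sensors, which this sketch ignores.
NOMINAL_W = 100.0          # normal charge and discharge power
REDUCED_INPUT_W = 22.0     # charging power once the pack is warm
THERMAL_LIMIT_C = 42.0     # approximate internal temperature threshold


@dataclass(frozen=True)
class PowerLimits:
    input_w: float   # maximum power accepted from the charger
    output_w: float  # maximum power delivered to connected devices


def power_limits(internal_temp_c: float) -> PowerLimits:
    """Hypothetical thermal policy mirroring the observed behavior."""
    if internal_temp_c >= THERMAL_LIMIT_C:
        # Too warm: stop charging other devices, keep charging itself slowly.
        return PowerLimits(input_w=REDUCED_INPUT_W, output_w=0.0)
    # Below the threshold: full power in both directions, also simultaneously.
    return PowerLimits(input_w=NOMINAL_W, output_w=NOMINAL_W)


def hours_to_charge(capacity_wh: float, input_w: float) -> float:
    """Idealized charging time, ignoring conversion losses and charge tapering."""
    return capacity_wh / input_w
```

Ignoring losses and the tapering charge curve, refilling the 72 Wh pack would take about 72 / 100 ≈ 0.7 hours at full input power, but about 72 / 22 ≈ 3.3 hours once the thermal limit has kicked in.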

For NFC Payment, I Turn Off NFC on my Mobile

Yes, this is a bit of a strange headline, but I’m not kidding. For NFC payment ‘with’ my mobile device, I actually turn off NFC to make it work.
