In the previous post I had a look at how to remotely support an Android device from a notebook. While it’s a bit of a setup orgy, once RustDesk is installed on Android and all permissions are granted, it works really well. An interesting twist: the RustDesk Android app contains both roles, client and supporter. So is it possible to support an Android user from another Android smartphone?

And indeed, it’s possible and works very well. Connecting to the remote mobile device works just as it does from the notebook, and touch interaction with the remote device works as well. There is, of course, a bit of a lag when tapping a button remotely, and keys on the remote on-screen keyboard have to be pressed slowly to avoid missing or wrong characters. This is no big issue, however, and I am very impressed. I’ve been waiting for this for many years!

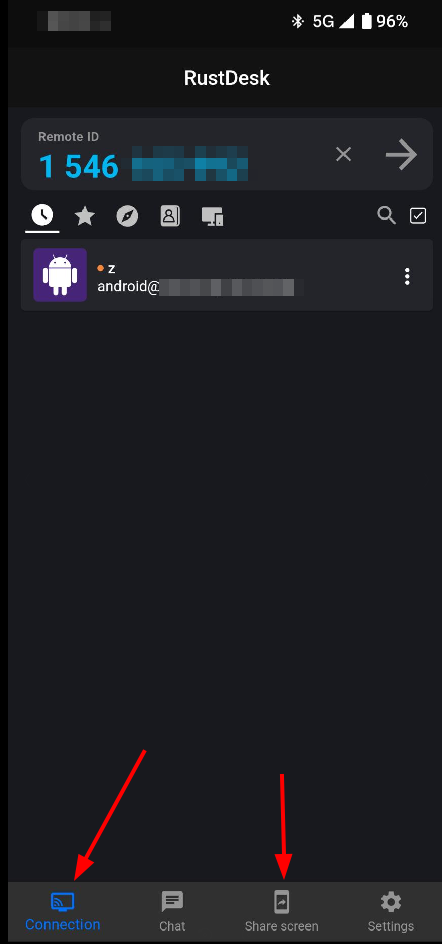

The screenshot on the left shows RustDesk’s main window on Android. The ‘Connection’ button on the lower left opens the tab in which the supporter enters the RustDesk ID of the remote device to establish a connection to it. The ‘share screen’ button opens the tab in which the remote party starts the screen sharing session and accepts the incoming connection.