If you are in the business of looking at decodes of LTE or 5G signaling messages, you’ve probably been at the point where you wanted to copy and paste part of a message into an email. The problem: More often than not, the parameters you are interested in are deeply nested inside other parameters. 60 to 80 spaces in front of parameter lines are not out of the ordinary these days. That looks pretty ugly when copied and pasted, and the result is often totally unreadable. So I was looking for a way to remove a specific number of leading spaces from all lines of a text file. Should be an easy Google search, I thought, but the result was quite disappointing.
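For illustration, here is a minimal Python sketch of the task described above: removing up to a fixed number of leading spaces from every line. It is not the solution from the full post; the function name `strip_leading_spaces` and the sample decode lines are made up for this example.

```python
def strip_leading_spaces(text: str, count: int) -> str:
    """Remove up to `count` leading spaces from every line of `text`."""
    stripped = []
    for line in text.splitlines():
        # Count the spaces actually present, so that lines with less
        # indentation than `count` do not lose any real characters.
        leading = len(line) - len(line.lstrip(" "))
        stripped.append(line[min(count, leading):])
    return "\n".join(stripped)


# Hypothetical, heavily shortened decode fragment (not from a real trace).
sample = (
    "        rrcReconfiguration\n"
    "            measConfig\n"
    "      short\n"
)
print(strip_leading_spaces(sample, 8))
```

Lines that are indented by fewer spaces than requested simply lose all of their indentation, which keeps the relative nesting of the deeper lines intact.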

Continue reading Removing Indentation from 3GPP Messages – ChatGPT vs. Google Search

Wi-Fi Bandwidth Vs. Range Vs. Throughput

In the previous post on using a Wi-Fi channel in the 2.4 GHz band to connect my Starlink terminal in a courtyard to an in-house receiver, I noticed that the 20 MHz channel was limiting my speed to around 60 Mbps. That’s far less than what Starlink supports and also far less than the roughly 100 Mbps that a 20 MHz 802.11n channel supports in the 2.4 GHz band on the IP layer (130 Mbps on the PHY). At first, I thought that there was little that could be done about it, as the channel is clearly power limited. Focusing the signal energy in a certain direction might help, but there are no antenna connectors on the Starlink Wi-Fi router to which I could connect directional antennas. But then I noticed an interesting effect when increasing the channel bandwidth.

Continue reading Wi-Fi Bandwidth Vs. Range Vs. Throughput

Starlink – Part 14 – Dishy Among Trees and Hills

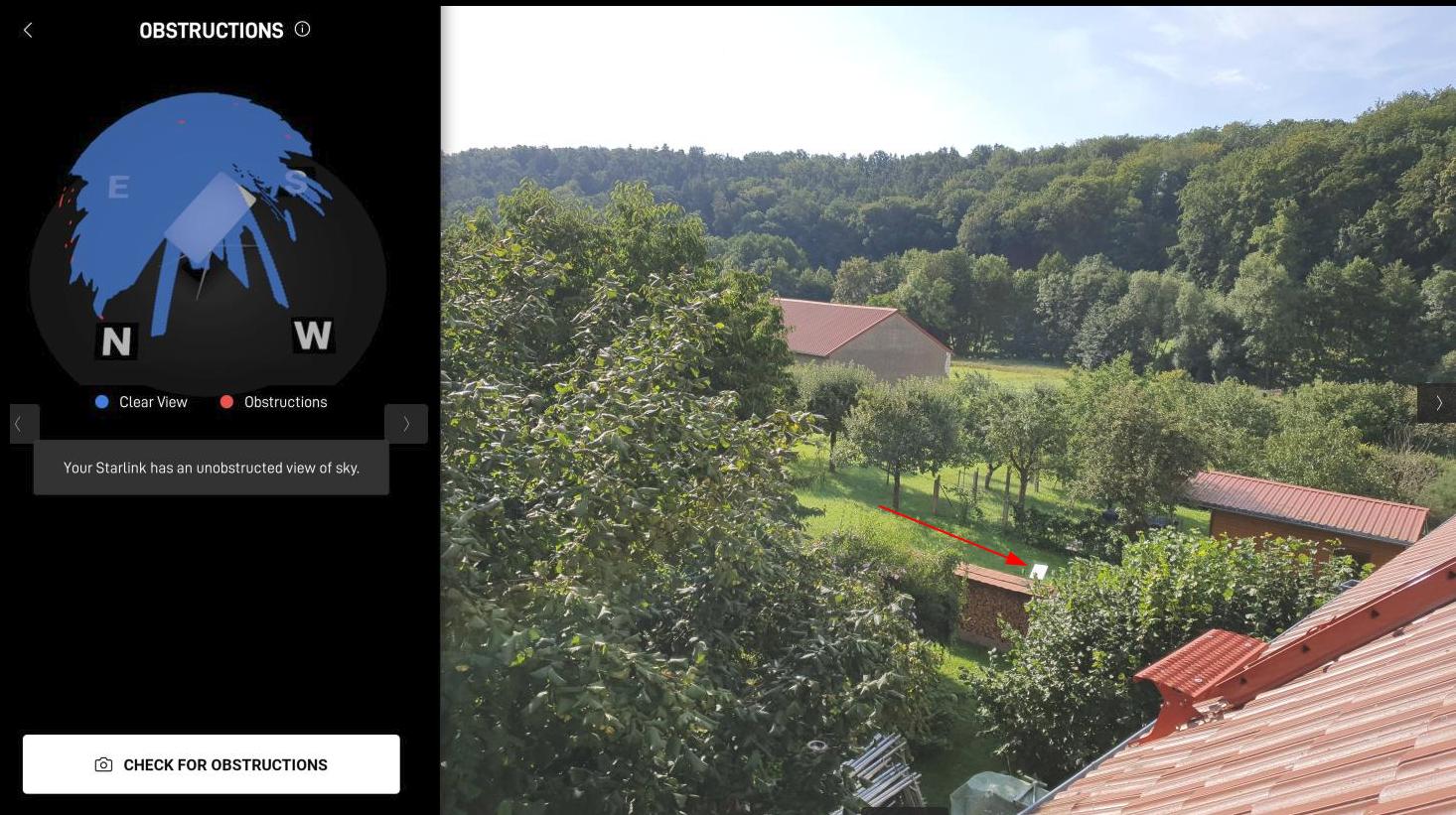

And further I went into ‘terra incognita’ with Starlink, this time to a place which was quite challenging in terms of terrain. But again, looking at the bright side of it, it was the first time I had to experiment for a while to find a good spot to place ‘Dishy’.

Continue reading Starlink – Part 14 – Dishy Among Trees and Hills

Starlink – Part 13 – Local Wi-Fi on 2.4 GHz

In the previous post, I had a look at the 5 GHz Wi-Fi of the Starlink router at close range for maximum throughput. In this post, I’ll have a look at the completely opposite scenario: How Starlink’s Wi-Fi performs when used to bridge a larger distance. This wasn’t actually a theoretical test, but the scenario I bought my Starlink terminal for in the first place: Fast Internet connectivity in ‘underserved’ places. As you can see in the picture above, I put the satellite antenna itself in a courtyard, while the router and the 230V power supply (more about that in a follow-up post) were in the car next to it.

Continue reading Starlink – Part 13 – Local Wi-Fi on 2.4 GHz

Starlink – Part 12 – Local Wi-Fi on 5 GHz

Out of the box, a Starlink terminal comes with the satellite dish, affectionately called ‘Dishy’, and a Wi-Fi Router. As an Ethernet port is not part of the package and has to be ordered separately, Wi-Fi is the only default connectivity option. So how does that Wi-Fi Access Point perform?

Continue reading Starlink – Part 12 – Local Wi-Fi on 5 GHz

Starlink – Part 11 – Obstruction Diagram

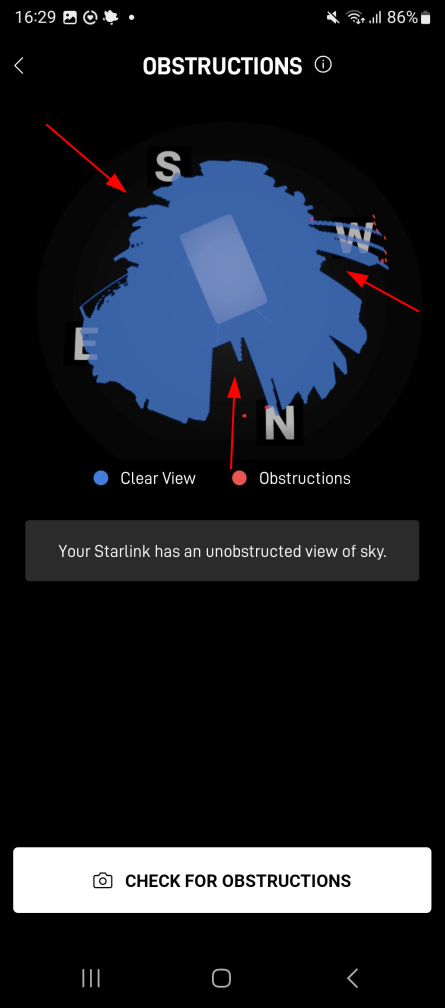

How much of the sky can be obstructed for Starlink to still work without connectivity breaks (in Germany)? This has been one of the most important questions I had about Starlink before I could try it out on my own.

Here’s an image that shows an obstruction diagram, which the Starlink app on a smartphone produces after ‘Dishy’ has been up and running for about 6 hours. The blue sphere shows the parts of the sky that are visible, while the black area shows where obstacles are in the way. The red arrows were put into the image by me. For this image, the Starlink dish was on my rooftop, and the sky was blocked as follows:

1) About half the sky to the south was completely blocked by a near-vertical part of the roof, which is about 2.5m high (red arrow on the top left). Dishy was about 0.5m away from it, and hence this part of the roof obstructed pretty much half of the sky.

2) Towards the north, there was a solid obstacle about 1m away and about 1.5m high, which also shows up nicely in the image (red arrow at the bottom).

3) And finally, there was another 1.5m high obstacle towards the west, also around 1m away from the antenna, which also blocked the sky.
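To get a feeling for what these numbers mean, here is a small back-of-the-envelope sketch that converts obstacle height and distance into the elevation angle below which the sky is blocked. It assumes that the stated heights are measured from the level of the dish and that the ground in between is flat, neither of which is stated above, so treat the results as rough estimates only. The function name is made up for this example.

```python
import math


def blocked_elevation_deg(height_m: float, distance_m: float) -> float:
    """Elevation angle (degrees above the horizon) below which an obstacle
    of the given height at the given distance hides the sky.

    Assumption: height is measured relative to the dish, flat geometry.
    """
    return math.degrees(math.atan2(height_m, distance_m))


# Values taken from the three obstacles described above.
print(round(blocked_elevation_deg(2.5, 0.5), 1))  # south: roof, 2.5m high, 0.5m away -> 78.7
print(round(blocked_elevation_deg(1.5, 1.0), 1))  # north and west: 1.5m high, 1m away -> 56.3
```

Under these assumptions, the roof to the south hides everything up to almost 80 degrees above the horizon in that direction, which fits the observation that about half of the sky was gone.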

Continue reading Starlink – Part 11 – Obstruction Diagram

Starlink – Part 10 – Windows over Starlink – Uplink Performance

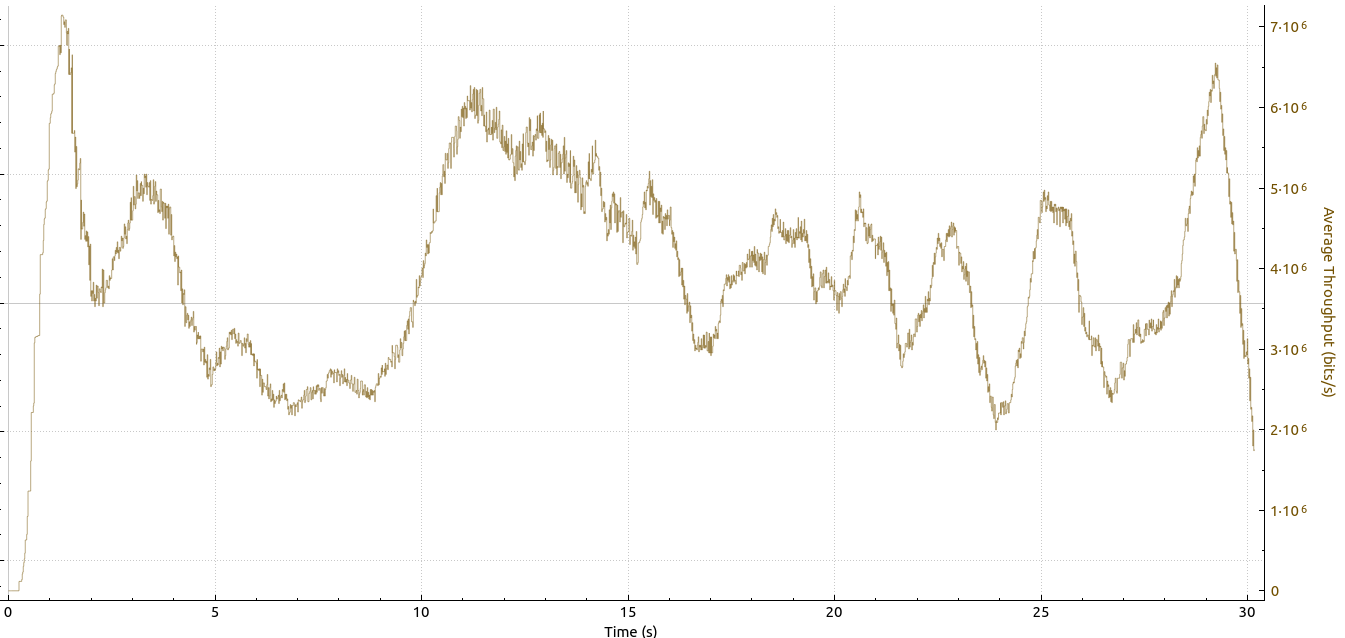

Part 8 of this series took a closer look at the downlink performance of two Windows 11 based machines over Starlink. This episode now takes a closer look at the uplink.

Before I go on, let’s set expectations: As was shown in parts 5 and 6, there is a significant difference in uplink performance on Linux machines depending on which TCP congestion avoidance algorithm is used. With the default TCP Cubic algorithm, throughput was around 5 Mbps, whereas TCP BBR pushed uplink throughput to 30 Mbps.

So here’s what uplink throughput looks like on Windows with the default TCP parameters:

Continue reading Starlink – Part 10 – Windows over Starlink – Uplink Performance

Starlink – Part 9 – Speedtest.net and LibreSpeed

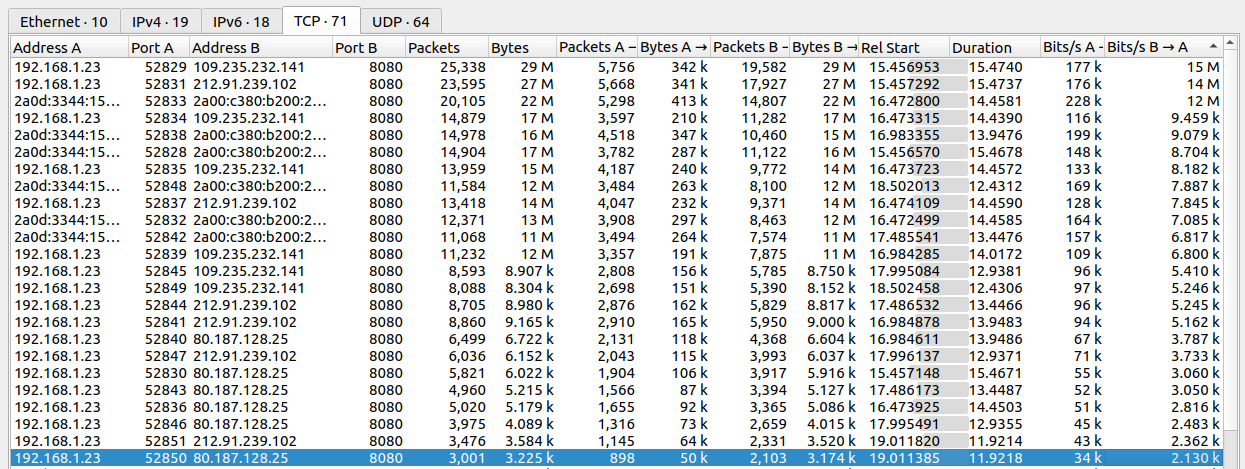

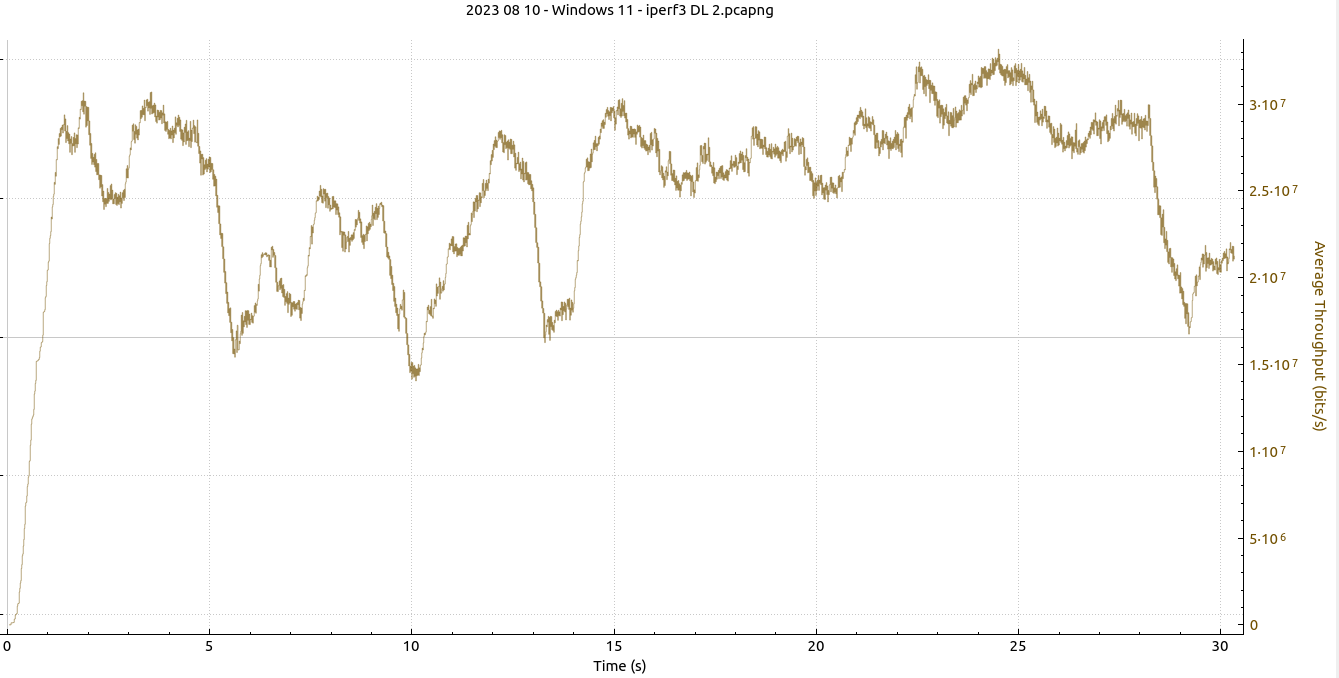

In part 8 of my Starlink series, I’ve been looking at downlink throughput with a Windows notebook. The maximum speed I could achieve with iperf3 was around 30 Mbps, while tools like speedtest.net and LibreSpeed could easily reach 150 to 200 Mbps. So what’s the difference and which speed test reflects real world use?

Continue reading Starlink – Part 9 – Speedtest.net and LibreSpeed

Starlink – Part 8 – Windows over Starlink – Downlink Performance

In my previous posts on Starlink, I’ve used Linux as the operating system on my notebook and on the network side server for my iPerf3 throughput measurements. Due to packet loss on the link, there is a significant difference between using different TCP congestion avoidance algorithms. When the standard Cubic algorithm is configured on both sides, the data transfer over a single TCP connection is 5 times slower than with BBR. So what happens when I use a Windows client over Starlink?
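As a side note, here is a hedged sketch of how an application on Linux can ask for a particular congestion control algorithm on a single TCP socket. This is not necessarily how the iPerf3 measurements in this series were configured. The helper name is made up, and the code assumes a Linux kernel in which the requested algorithm (for example `bbr`) is available; on other systems it simply returns `None`.

```python
import socket


def set_congestion_control(sock: socket.socket, algorithm: str):
    """Try to select `algorithm` for this socket and return the name of the
    algorithm that is in effect afterwards, or None if unsupported."""
    option = getattr(socket, "TCP_CONGESTION", None)  # only defined on Linux
    if option is None:
        return None
    try:
        sock.setsockopt(socket.IPPROTO_TCP, option, algorithm.encode())
    except OSError:
        pass  # algorithm not loaded or not permitted: keep the system default
    try:
        raw = sock.getsockopt(socket.IPPROTO_TCP, option, 16)
    except OSError:
        return None
    # The kernel returns a NUL-padded name such as b"cubic\x00\x00...".
    return raw.split(b"\x00", 1)[0].decode()


with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
    print(set_congestion_control(s, "bbr"))
```

If the printed name is still `cubic`, the BBR module is most likely not available on that machine, and the socket keeps using the system default.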

Continue reading Starlink – Part 8 – Windows over Starlink – Downlink Performance

Starlink – Part 7 – Voice over Starlink

After seeing the many transmission errors over the Starlink air interface that TCP can handle quite well, particularly on Linux with the BBR congestion avoidance algorithm, I was of course wondering if this has an audible impact on real time voice services when used over Starlink. So here’s how that went:

Continue reading Starlink – Part 7 – Voice over Starlink